Dqn Architecture

Lec3 Dqn

Understanding Dqn Her Deep Robotics

3

Arxiv Org Pdf 1507

Torch Dueling Deep Q Networks

Google Ai Blog An Optimistic Perspective On Offline Reinforcement Learning

In this chapter, you will learn about:.

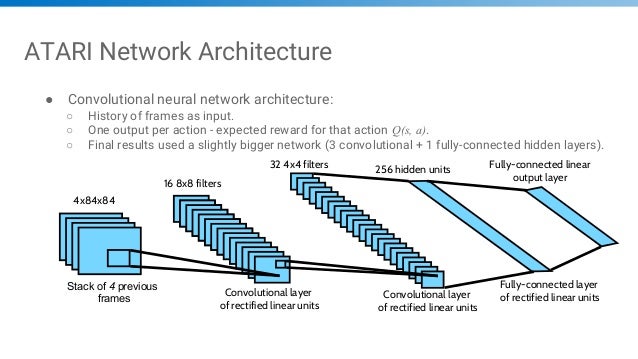

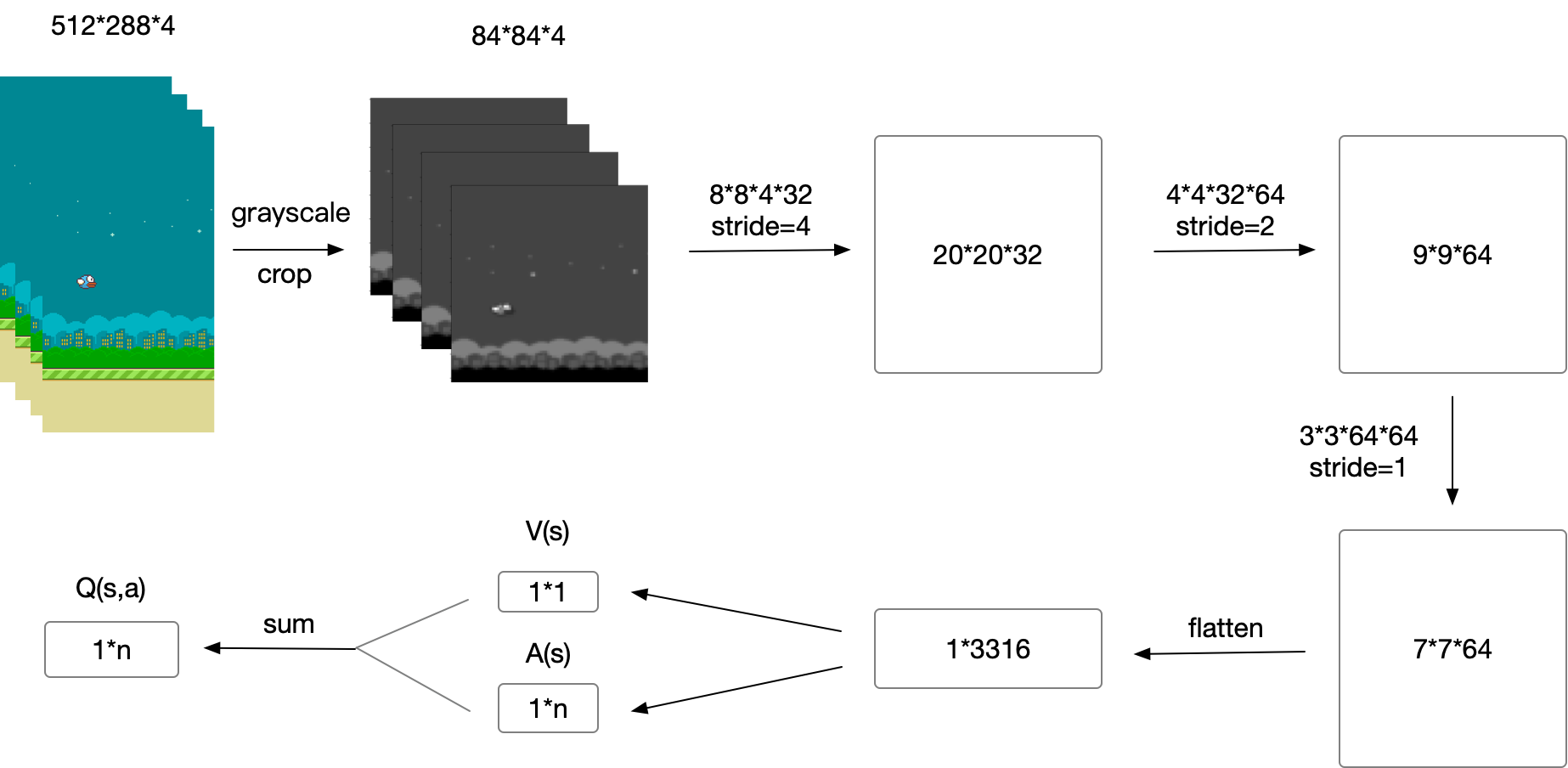

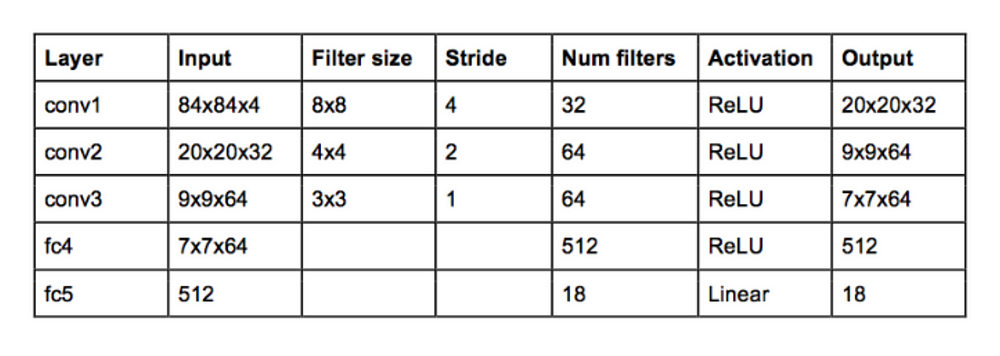

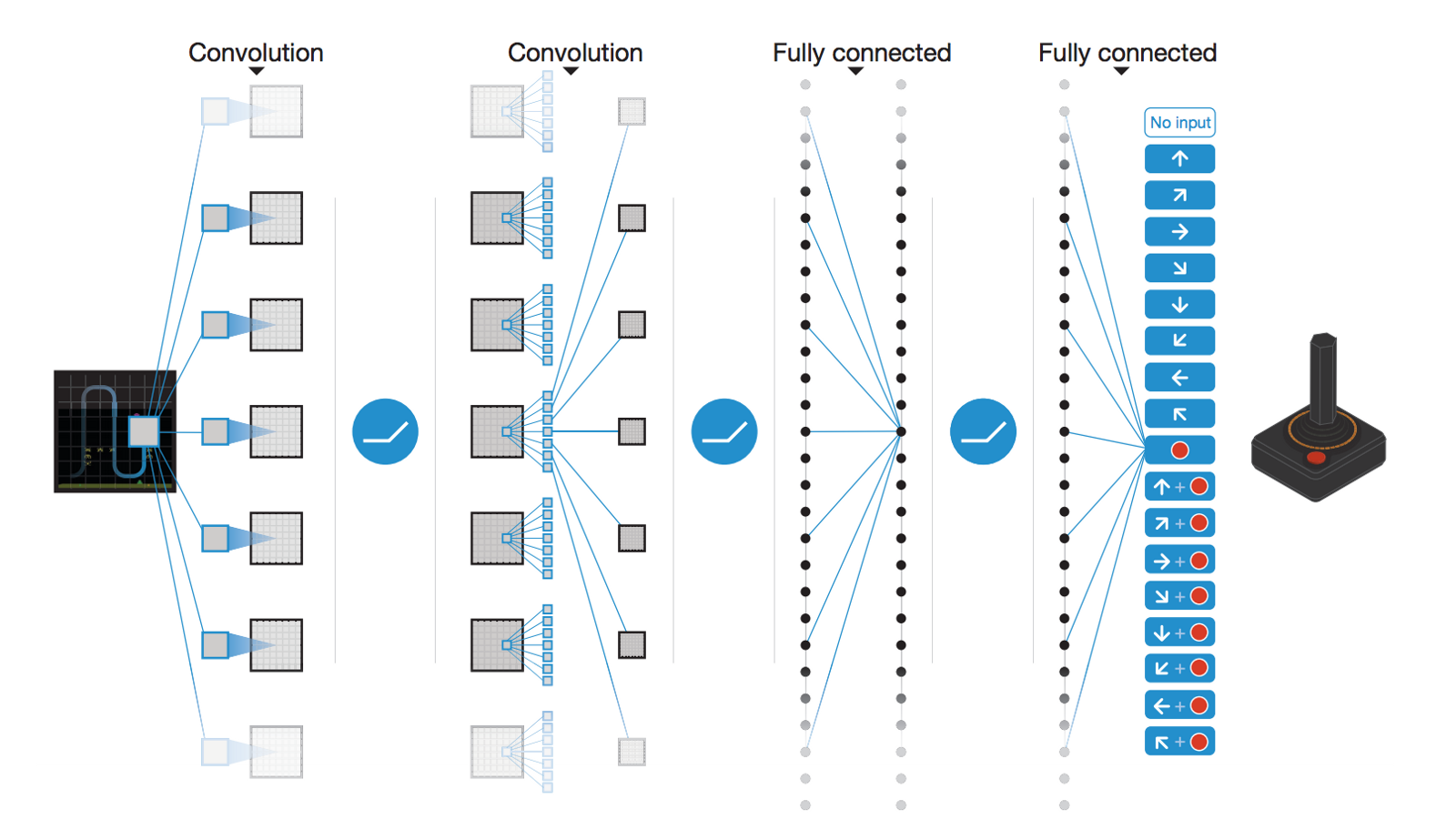

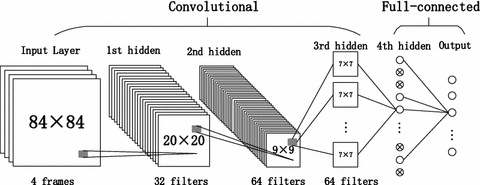

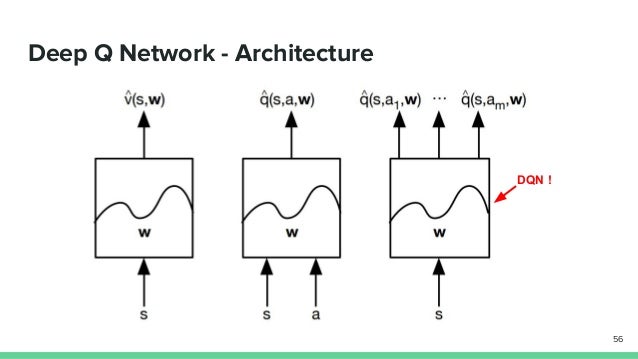

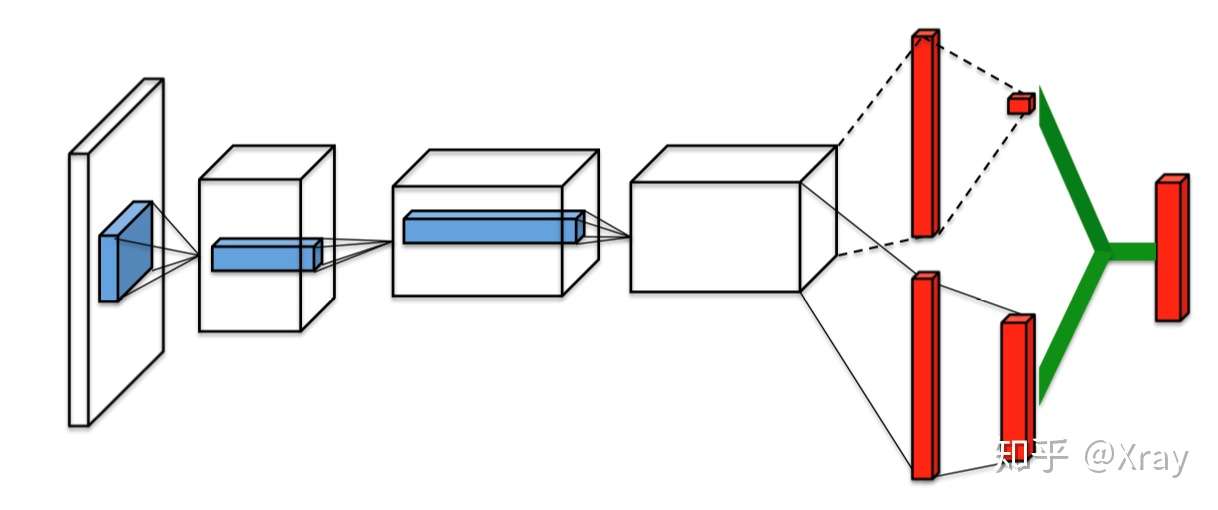

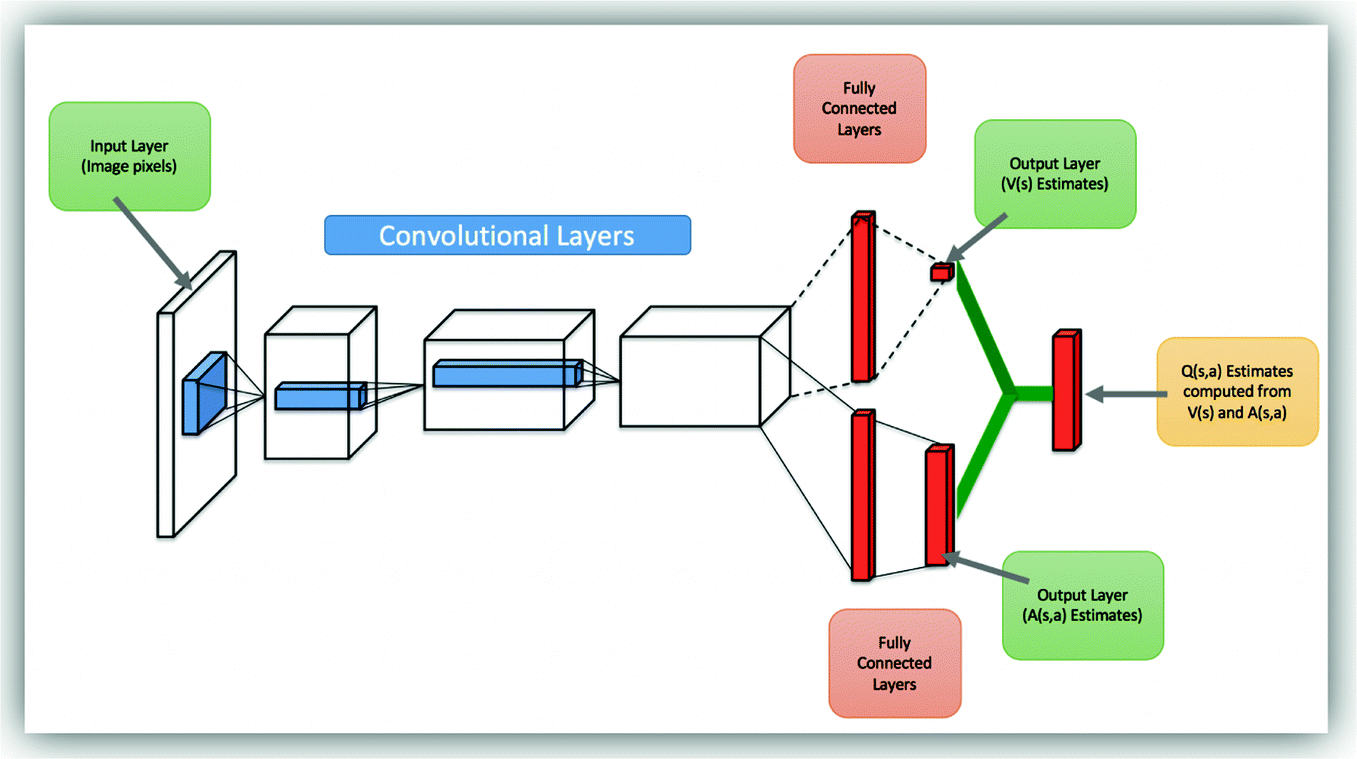

Dqn architecture. The agent has to decide between two actions - moving the cart left or right - so that the pole attached to it stays upright. DQN uses an architecture called the deep convolutional network, which utilizes hierarchical layers of tiled convolutional filters to exploit the local spatial correlations present in images. Architecture Here is the DQN architecture.

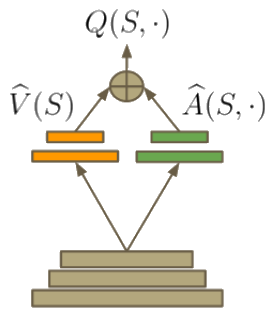

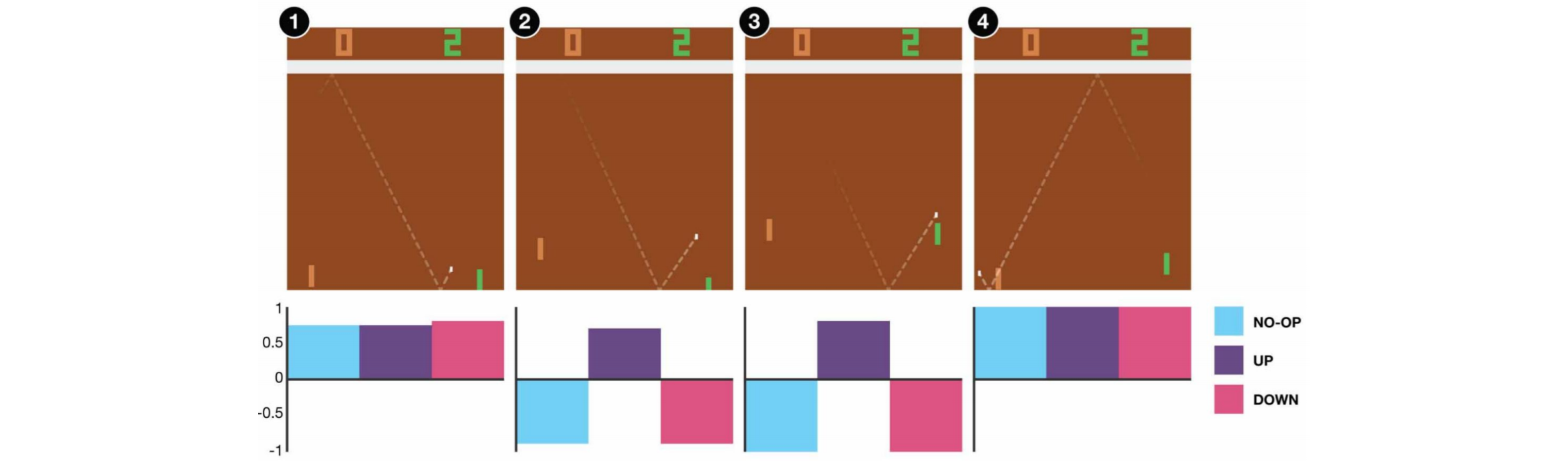

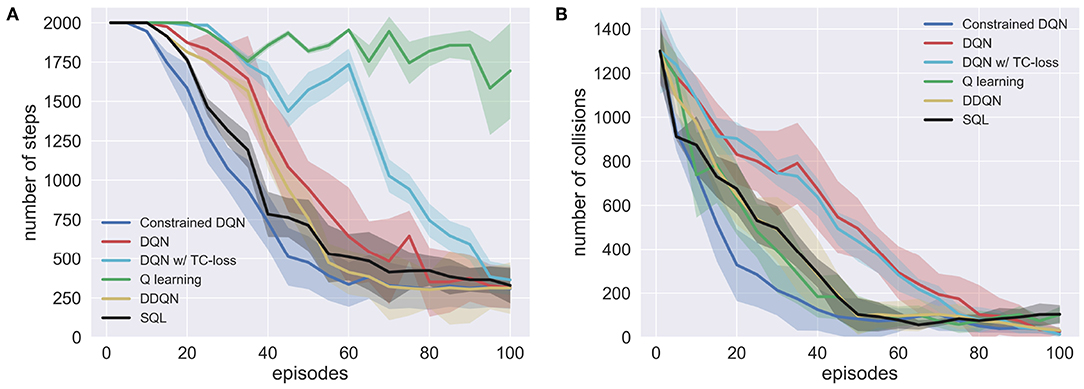

This architecture was trained separately on seven games from Atari 2600 from the Arcade Learning Environment. In recent years there have been many successes of using deep representations in reinforcement learning. Our dueling architecture represents two separate estimators:.

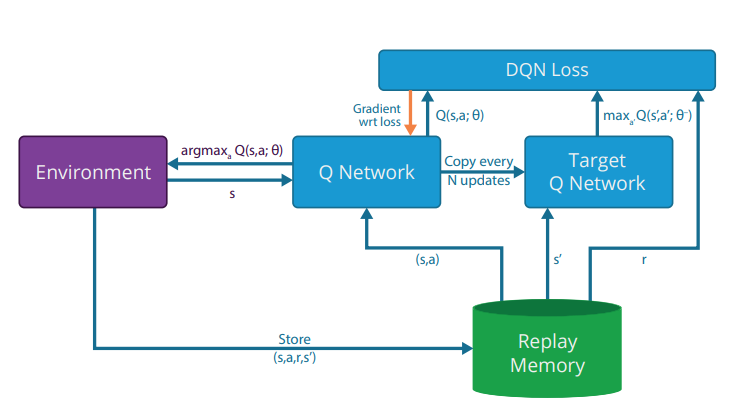

The only thing to do is to modify the DQN architecture by adding these new streams:. Reduce overestimation by decomposing the max operation in the target into action selection and action evaluation 9. The resulting architecture outperforms double DQN with prioritized replay on most Atari games, particularly games with repetitive actions.

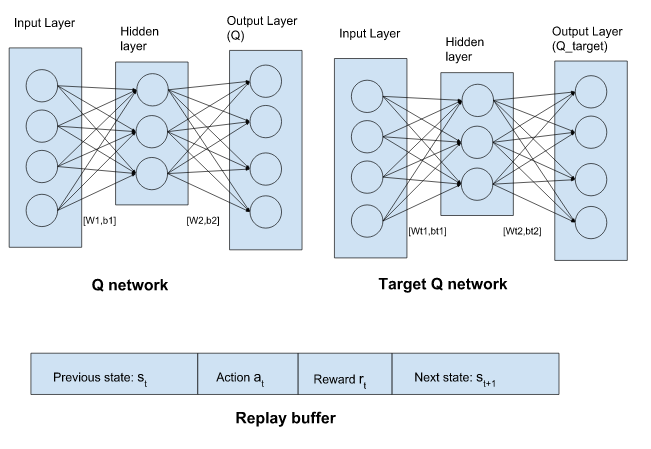

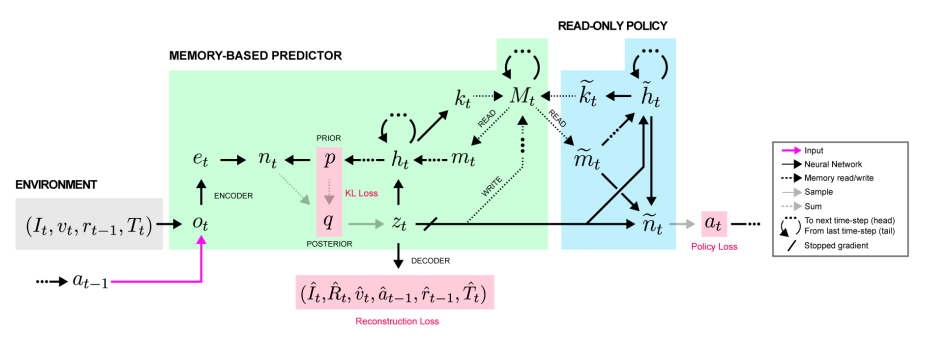

The paper introduces a Rainbow DQN agent (R a i n b o w) and an Actor Critic Hanabi Agent (A C H A). The architecture of DQN, replacing only its first fully con-nected layer with a recurrent LSTM layer of the same size. DQN variants • Double DQN • Prioritized Experience Replay • Dueling Architecture • Asynchronous Methods • Continuous DQN 8.

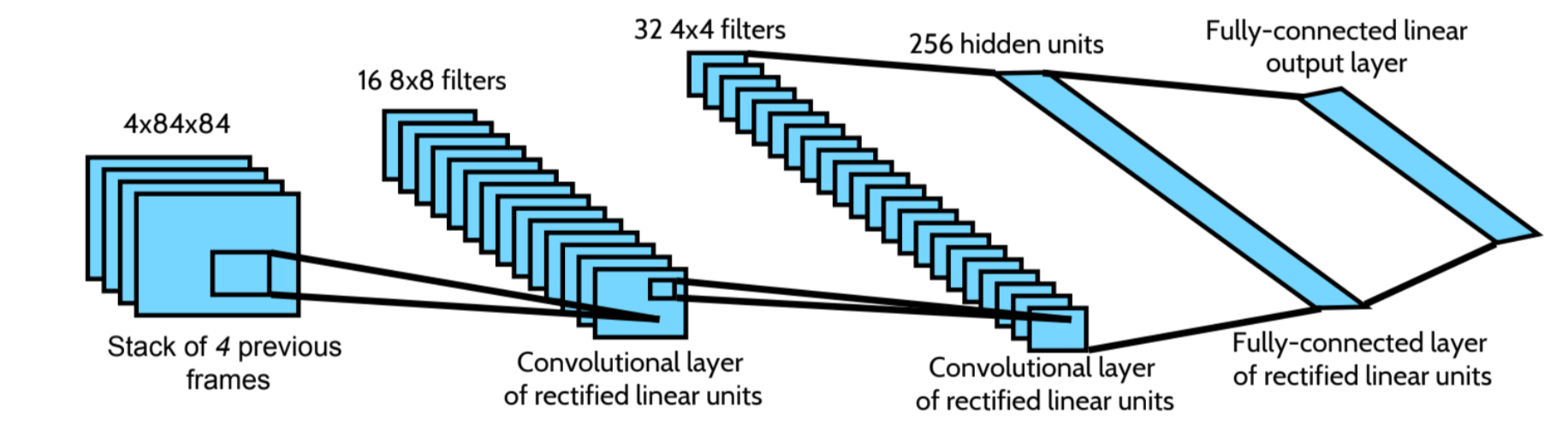

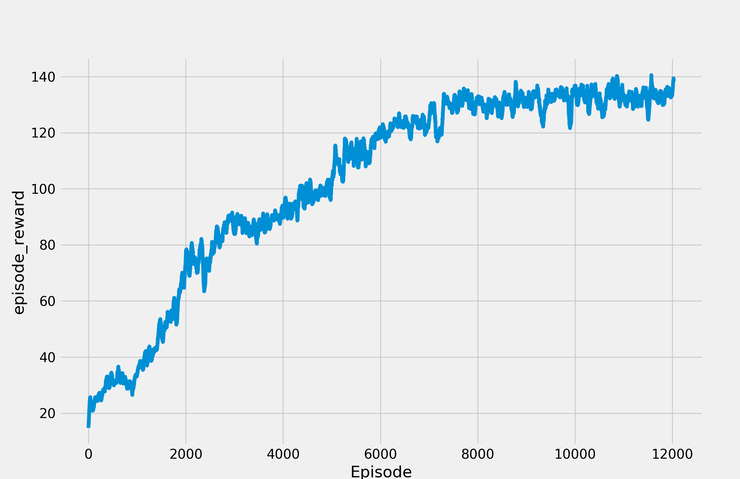

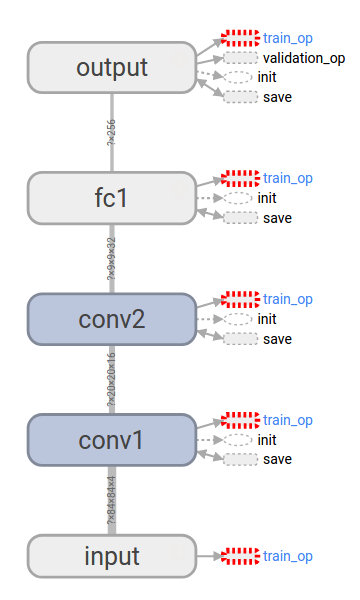

We take the last 4 previous video frames and feed them into convolutional layers followed by fully connected layers to compute the Q values for each. Using a single network architecture and fixed set of hyper-parameters, the resulting agent, Recurrent Replay Distributed DQN, quadruples the previous state of the art on Atari-57, and matches the state of the art on DMLab-30. Dqn.fit(env, nb_steps=5000, visualize=True, verbose=2) Test our reinforcement learning model:.

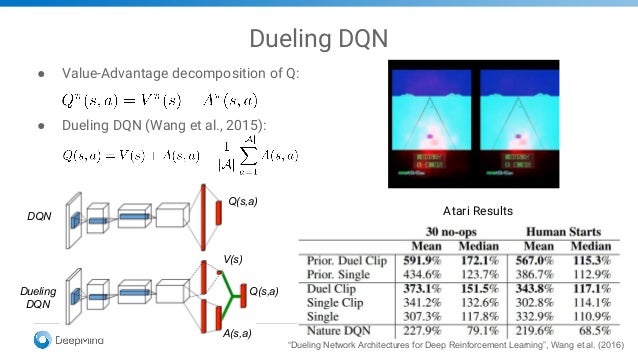

In 16, Wang et al. The network is converged by checking the loss values but the reward of 100 steps still too low. I am thinking about why your implement is of high efficiency.

The one way which fits well with the deep Q network architecture is so-called Bootstrap DQN. Use two Q-networks, one for select action, the other for evaluate action •Limited experience replay –Prioritized Experience Replay (Schaul, T et al. But the raw frames will have 210 x 160 pixels with a 128 color palette and it will clearly take a lot of computation and memory if we feed the raw pixels directly.

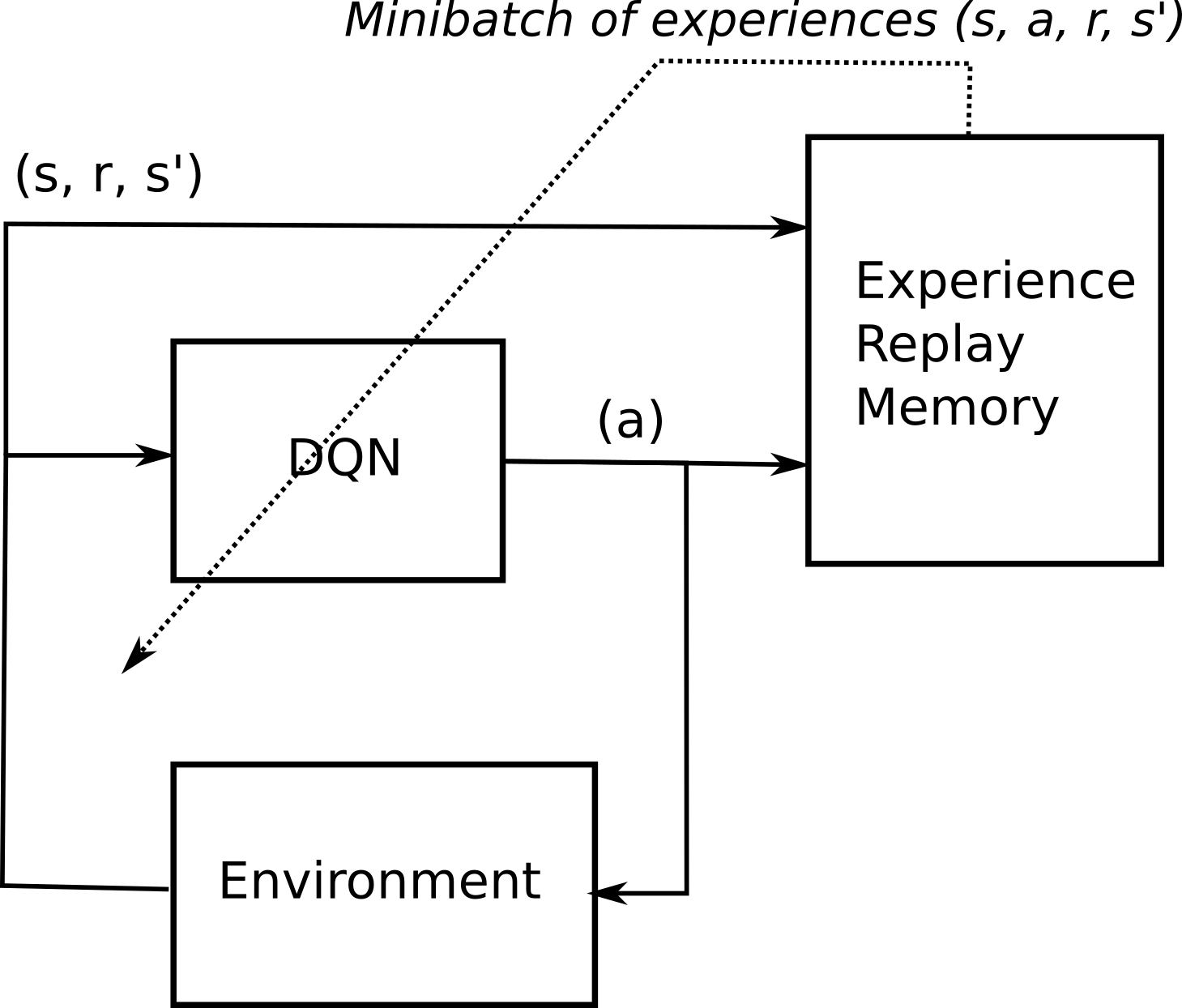

We have also provided the code to demo Tabular GVF/NO-GVF methods. (The original is implemented with Torch) - DRQN has different mechanism from DQN to train - MQN, RMQN, FRMQN have a Key-Value store memory - Implement. The idea is that some experiences may be more important than others for our training, but might occur less frequently.

Architecture Like the standard DQN architecture, we have convolutional layers to process game-play frames. We create unique design solutions for a variety of spaces, such as:. R a i n b o w is based on an architecture by Hessel et al.

THEANO_FLAG="device=cpu" ipython ./dqn/train.py -- --mode hra+1 -o nb_experiments 1 --mode can be either of dqn, dqn+1, hra, hra+1, or all. Deep Q Networks (DQNs) Architecture. In this paper, experience replay mechanism was proposed.

Deep Q learning, as published in (Mnih et al, 13), leverages advances in deep learning to learn policies from high dimensional sensory input. We compared DQN with the best performing methods from the reinforcement learning literature on the 49 games where results were. They must derive efficient.

The DQN architecture from the original paper 4 is implemented, although with some differences. The implementation follows from the paper - Playing Atari with Deep Reinforcement Learning and Human-level control through deep reinforcement learning. We will look at some of the improvements made to DQN architecture, such as double DQN and dueling network architecture.

Backpropagating through a recurrent network requires each backward pass to have many timesteps of game screens and target values. Then it feeds last two screens as an input to the neural network. The first deep -network (DQN) algorithm which successfully combines a powerful nonlinear function approximation technique known as DNN together with the -learning algorithm was proposed by Mnih et al.

To use reinforcement learning successfully in situations approaching real-world complexity, however, agents are confronted with a difficult task:. It is the first agent to exceed human-level performance in 52 of the. We accomplish this by looking at the last Lstates, fs t t(L 1);:::;sg, and feed these into a convolutional neural network (CNN) to get intermediate outputs CNN(s t i) = x t i.

MQN Architecture and Interior Design. In Hanabi, Rainbow achieves a self. In short, the algorithm first rescales the screen to 84x84 pixels and extracts luminance.

Dqn.test(env, nb_episodes=5, visualize=True) This will be the output of our model:. Beyond DQN •More stabled learning –Double DQN (Van, H et al. Try to add epochs, change the architecture, add fixed Q-values, change the learning rate, use a harder environment (such as Health Gathering)…and so on.Have fun!.

The DQN paper was the first to successfully bring the powerful perception of CNNs to the reinforcement learning problem. This image is processed by three convolutional layers (Cun et al. Prioritized Experience Replay Theory.

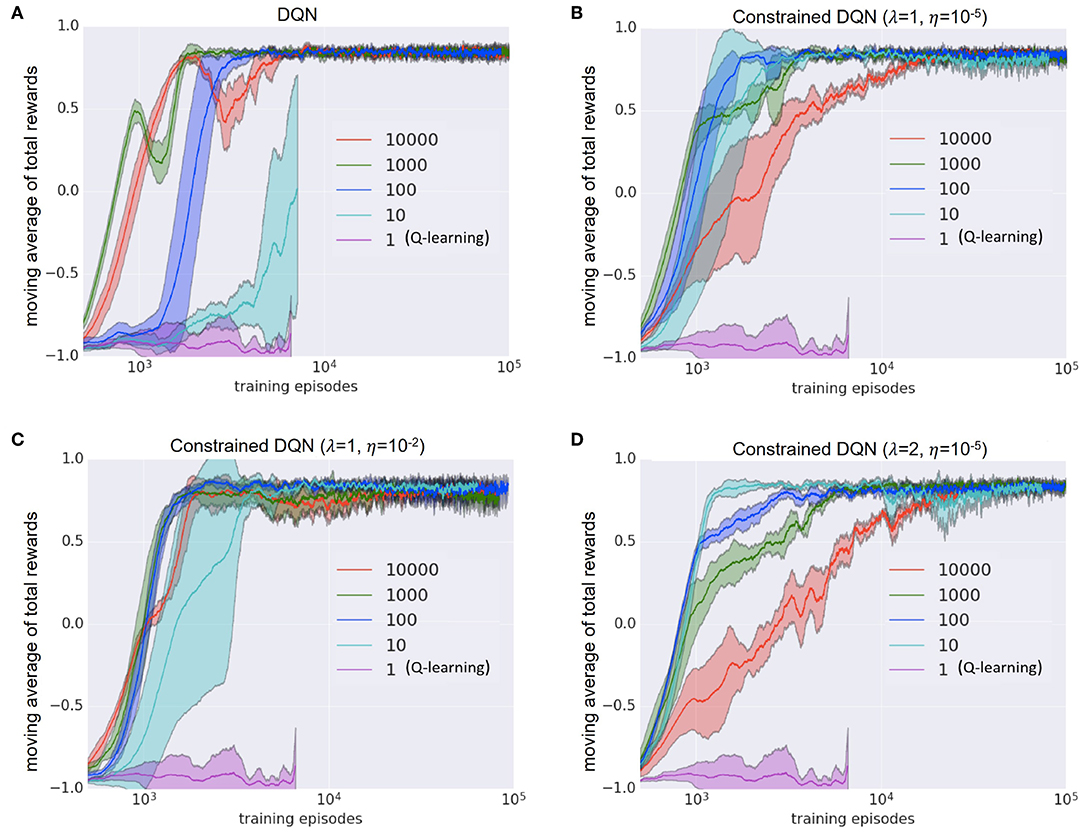

Each action taken by the agent will generate an experience, which consists of the current state s,. The main benefit of this factoring is to generalize learning across actions without imposing any change to the underlying reinforcement learning algorithm. This tutorial shows how to use PyTorch to train a Deep Q Learning (DQN) agent on the CartPole-v0 task from the OpenAI Gym.

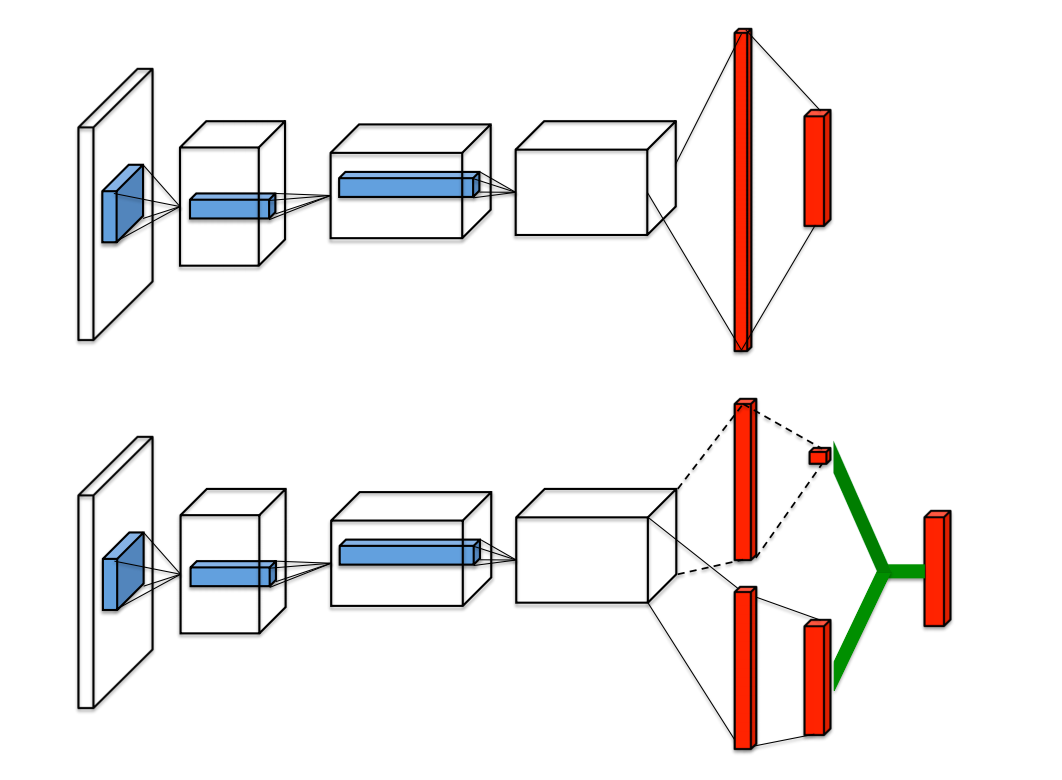

The two streams are combined via a special aggregating layer to produce an estimate of the state-action value function Qas shown in Figure 1. Reinforcement Learning in AirSim#. The dueling architecture consists of two streams that represent the value and advantage functions, while sharing a common convolutional feature learning module.

Prioritized Experience Replay (PER) was introduced in 15 by Tom Schaul. DQN architecture with the loss function described by L( ) = E(r+ max a0 Q(s0;a0j 0) Q(s;aj ))2 where and 0are parameters of the estimation and target deep neural networks respectively. 1998) and the out-.

DQN-Atari Deep Q-network implementation for Pong-vo. Our dueling network represents two separate estimators:. May be I need to do more job on debugging DQN, simplify the network is a choice (I just add too many things into the network:.

From there, we split the network into two separate streams, one for estimating the state-value and the other for estimating state-dependent action advantages. You first need to train the model using one of the above commands (Tabular GVF or no-GVF) and then run the demo. Parameters are learned from scratch.

The architecture of DDPG is shown in Figure 2, the actor network is DQN similar to the architecture in Figure 2. (Created using NN-SVG and draw.io) The state, which is an image of the screen, passes through a series of convolutional layers, where several filters, activations and pooling operations will be applied, to reduce it to a more manageable subspace. With a single architecture and choice of hyperparameters the DQN.

In this chapter, we will explore how DQN works and also learn how to build a DQN that plays any Atari game by taking only the game screen as input. Once the optimal value function is known, an optimal policy can be derived by acting greedily with respect to it. The first layer is an input flatten the observation space followed by three dense.

The first architecture is a basic extension of DQN. Proposed a neural network architecture named dueling architecture (dueling DQN) for model-free RL and experimentally proved that the DQN of dueling architecture can yield better policy. Congratulations on building your very first deep Q-learning model.

Reinforcement Learning (DQN) Tutorial¶ Author:. As a result, this architecture is robust to natural transformations such as changes of. We love to design, plan, and construct spaces that enhance the lives of individuals who use them to live, work, and play.

Double DQN • From Double Q-learning to DDQN 10. We below describe how we can implement DQN in AirSim using CNTK. So, we take a raw frame and pass that to the convolutional layers to understand the game state.

3.6 Distributed DQN (GORILA) The main limitation of deep RL is the slowness of learning, which is mainly influenced by two factors:. The theory of reinforcement learning provides a normative account deeply rooted in psychological and neuroscientific perspectives on animal behaviour, of how agents may optimize their control of an environment. The first fully-connected layer is replaced with a recurrent LSTM layer of the same size.

The original DQN architecture contains a several more tweaks for better training, but we are going to stick to a simpler version for better understanding. In the next article, I will discuss the last improvements in Deep Q-learning:. The inaudible here is that you have to train a set of key, say five or 10 Q value predictors, that all share the same main body parts, so they all have same revolutional layers.

On six of the games, it surpassed all previous approaches, and on three of them, it beat human experts. I wonder if you would like to support more information about the DQN architecture. Notice how the time to learn an acceptable control law increased from approximately 30 to 0 iterations when using the breadth emphasized DQN versus the square DQN.

In the end we will. (DQN) method is achieved by approximating the optimal value function. I will discuss two simple additional improvements to the DQN architecture, Double DQN and Dueling DQN, that allow for improved performance, stability, and faster training time.

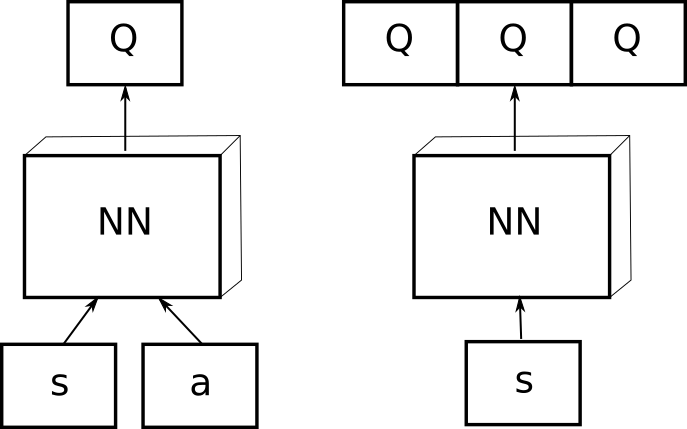

In essence, DQN is a neural network that takes in information about the state of the environment and outputs the estimate of the action-value function for each possible action. OpenAI gym provides several environments fusing DQN on Atari games. A value function plays an important role in RL, because it predicts the expected return, conditioned on a state or state-action pair.

Such like report or references. CNNs / Duel-DQN / Double DQN ). As a San Francisco based Architecture firm, we are dedicated to ensuring all soft story buildings meet the mandatory retrofit requirements led by the Earthquake Safety Implementation Program and enforced by the Department of Building Inspection.

Li Nov 13 '17 at 8:57. As the name might suggest, here, we figuratively make a neural network duel with itself using two separate estimators for the value of a state and the value of a state-action pair. Implementing Remember function One of the specific things for DQN is that neural network used in the algorithm tends to forget the previous experiences as it overwrites them with new experiences.

For high dimensional state spaces, one of the most popular methods for approximating the action-value function is the DQN architecture mnih15humanlevel. In this paper, we present a new neural network architecture for model-free reinforcement learning. Each demon trains a separate general value function (GVF) based on its own policy and pseudo-reward function.

One for the state value function and one for the state-dependent action advantage function. Our team specializes in creative problem solving. Our HRA method builds upon the Horde architecture (Sutton et al., 11).

In Figure 12, it appeared that as the architecture lost nodes in each layer, its ability to efficiently learn a well performing control law significantly decreased. This work bridges the divide between high-dimensional sensory inputs and. This ensures that the algorithm is also aware of a direction of movement of the game elements.

The architecture of DRQN augments DQN’s fully connected layer with a LSTM. Which achieved state-of-the-art perfomance on the Arcade Learning Environment by combining multiple improvements to the DQN variant of Q learning. The first layer of DQN is the convolutional network, and the input to the network will be a raw frame of the game screen.

The difference in network between DQN and dueling DQN is shown in Figure 1:. Restaurants, retail stores, schools, museums, offices, custom homes. These are then fed into a RNN (we use an.

DQN Architecture Our DQNs have an initial input of 64 x 64 x swhere s is the size of the state history. Specifically, it learns with raw pixels from Atari 2600 games using convolutional networks, instead of low-dimensional feature vectors. Double Q-learning • Motivation:.

The easiest way is to first install python only CNTK (instructions).CNTK provides several demo examples of deep RL.We will modify the DeepQNeuralNetwork.py to work with AirSim. Human-level control through deep reinforcement learning - nature.pdf Created Date:. This is a really great approach to solve CartPole problem.

Weight experience according to surprise •High computational time complexity. The DQN architecture is minimally modified:. What I Did in This Internship - Implement the Chainer version DRQN, MQN, RMQN, FRMQN.

Vanilla DQN Experiments 6.1. One for the. For the agent, Figure 3 depicts the DQN’s architecture:.

The Horde architecture consists of a large number of ‘demons’ that learn in parallel via off-policy learning. Depicted in Figure 2, the architecture of DRQN takes a sin-gle 84 84 preprocessed image. After this initial layer, in both DQNs, we apply 3 convolutional layers consisting of 32 filters of size 3 x 3 and stride 1.

The figure below illustrates the architecture of DQN:. DQN with Differentiable Memory Architectures Okada Shintarou in SP team (Mentor:. Algorithm, network architecture and hyperparameters.

Introduction To Dueling Double Deep Q Network D3qn By Rokas Balsys Analytics Vidhya Medium

Best Paper At Icml Dueling Network Architectures For Deep Reinforcement Learning Hado Van Hasselt

Experiments With Dqn Kaustab Pal

Deep Reinforcement Learning With Modulated Hebbian Plus Q Network Architecture Deepai

Improved Robustness Of Reinforcement Learning Policies Upon Conversion To Spiking Neuronal Network Platforms Applied To Atari Games Paper Detail

Torch Dueling Deep Q Networks

Implementing Deep Reinforcement Learning With Pytorch Deep Q Learning

A Dynamic Adjusting Reward Function Method For Deep Reinforcement Learning With Adjustable Parameters

Openreview Net Pdf Id Byxhb3r5tx

Project Inria Fr Paiss Files 18 07 Munos Off Policy Drl Pdf

Hardmaru T Co Yp8asunhph

Training Architecture Of A Deep Q Network Dqn Agent Picture Adapted Download Scientific Diagram

Multi Pass Q Networks For Deep Reinforcement Learning With Parameterised Action Spaces

Genetic Deep Q Learning

Dueling Deep Q Networks Dueling Network Architectures For Deep By Chris Yoon Towards Data Science

Human Level Control Through Deep Reinforcement Learning Nature

Table I From Asynchronous Deep Q Learning For Breakout With Ram Inputs Semantic Scholar

Using Dueling Dqn To Play Flappy Bird Kk S Blog Fromkk

Q Learning Wikipedia

Q Tbn 3aand9gctyjenhhqro05br3k9fqk0yiptj48mmfyjrvkp4z4jj5aq9qkyb Usqp Cau

New Ideas In Reinforcement Learning Cerenaut Research

Deep Reinforcement Learning

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Arxiv Org Pdf 1903

转 Let S Make A Dqn 系列 Ahu Wangxiao 博客园

Deep Q Learning An Introduction To Deep Reinforcement Learning

Deep Reinforcement Learning

Conquering Openai Retro Contest 2 Demystifying Rainbow Baseline Mc Ai

Vanilla Deep Q Networks Deep Q Learning Explained By Chris Yoon Towards Data Science

Our Double Dueling Dqn Architecture Of I Agent I Where The I Agent I Choose The Best I Action I With The Advice Of Online Dueling Q Network And Estimate The I Q I I Value I By Target Dueling Q Network

Advanced Optimization Methods For Dqn Rlprojects

Hal Archives Ouvertes Fr Hal v2 Document

Reinforcement Learning Dqn Tutorial Pytorch Tutorials 1 6 0 Documentation

The Idea Behind Actor Critics And How c And A3c Improve Them Ai Summer

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

3dcnn Dqn Rnn A Deep Reinforcement Learning Framework For Semantic Parsing Of Large Scale 3d Point Clouds

Deep Q Networks A Summary Cyk S Notepad

Python Lessons

Building A Deep Q Network To Play Flappy Bird Pytorch 1 X Reinforcement Learning Cookbook Book

Beyond Dqn A3c A Survey In Advanced Reinforcement Learning

Google Ai Blog An Optimistic Perspective On Offline Reinforcement Learning

Deep Reinforcement Learning

Dqn Architecture With The Loss Function Described By L B E R G Download Scientific Diagram

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

An Illustration Of The Architecture Of The Proposed Multi User Dqn Used Download Scientific Diagram

Deep Q Network How To Exclude Ops At Auto Differential Of Tensorflow Stack Overflow

Beyond Dqn A3c A Survey In Advanced Reinforcement Learning By Joyce Xu Towards Data Science

Python Lessons

Ieeexplore Ieee Org Iel7 Pdf

Dueling Q Networks In Tensorflow 2 Adventures In Machine Learning

Deep Q Learning With Prioritized Sampling Springerlink

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

How Does Implicit Quantile Regression Network Iqn Differ From Qr Dqn Data Science Stack Exchange

The Two Different Hierarchical Network Architectures Of Dqn For Download Scientific Diagram

Understanding Dqn Her Deep Robotics

Lec3 Dqn

Rllib Algorithms Ray V1 1 0 Dev0

Multiagent Cooperation And Competition With Deep Reinforcement Learning

Web Stanford Edu Class Cs234 Slides Lnotes6 Pdf

Figure 4 From Hierarchical Deep Reinforcement Learning Integrating Temporal Abstraction And Intrinsic Motivation Semantic Scholar

Let S Build A Dqn Neural Network Architectures Tom Roth

Dqn And Drqn In Partially Observable Gridworlds Kamal

Arxiv Org Pdf 1707

Doom Deep Q Networks

Relmogen Leveraging Motion Generation In Reinforcement Learning For Mobile Manipulation

Deep Reinforcement Learning Using Capsules In Advanced Game Environments Arxiv Vanity

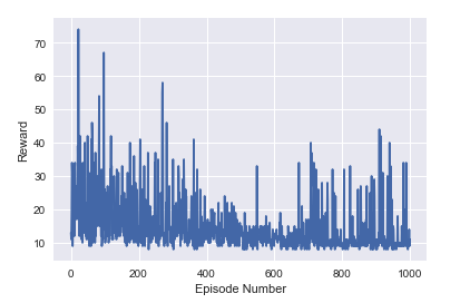

Frontiers Constrained Deep Q Learning Gradually Approaching Ordinary Q Learning Frontiers In Neurorobotics

Http Www Cs Umd Edu Furongh Uploads Rl Lec6 Pdf

Asynchronous Advantage Actor Critic Algorithm Python Reinforcement Learning Projects Book

Ape X Dqn Explained Papers With Code

The Dqn Architecture Applicable To The Sdn Based Wlan Download Scientific Diagram

Dqn Architecture With Map Centering With The Device Map Encoded In Download Scientific Diagram

Deep Reinforcement Learning For Multi Agent Systems A Review Of Challenges Solutions And Applications Deepai

Q Tbn 3aand9gct9nay65nz9jnygtqujzpt3wzpfmhjxi9hohz7mq0n28trmcynw Usqp Cau

Deep Q Learning An Introduction To Deep Reinforcement Learning

Classifying Options For Deep Reinforcement Learning Arxiv Vanity

Frontiers Constrained Deep Q Learning Gradually Approaching Ordinary Q Learning Frontiers In Neurorobotics

Deep Reinforcement Learning From Scratch

Off Policy Actor Critic Algorithms Jon Michaux

Deep Reinforcement Learning For Online Advertising In Recommender Systems

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Advanced Dqns Playing Pac Man With Deep Reinforcement Learning By Jake Grigsby Towards Data Science

V D D3qn The Variant Of Double Deep Q Learning Network With Dueling Architecture Semantic Scholar

Simple Reinforcement Learning With Tensorflow Part 4 Deep Q Networks And Beyond By Arthur Juliani Medium

David Silver Google Deepmind Deep Reinforcement Learning Synced

Rainbow Reinforcement Learning Coach 0 12 0 Documentation

Dueling Dqn Reinforcement Learning Coach 0 12 0 Documentation

Vulnerability Of Deep Reinforcement Learning To Policy Induction Attacks Arxiv Vanity

Deep Q Networks 知乎

The Dqn Architecture Applicable To The Sdn Based Wlan Download Scientific Diagram

Dqn Architecture For End To End Learning Of Atari 2600 Game Plays Download Scientific Diagram

Rllib Algorithms Ray V1 1 0 Dev0

Google Ai Blog An Optimistic Perspective On Offline Reinforcement Learning

Deep Q Network Dqn Double Dqn And Dueling Dqn Springerlink

Reinforcement Learning Via Atari Games Part 1 Chan Y Park

Q Tbn 3aand9gcq0hnlaqiyn34oyeshchpt6yitrgknpmjavifzui9uhgtnuu7sh Usqp Cau

Figure 1 From Deep Reinforcement Learning With A Natural Language Action Space Semantic Scholar

The System Architecture Used In Dqn Download Scientific Diagram