Dqn Atari Paper

Web Stanford Edu Class Psych9 Readings Mnihetalhassibis15naturecontroldeeprl Pdf

Deep Reinforcement Learning Doesn T Work Yet

That S So Deep Dude

What Is The Difference Between Episode And Epoch In Deep Q Learning Cross Validated

Demystifying Deep Reinforcement Learning Computational Neuroscience Lab

Rainbow Dqn Explained Papers With Code

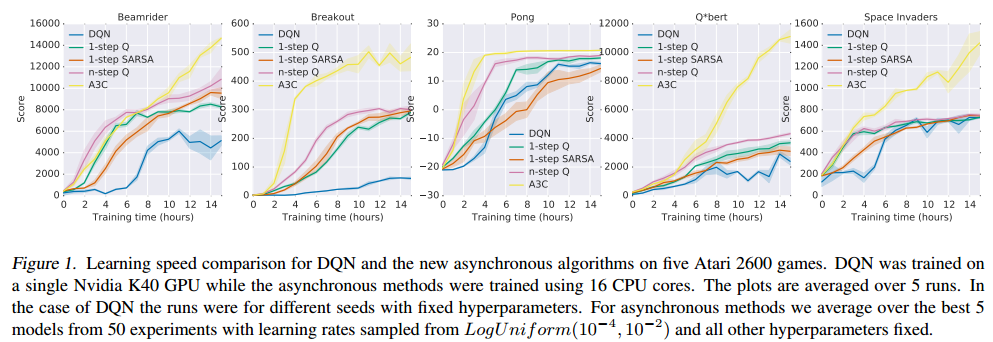

Google’s DeepMind published its famous paper Playing Atari with Deep Reinforcement Learning, in which they introduced a new algorithm called Deep Q Network (DQN for short) in 13.

Dqn atari paper. If no match. Breakout-Deep-Q-Network 🏃 Reinforcement Learning tensorflow implementation of Deep Q Network (DQN), Dueling DQN and Double DQN performed on Atari Breakout Game Installation. Combining Improvements in Deep Reinforcement Learning.

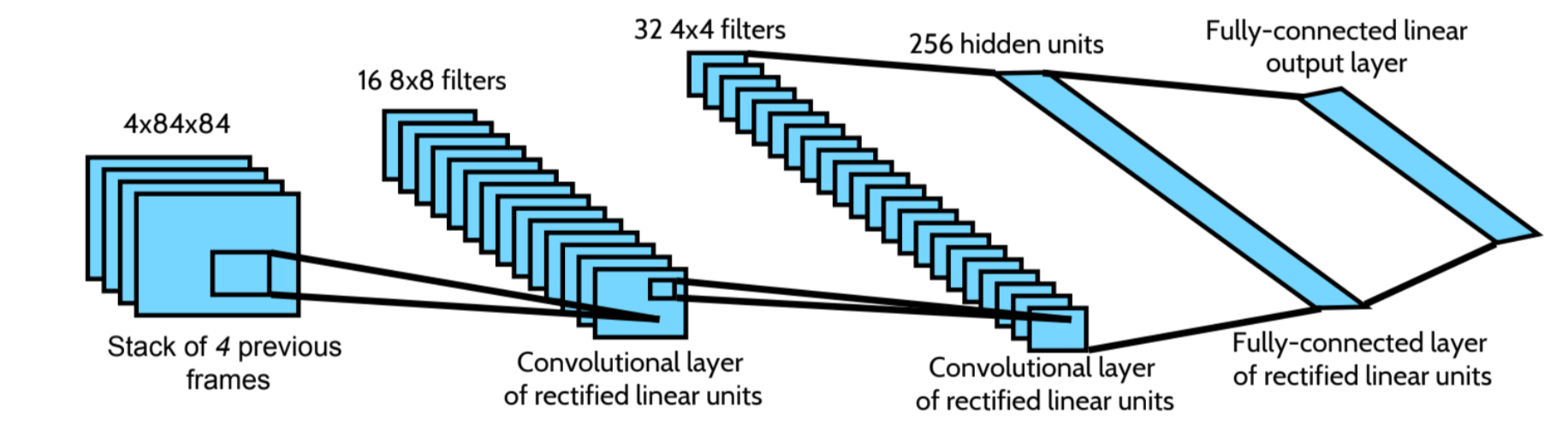

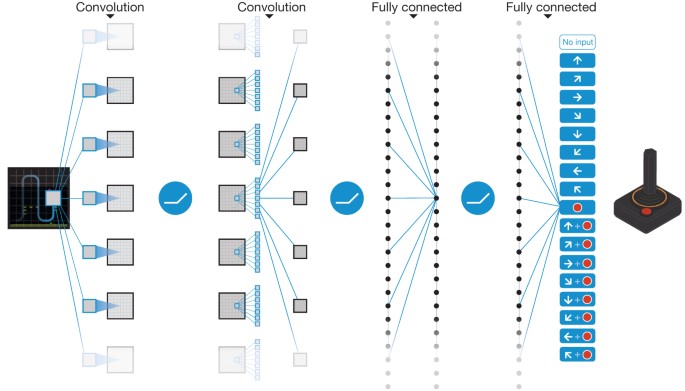

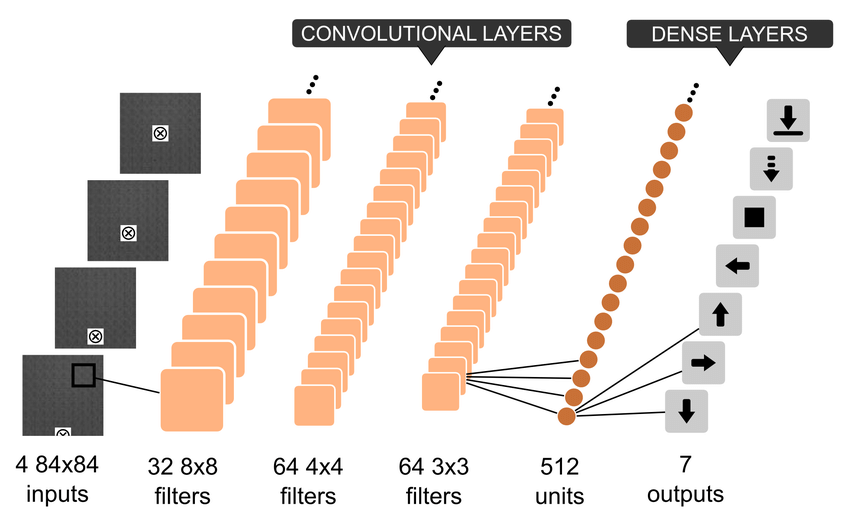

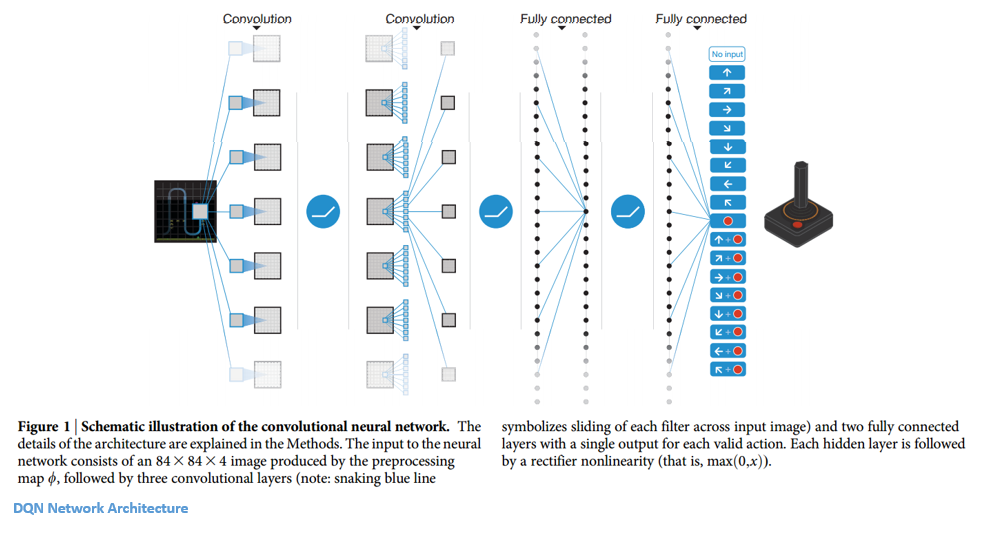

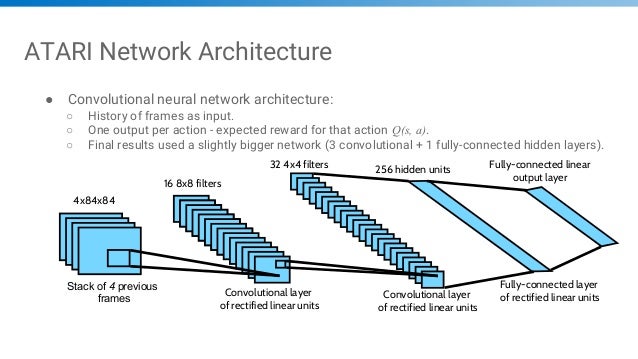

We apply our method to seven Atari 2600 games from the Arcade. Detail implementation is as follows:. The model is a convolutional neural network, trained with a variant of Q-learning, whose input is raw pixels and whose output is a value function estimating future rewards.

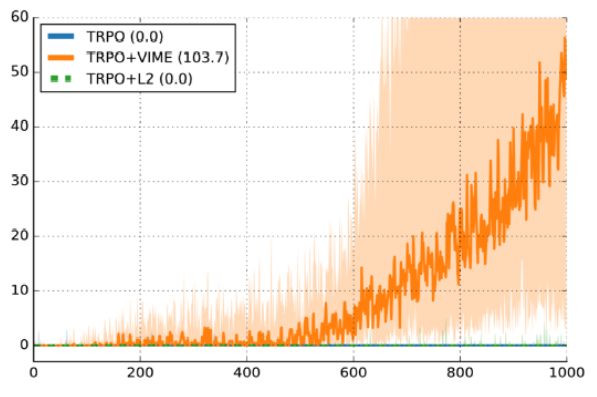

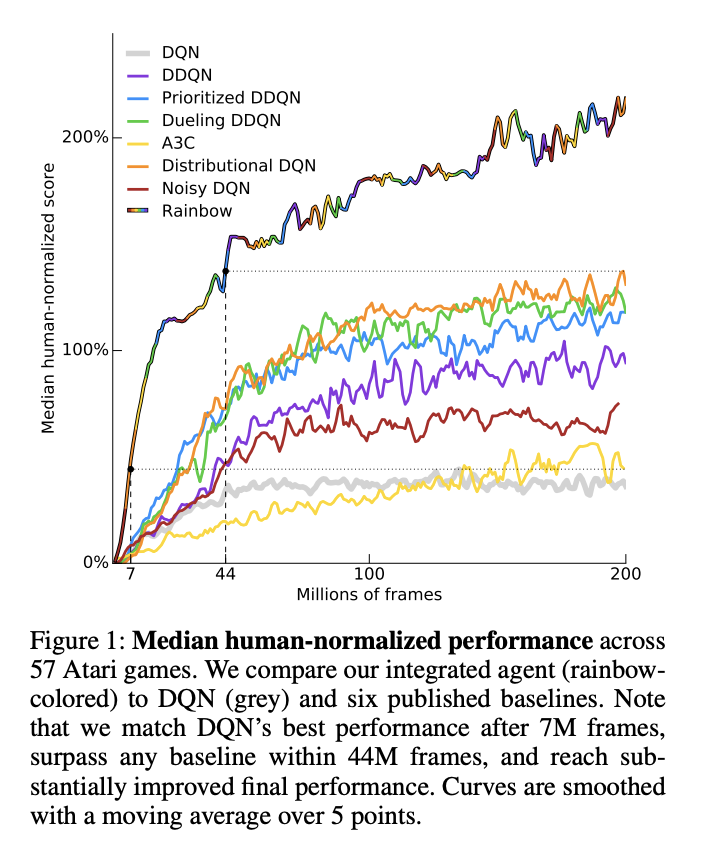

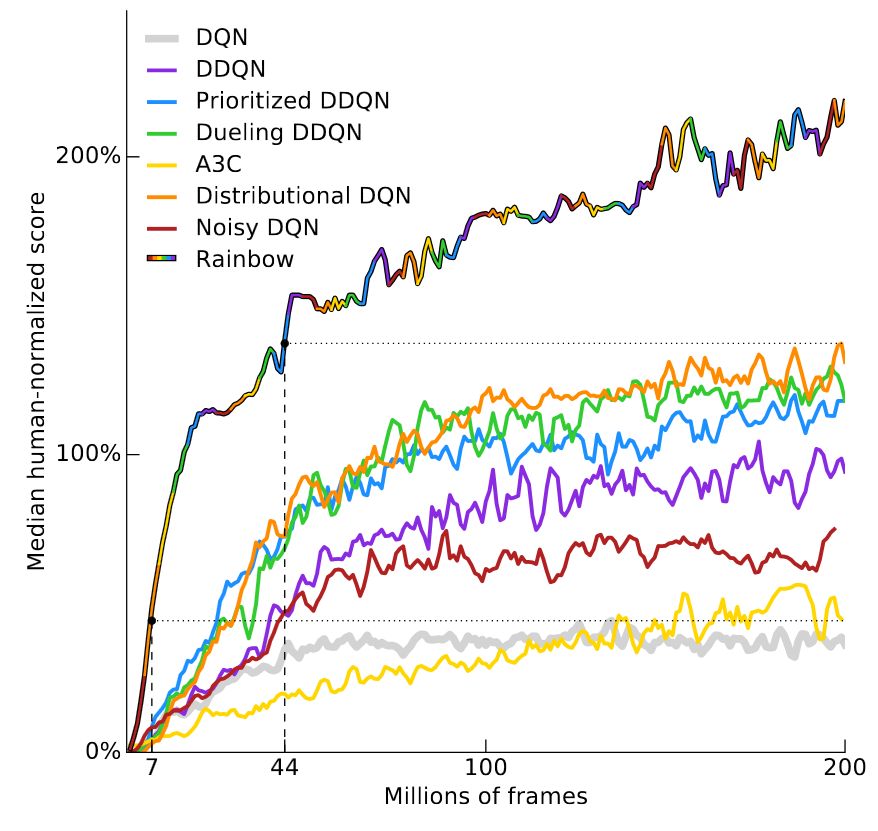

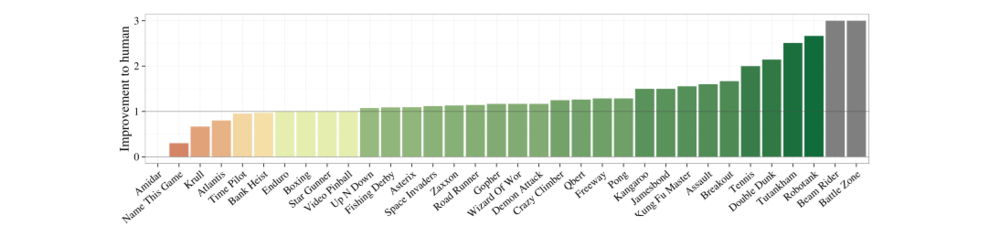

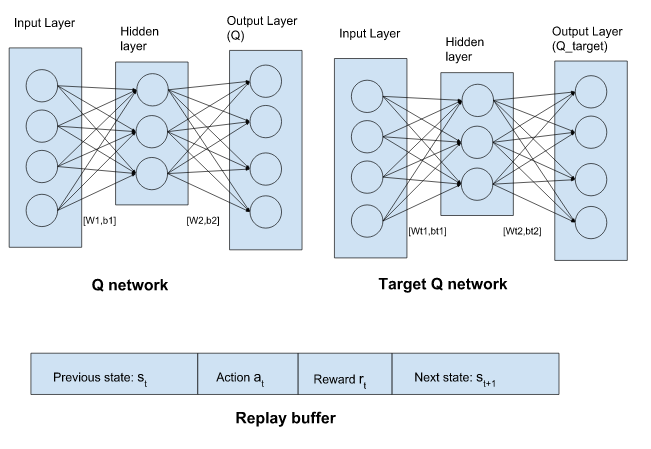

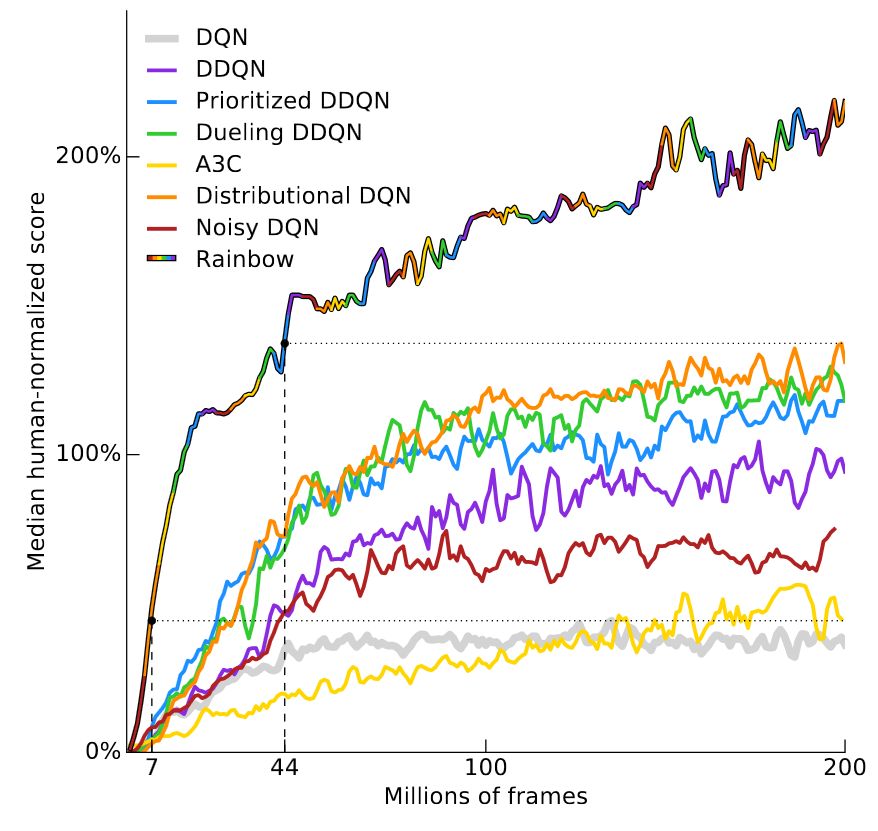

The paper describes Simulated Policy Learning (SimPLe), a complete model-based deep RL algorithm based on video prediction models and present a comparison of several model architectures, including a. This paper does an ablation study over several incremental advances made to the original DQN architecture, demonstrating that a combination of all advances gives the best performance. In the paper, the target network is held static for C/ 10,000 parameter.

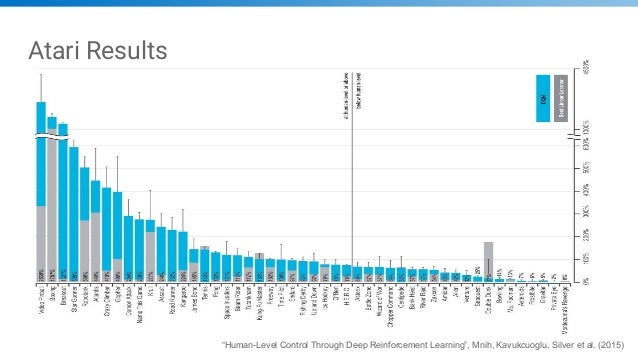

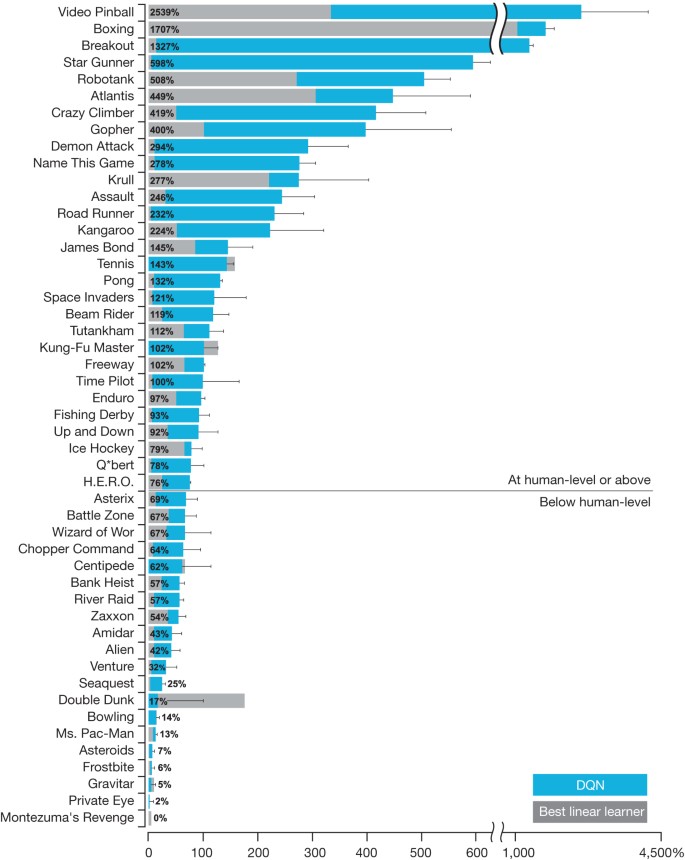

Rainbow which combines 6 separate DQN improvements each contributing to the final performance. Developed a single Deep Q-Network (DQN) that is able to play multiple Atari games, in many cases surpassing hu-man expert players. DQN was the first algorithm to achieve human-level control in the ALE.

Finally, we will understand and implement DQN presented in Deepmind’s paper “ Playing Atari with Deep Reinforcement Learning (Mnih et al. #ai #dqn #deepmind After the initial success of deep neural networks, especially convolutional neural networks on supervised image processing tasks, this paper was the first to demonstrate their. It speeds up roughly by 4 time the training without loosing much information.

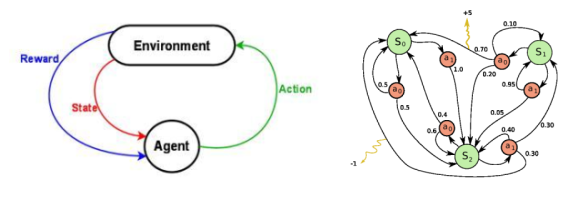

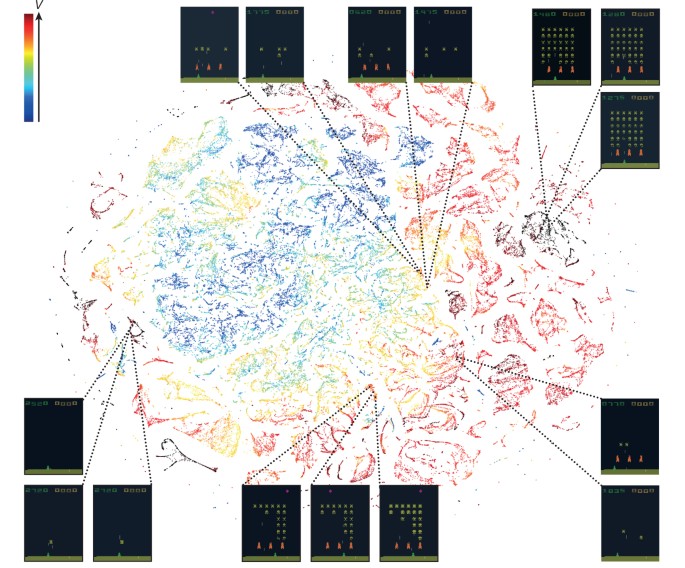

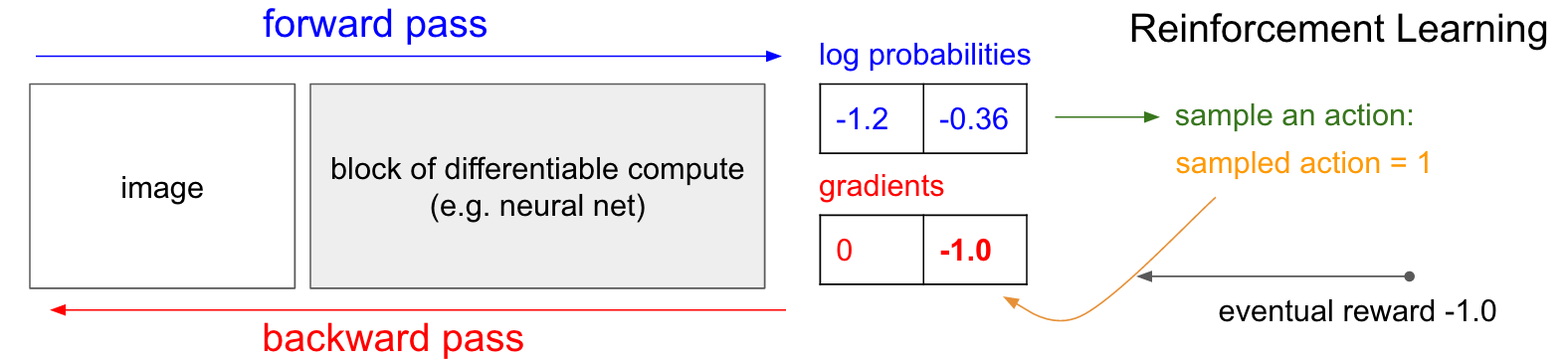

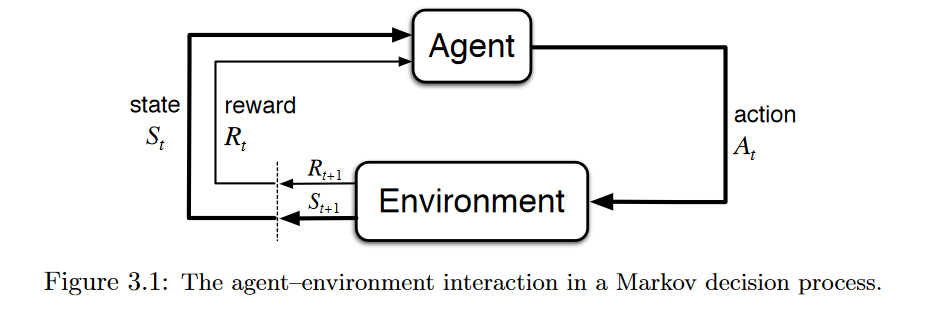

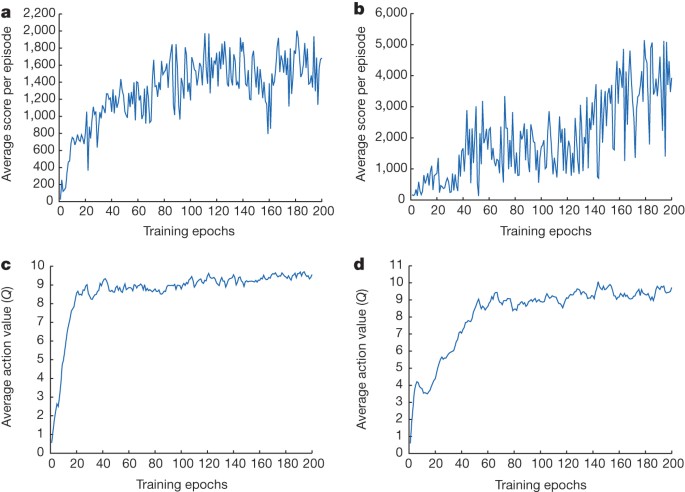

The theory of reinforcement learning provides a normative account deeply rooted in psychological and neuroscientific perspectives on animal behaviour, of how agents may optimize their control of an environment. We now present some more insight to how bootstrapped DQN drives deep exploration in Atari. Received 10 July 14;.

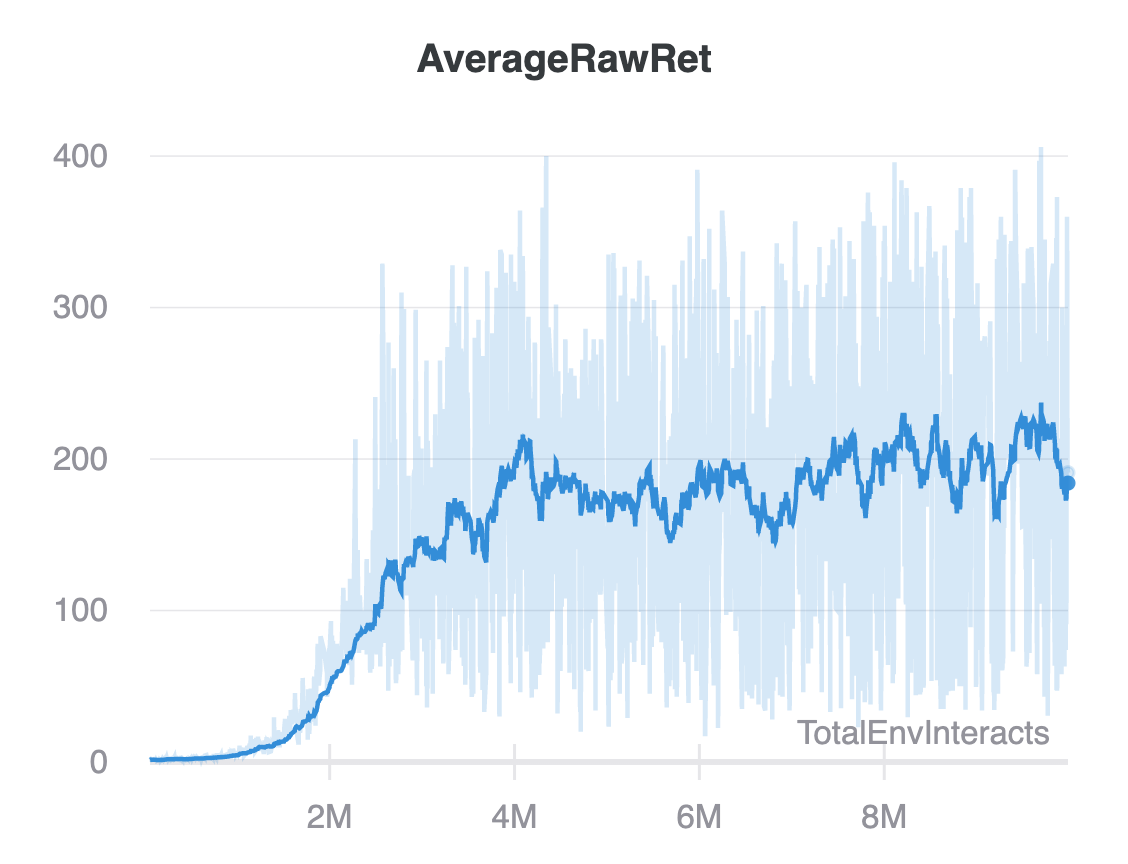

DQN noop Score 16.0. In this post, we will look into training a Deep Q-Network (DQN) agent (Mnih et al., 15) for Atari 2600 games using the Google reinforcement learning library Dopamine.While many RL libraries exists, this library is specifically designed with four essential features in mind:. It demonstrated how an AI agent can learn to play games by just observing the screen without any prior information about those games.

I read, in the pre-processing and model architecture section (section 4.1), that for each state that is input to the CNN, that this state is actually stacked frames of the game, so basically what has to be done, to my understanding, is that for each time step you stack 4 frames (current frame and 3 previous. DQN is introduced in 2 papers, Playing Atari with Deep Reinforcement Learning on NIPS in 13 and Human-level control through deep reinforcement learning on Nature in 15. Type the following command to install OpenAI Gym Atari environment.

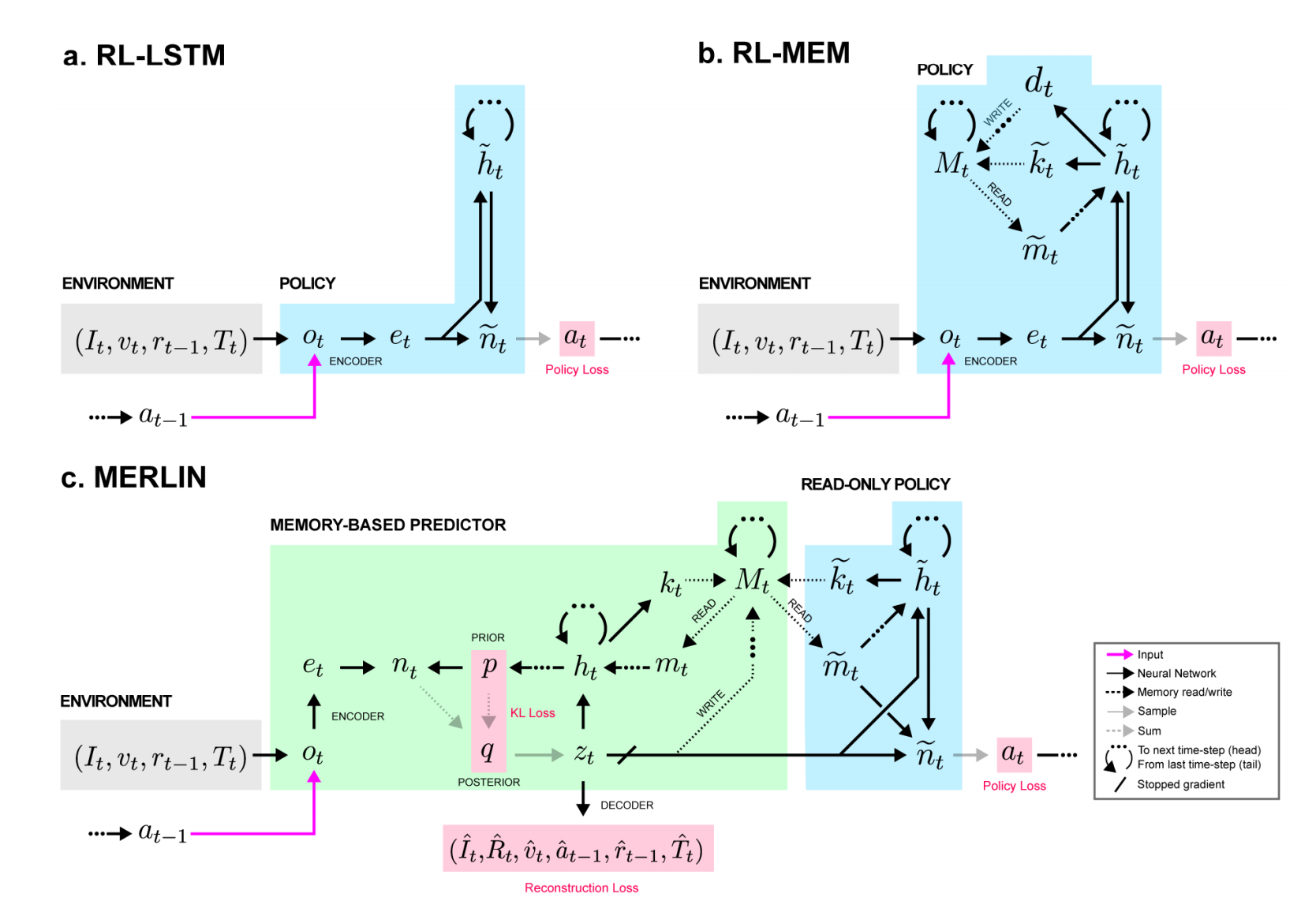

In this paper, we answer all these questions affirmatively. Still, many of these applications use conventional architectures, such as convolutional networks, LSTMs, or auto-encoders. The implementation follows from the paper - Playing Atari with Deep Reinforcement Learning and Human-level control through deep reinforcement learning.

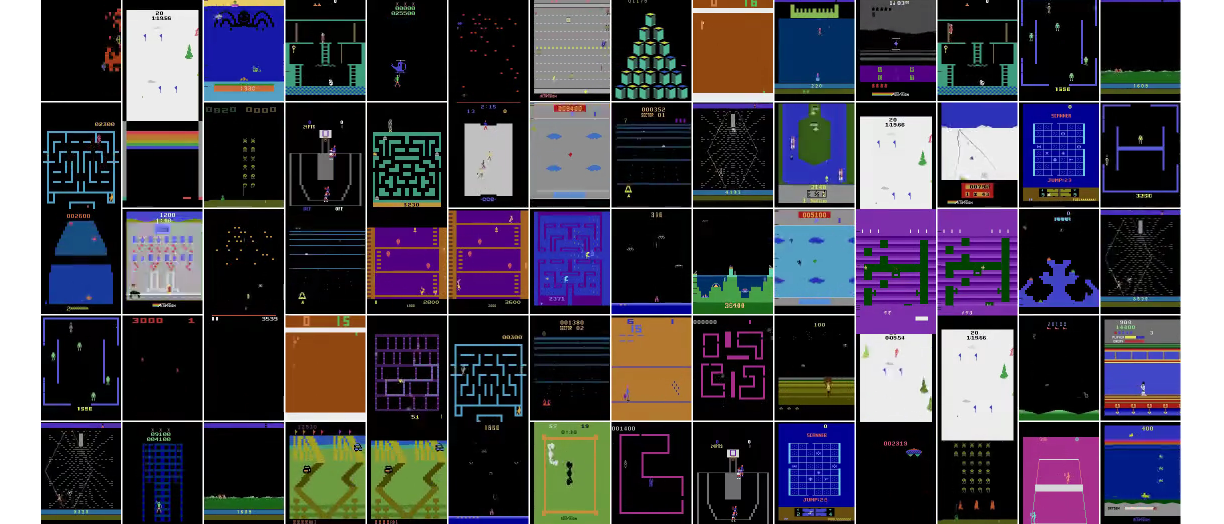

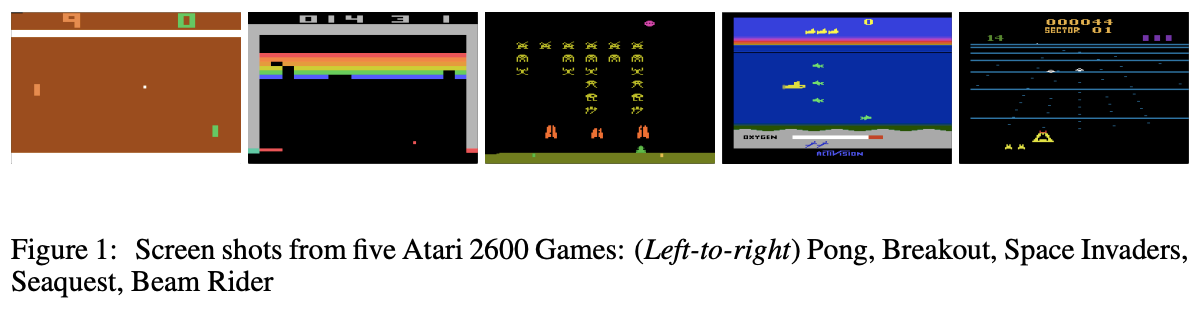

Toy example and Atari 2600 games. From 1977 to 1990, Atari released 57 games for its Atari 2600, including classic titles like. The implementation of the DQN algorithm I used has a few changes from the DQN Atari paper:.

For the following 3 images, the last action is repeated. APA Abhi Savaliya, Chirag Ahuja, Chirayu Shah, Sagar Parikh (19). Number of steps before bootstrapping - 1, 3, 10.

We present the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning. I recommend watching the whole series, which. IEEE Abhi Savaliya, Chirag Ahuja, Chirayu Shah, Sagar Parikh.

The method of learning Atari games, as presented above and even with neural networks employed to approximate the Q-values, would not yield good results. Our dueling network represents two separate estimators:. We will briefly go through general policy iteration and temporal difference methods.

To these sections appear only in the online paper. In their groundbreaking paper “Playing Atari with Deep Reinforcement learning” (Mnih et al.,13), Mnih et al. I read the DQN paper titled:.

Bellemare et al.’s paper provides evidence that the distributional perspective leads to improved performance and more stable reinforcement learning. The challenging domain of classic Atari 2600 games12. I strongly recommend that you skim through the paper before reading this tutorial, and then read it more deeply when you are done.

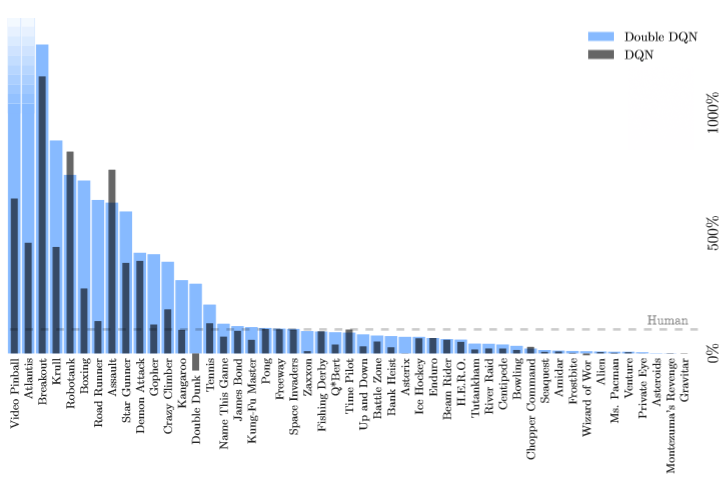

We’ll implement DQN, and likely all future work, in PyTorch, making use of automatic. From looking at Figure 3 (which uses Double DQN, not DQN), perhaps Robotank, Defender, Tutankham, Boxing, Bowling, BankHeist, Centipede, and Yar’s Revenge?. The problem is studied in two contexts:.

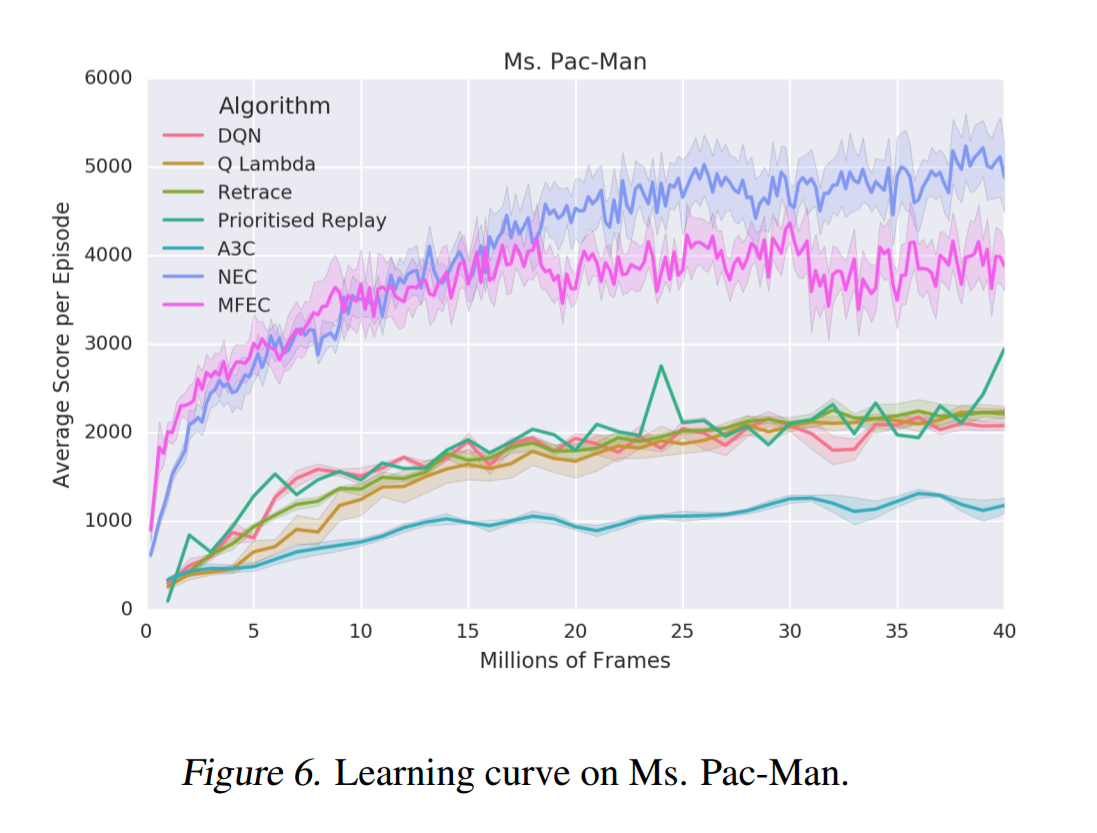

It speeds up rougly by 4 time the training without loosing much information. Figure 6 shows a significant percentage improvement of the C51 agent against the Double DQN agent per Atari game. $ pip3 install opencv-python gym gymatari.

To make it work, the original DQN paper’s authors and we in our experiments, employed a few improvements to the basic algorithm. The DQN method proposed in this paper can successfully solve problems with high-dimensional observation spaces but cannot handle high-dimensional action spaces. We apply our approach to a range of Atari 2600 games implemented in The Arcade Learning Envi-ronment (ALE) 3.

Google DeepMind created an artificial intelligence program using deep reinforcement learning that plays Atari games and improves itself to a superhuman level. They must derive efficient. Atari 2600 games run 60 MHz and each frame is 210×160 with 128-bit pallette.

We will then understand Q learning as a general policy iteration. DeepMind released a paper in scientific journal Nature this week detailing its deep Q-network (DQN) algorithm's ability to play 49 computer games originally designed for the Atari 2600 - including. For the following 3 images, the last action is repeated.

The full DQN model — learning to play Atari games from visual information — will be covered in a later post. Atari 2600 is a challenging RL testbed that presents agents with a high dimen-sional visual input (210 160 RGB video at 60Hz) and a diverse and interesting set of tasks that were designed to be difficult for humans players. The recently introduced DQN (Deep Q-Network) algorithm, which is a combination of Q-learning with a deep neural network, has achieved good performance on several games in the Atari 2600 domain.

#6 best model for Atari Games on Atari 2600 Beam Rider (Score metric) Browse State-of-the-Art. Accepted 16 January 15. General Policy Iteration (GPI).

Reward is change in score for that step (This is a CNN) Network architecture and hyperparameters fixed across all games. The authors demonstrate the impact of these two ideas on a subset of the Atari 2600 video games:. Y Q t.

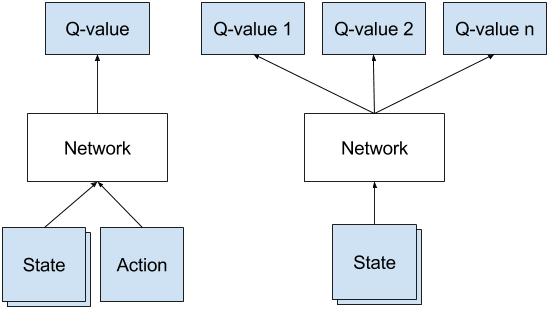

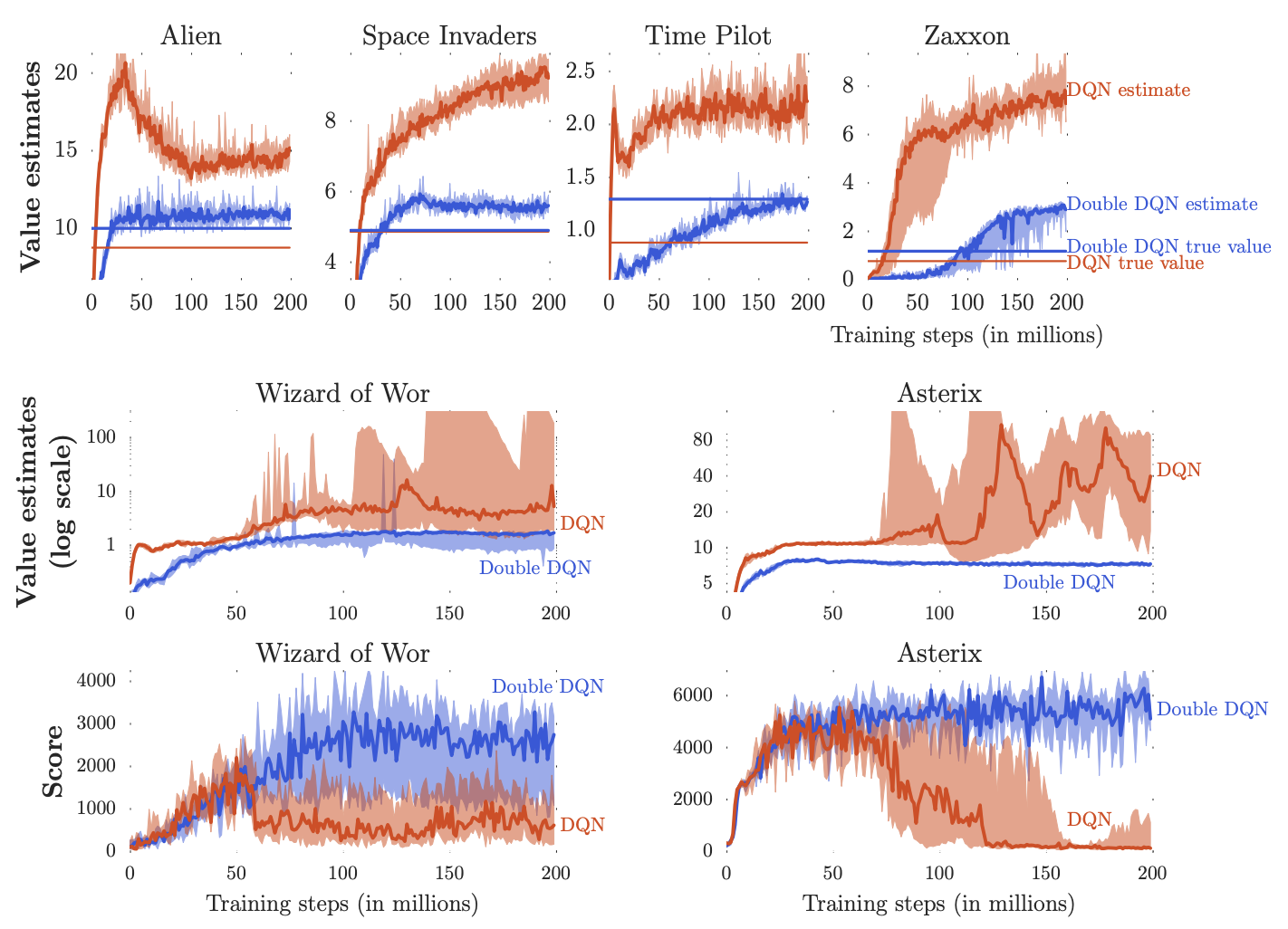

Output is Q(s,a) for 18 joystick/button positions;. In particular, we first show that the recent DQN algorithm, which combines Q-learning with a deep neural network, suffers from substantial overestimations in some games in the Atari 2600 domain. When testing DDQN on 49 Atari games, it achieved about twice the average score of DQN with the same hyperparameters.

Playing Atari with Deep Reinforcement Learning again. To reduce the computational and memory needs of the DQN algorithm, each frame is downsampled to 84×84 and converted to grayscale. The model is a convolutional neural network, trained with a variant of Q-learning, whose input is raw pixels and whose output is a value function estimating future rewards.

The ALE owes some of its success to a Google DeepMind algorithm called Deep Q-Networks (DQN), which recently drew world-wide attention to the learning environment and to reinforcement learning (RL) in general. In this post, adapted from our paper, “State of the Art Control of Atari Games Using Shallow Reinforcement. In recent years there have been many successes of using deep representations in reinforcement learning.

We present the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning. In each game, although each head Q 1,. In the original atari paper, only 1 in 4 frame is actually processed.

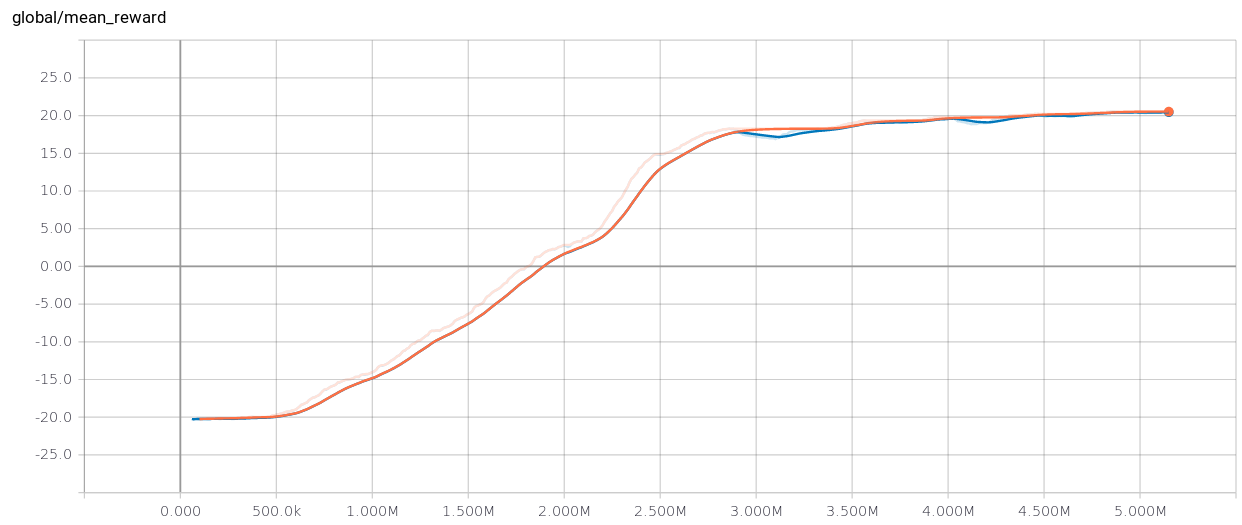

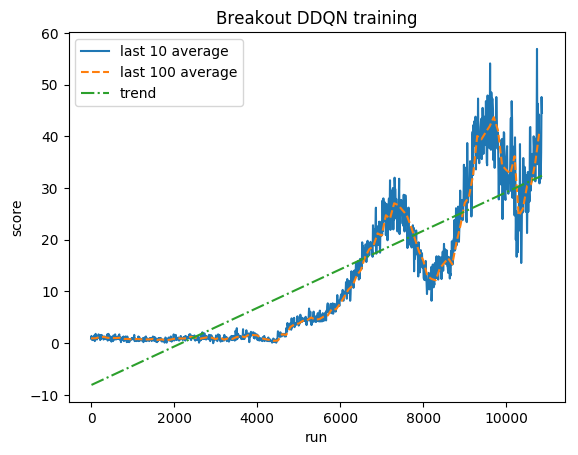

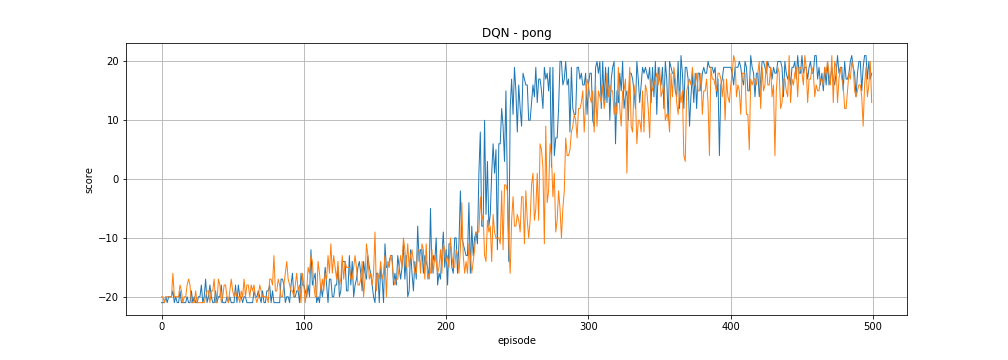

The DQN I trained using the methods in this post. 🏆 SOTA for Atari Games on Atari 2600 Pong (Score metric) 🏆 SOTA for Atari Games on Atari 2600 Pong (Score metric). DQN-Atari Deep Q-network implementation for Pong-vo.

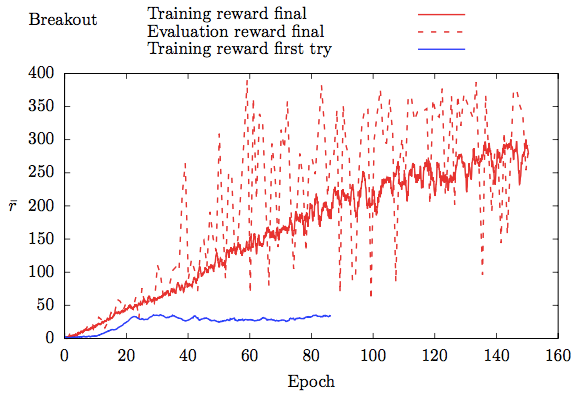

It exceeds human-level performance on over 40 of the 57 Atari games attempted. Similarity, we will use another deep learning toolkit Tensorflow to develop the DQN and Double DQN and to play the another game Breakout (Atari 3600). The paper makes several hypotheses about how different components may interact in the triad and evaluate these hypotheses by training DQN with different hyperparameters:.

Without any of the incremental DQN improvements, with final performance still coming close to that of Rainbow. The PER paper shows that PER+(D)DQN it outperforms uniform sampling on 41 out of 49 Atari 2600 games, though which of the exact 8 games it did not improve on is unclear. We apply our method to seven Atari 2600 games from the Arcade.

IQN (Implicit Quantile Networks) is the state of the art ‘pure’ q-learning algorithm, i.e. Paper where method was first introduced:. Double DQN van15deep modifies the target y Q t and helps further 1 1 1 In this paper we use the DDQN update for all DQN variants unless explicitly stated.:.

Input state s is stack of raw pixels from last 4 frames;. Multi-step DQN with experience-replay DQN is one of the extensions explored in the paper Rainbow:. Table 1 lists the results obtained by ChainerRL ’s reproducibility scripts for DQN, IQN, Rainbow, and A3C on the Atari benchmark, with comparisons against a published result.

Updated with the latest ranking of this paper. Human-level control through deep reinforcement learning - nature.pdf Created Date:. Playing Atari with Deep Reinforcement Learning Abstract.

We recently published a paper on deep reinforcement learning with Double Q-learning, demonstrating that Q-learning learns overoptimistic action values when combined with deep neural networks, even on deterministic environments such as Atari video games, and that this can be remedied by using a variant of Double Q-learning. Interestingly, there were only few papers about DRN between 13 and 15. Here, we will use the OpenAI gym toolkit to construct out environment.

Implementation of the DQN Algorithm. Just by “watching” the screen and making movements, these algorithms were able to acheive the impressive accomplishment of surpassing human performance for many games. The model is a convolutional neural network, trained with a variant of Q-learning, whose input is raw pixels and whose output is a.

As introduced in Chapter 2, one typical example of DRL is the Deep Q Network (DQN), which, after learning to play Atari 2600 games over 38 days, is able to match human performance when playing the. Table 2 depicts the evaluation protocol used for each algorithm, with a citation of the source paper whose results we compare against. In this section, we discuss some of them.

Learn (total_timesteps = ) model. Indeed, atari game are not supposed to be played frame perfect and for most action it makes more sense to keep them for at least 4 frames. This paper explores how video prediction models can similarly enable agents to solve Atari games with fewer interactions than model-free methods.

In the original atari paper, only 1 in 4 frames is actually processed. Save ("deepq_breakout") del model # remove to. This improved stability directly translates to ability to learn much complicated tasks.

One for the. The Deep Reinforcement Learning with Double Q-learning 1 paper reports that although Double DQN (DDQN) does not always improve performance, it substantially benefits the stability of learning. I will be quoting it throughout.

To use reinforcement learning successfully in situations approaching real-world complexity, however, agents are confronted with a difficult task:. The reasonable way of implementing the downsampling is to use a sequence of TF operations, which then can be run on GPU. To evaluate our DQN agent, we took advantage of the Atari 2600 platform, whichoffersa diverse arrayoftasks(n549)designed tobe.

Further, I recommend you really do try to implement your DQN from what I am writing here. DQN Best Score. In this paper, we present a new neural network architecture for model-free reinforcement learning.

It reaches a score of 251. Starting by learning to play CartPole with a DQN is a good way to test your algorithm for bugs, here we’ll push it to do more by following the DeepMind paper to play Atari from the pixels on the screen. We present the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning.

Improving generalization in reinforcement learning on Atari 2600 games. Earlier this week, the team published their results in a paper posted to the preprint server ArXiv. The model consists of convolutional layers followed by fully connected layers.

Indeed, atari game are not supposed to be played frame perfect and for most action it makes more sense to keep them for at least 4 frames. Update to the target network:.

Atari Dqn Reinforcement Learning Experiments Youtube

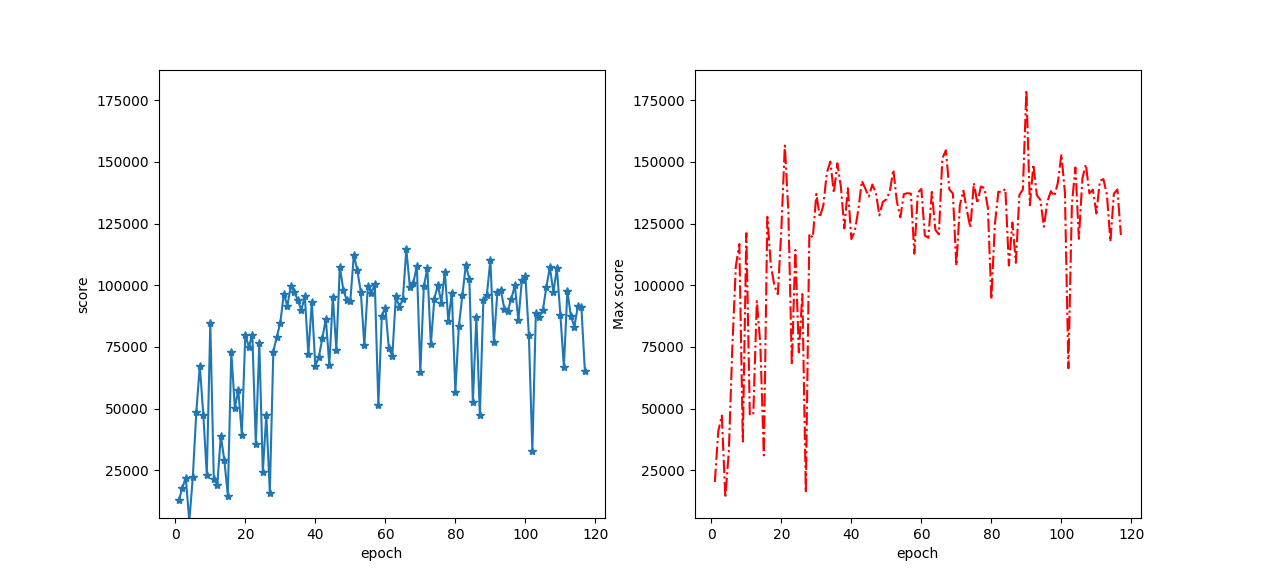

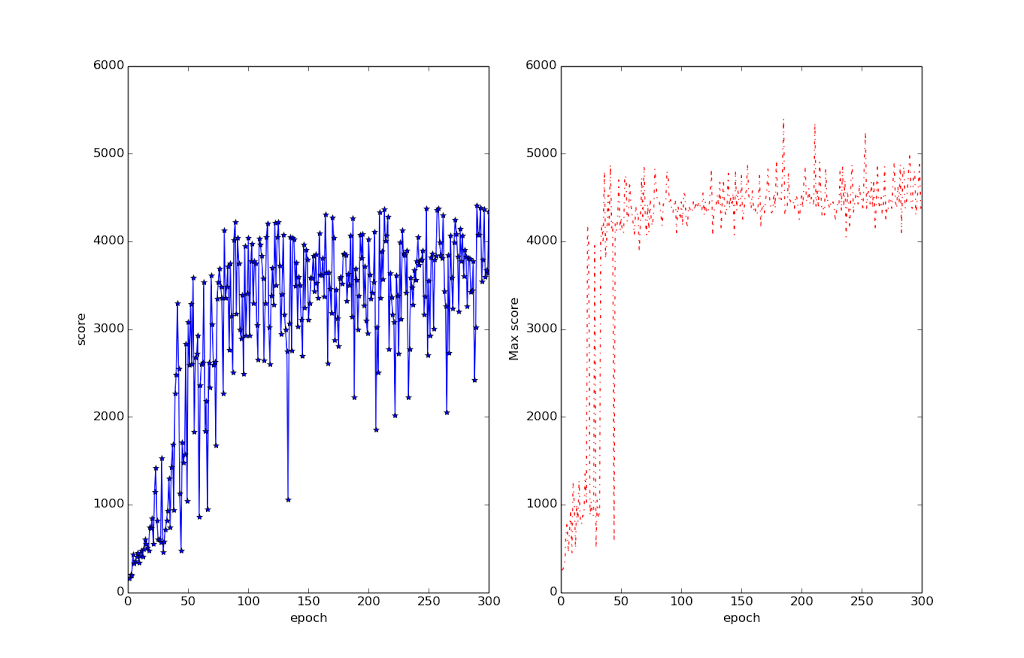

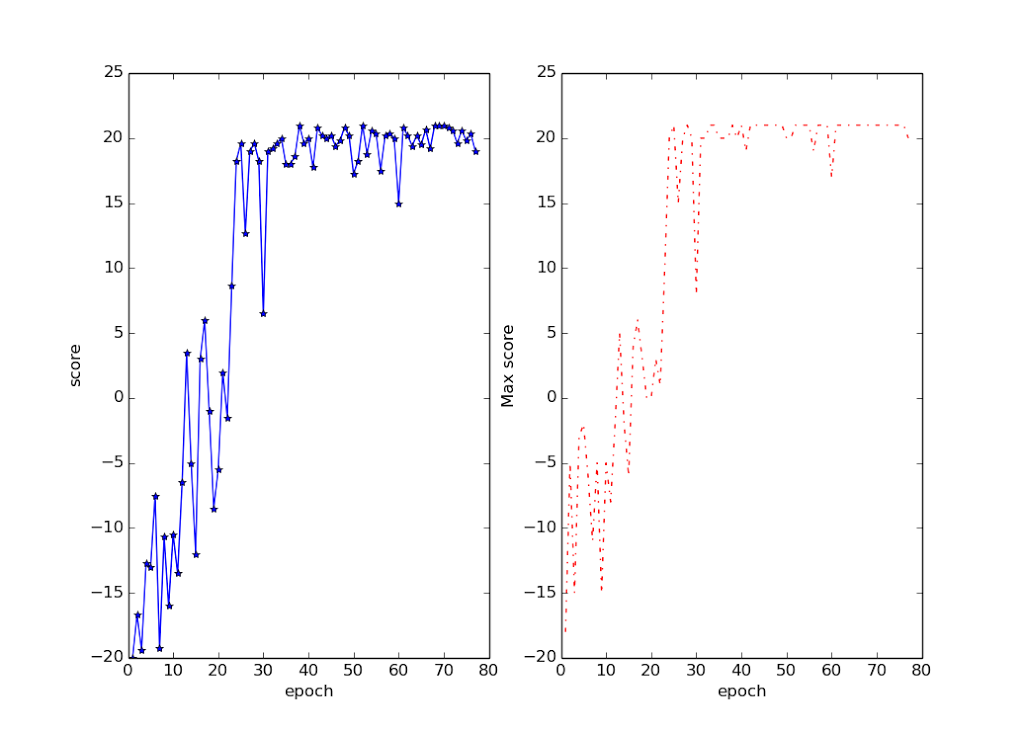

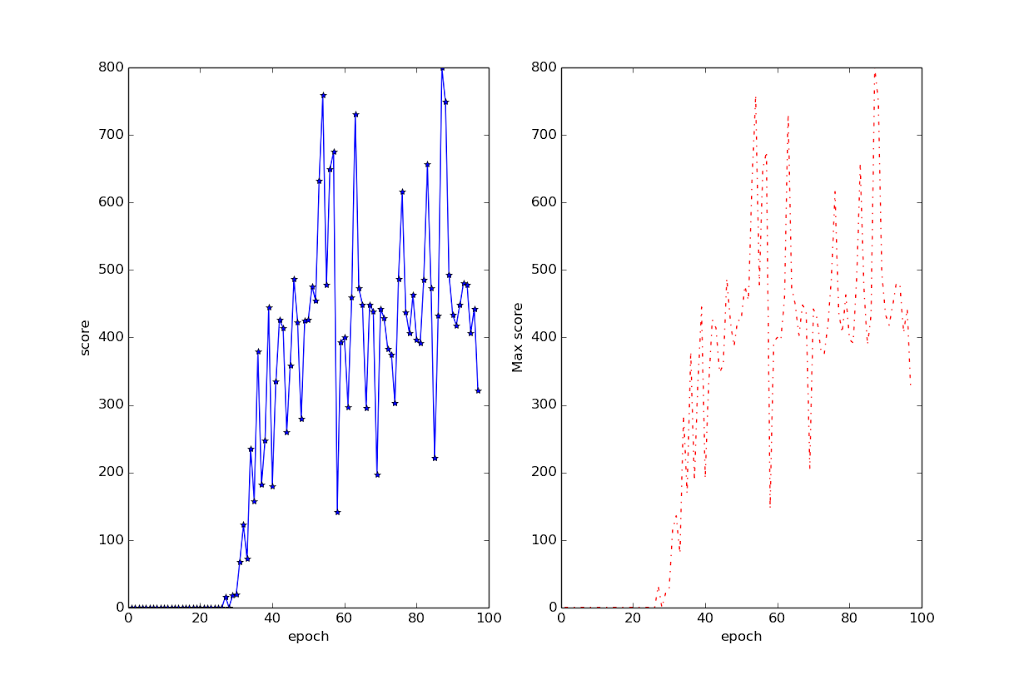

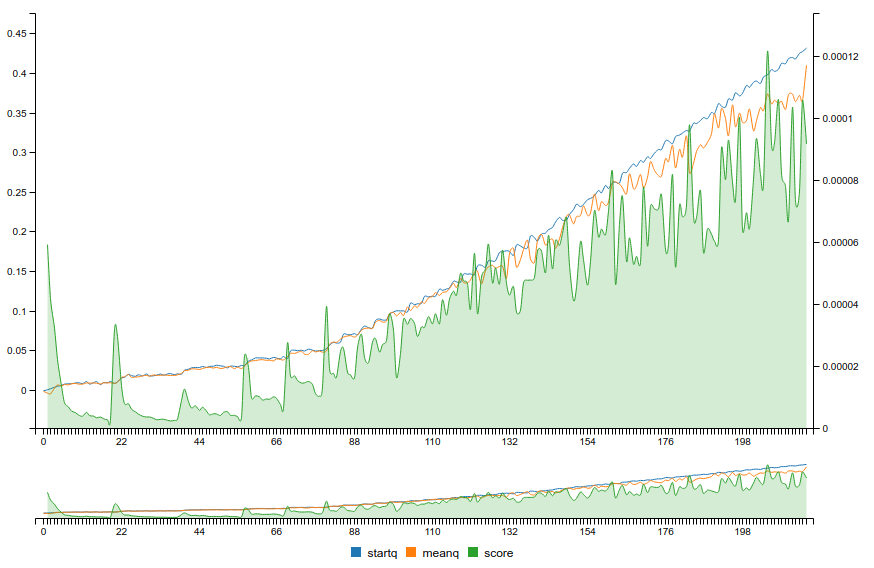

Deepmind S Atari Paper Replicated That S So Deep Dude

Deepmind S Atari Paper Replicated That S So Deep Dude

Q Tbn 3aand9gctg Vyl6zg6vlrc Sdwqx3uixkbkqjsjhc Jry87lvacostw4el Usqp Cau

Rem Explained Papers With Code

How To Match Deepmind S Deep Q Learning Score In Breakout By Fabio M Graetz Towards Data Science

Beat Atari With Deep Reinforcement Learning Part 2 Dqn Improvements By Adrien Lucas Ecoffet Becoming Human Artificial Intelligence Magazine

Openai Baselines Dqn

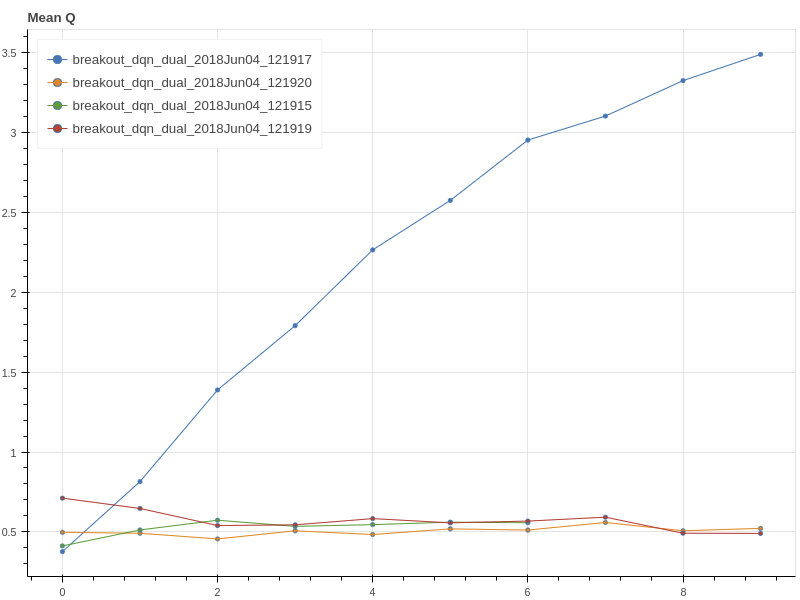

Dqn And Averaged Dqn Performance In The Atari Game Of Breakout The Download Scientific Diagram

Q Tbn 3aand9gctuvlldnunwqvd0dybme1l6s6us Qccwozxsq Usqp Cau

Asynchronous Methods For Deep Reinforcement Learning The Morning Paper

Www Cs Toronto Edu Vmnih Docs Dqn Pdf

Deep Q Learning In Atari Game Failed To Improve Cross Validated

Q Tbn 3aand9gcshbvljbgidgixewsl4raqpzcruid5jdfoiw Sqymfmdzah0qp Usqp Cau

Lec3 Dqn

Human Level Control Through Deep Reinforcement Learning Nature

Neural Episodic Control

How To Match Deepmind S Deep Q Learning Score In Breakout By Fabio M Graetz Towards Data Science

Www Cs Toronto Edu Vmnih Docs Dqn Pdf

Www Cs Toronto Edu Vmnih Docs Dqn Pdf

Deepmind S Gaming Streak The Rise Of Ai Dominance

Deep Reinforcement Learning Deepmind

Week 5 Deep Q Networks And Rainbow Algorithm Holly Grimm

Q Tbn 3aand9gctqpdybrzhenob1jp4kpkhwb5exwzbzafk2xq Usqp Cau

Deep Learning Research Review Reinforcement Learning

Human Level Control Through Deep Reinforcement Learning Nature

Q Learning And Dqn Efavdb

Performing Deep Recurrent Double Q Learning For Atari Games Deepai

Dqn Architecture For End To End Learning Of Atari 2600 Game Plays Download Scientific Diagram

Gyu3kcoo1pdcmm

Best Paper At Icml Dueling Network Architectures For Deep Reinforcement Learning Hado Van Hasselt

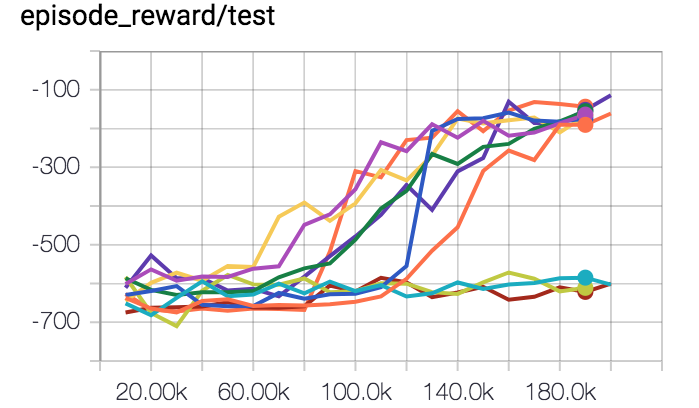

Deep Exploration Via Bootstrapped Dqn Statwiki

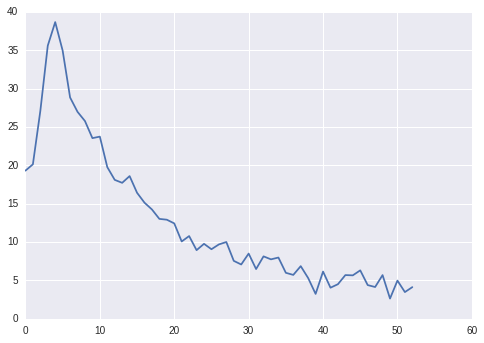

Dqn Solution Results Peak At 35 Reward Issue 30 Dennybritz Reinforcement Learning Github

Www Cs Toronto Edu Vmnih Docs Dqn Pdf

转载 Welcome To Deep Reinforcement Learning Dqn 知乎

David Silver Google Deepmind Deep Reinforcement Learning Synced

Web Stanford Edu Class Psych9 Readings Mnihetalhassibis15naturecontroldeeprl Pdf

Papers Nips Cc Paper 6501 Deep Exploration Via Bootstrapped Dqn Pdf

Hardmaru Interesting Result We Find That The Full Experience Replay Of A Dqn Agent Comprising About 50 Million Tuples Of Observation Action Reward Next Obs Is Enough To Learn Strong

Agent57 Outperforming The Human Atari Benchmark Deepmind

Striving For Simplicity In Off Policy Deep Reinforcement Learning Arxiv Vanity

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Arxiv Org Pdf 1803

Deep Reinforcement Learning Deepmind

Www Cs Toronto Edu Vmnih Docs Dqn Pdf

Dqn With Cnn Recreating The Google Deepmind Network Datahubbs

Atari Solving Games With Ai Part 1 Reinforcement Learning By Greg Surma Towards Data Science

Deep Reinforcement Learning Pong From Pixels

Q Tbn 3aand9gctxcype9a6bgkw4ikxynexnmwsmtorkzm1cbq Usqp Cau

Google Deepmind Dqn Plays Atari Breakout Nickyschannel13 Youtube

Vanilla Deep Q Networks Deep Q Learning Explained By Chris Yoon Towards Data Science

Dqn And Averaged Dqn Performance In The Atari Game Of Breakout The Download Scientific Diagram

Jane Street Tech Blog Playing Atari Games With Ocaml And Deep Reinforcement Learning

Google Ai Blog An Optimistic Perspective On Offline Reinforcement Learning

The Fairly Accessible Guide To The Dqn Algorithm Mc Ai

Dqn Solution Results Peak At 35 Reward Issue 30 Dennybritz Reinforcement Learning Github

Sridhar Thiagarajan Deep Q Networks And Double Dqn

Multiagent Cooperation And Competition With Deep Reinforcement Learning

Beyond Dqn A3c A Survey In Advanced Reinforcement Learning By Joyce Xu Towards Data Science

Welcome To Deep Reinforcement Learning Part 1 Dqn By Takuma Seno Towards Data Science

Deepmind S Atari Paper Replicated That S So Deep Dude

Creating A Zoo Of Atari Playing Agents To Catalyze The Understanding Of Deep Reinforcement Learning Uber Engineering Blog

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Github Kaleabtessera Dqn Atari Deep Q Learning Dqn Implementation For Atari Pong

Human Level Control Through Deep Reinforcement Learning Nature

Web Stanford Edu Class Psych9 Readings Mnihetalhassibis15naturecontroldeeprl Pdf

Dqn Breakout Youtube

Understanding Dqn Her Deep Robotics

Ape X Dqn Explained Papers With Code

Human Level Control Through Deep Reinforcement Learning Nature

Dqn Explained Papers With Code

Normalized Scores On 57 Atari Games Tested For 100 Episodes Per Game Download Scientific Diagram

Deep Learning Research Review Reinforcement Learning

Reinforcement Learning And Dqn Learning To Play From Pixels Ruben Fiszel S Website

Deep Reinforcement Learning Doesn T Work Yet

Dqn Stuck At Suboptimal Policy In Atari Pong Task Artificial Intelligence Stack Exchange

Demystifying Deep Reinforcement Learning Computational Neuroscience Lab

Deepmind Dqn Tutorial Installation And Explanation Of The Atari Playing Agent Youtube

Is Deep Reinforcement Learning Really Superhuman On Atari Mc Ai

Artificial Intelligence Can Beat Humans At 31 Atari Games Nvidia Developer News Center

Deep Reinforcement Learning Doesn T Work Yet

Dqn Solution Results Peak At 35 Reward Issue 30 Dennybritz Reinforcement Learning Github

Lec3 Dqn

Demystifying Deep Reinforcement Learning Computational Neuroscience Lab

Beat Atari With Deep Reinforcement Learning Part 1 Dqn By Adrien Lucas Ecoffet Becoming Human Artificial Intelligence Magazine

Q Tbn 3aand9gcrvwp8h1jy 6no6fo556ugdxbz33nri07w Rq Usqp Cau

Python Lessons

Dqn The Startup Medium

Deep Q Learning An Introduction To Deep Reinforcement Learning

Q Tbn 3aand9gcrrbfxo3sj1tsfucd3p6jyoqwaqowkqeypq Usqp Cau

Deep Reinforcement Learning With Decorrelation Deepai

Github Neo 47 Atari Dqn The Code For The Famous Dqn Paper Applied On Atari S Breakout

Why Isn T My Dqn Agent Improving When Trained On Atari Breakout Artificial Intelligence Stack Exchange

Dqn And Averaged Dqn Performance In The Atari Game Of Breakout The Download Scientific Diagram

Double Dqn Explained Papers With Code

David Silver Google Deepmind Deep Reinforcement Learning Synced