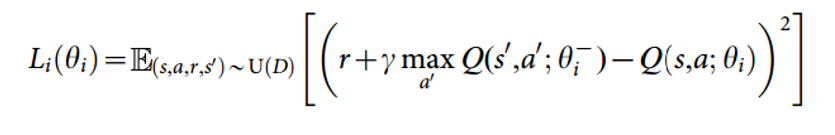

Dqn Loss Function

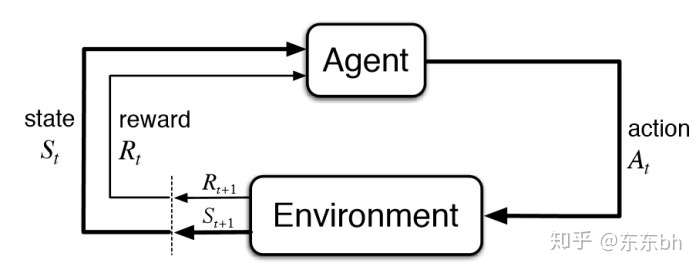

Deep Learning Research Review Reinforcement Learning

Let S Make A Dqn Debugging ヤロミル

Reinforcement Learning Part 2 Getting Started With Deep Q Networks Novatec

Mit 6 S094 Deep Learning For Self Driving Cars 18 Lecture 3 Notes Deep Reinforcement Learning Hacker Noon

Deep Q Learning An Introduction To Deep Reinforcement Learning

Double Deep Q Networks Tackling Maximization Bias In Deep By Chris Yoon Towards Data Science

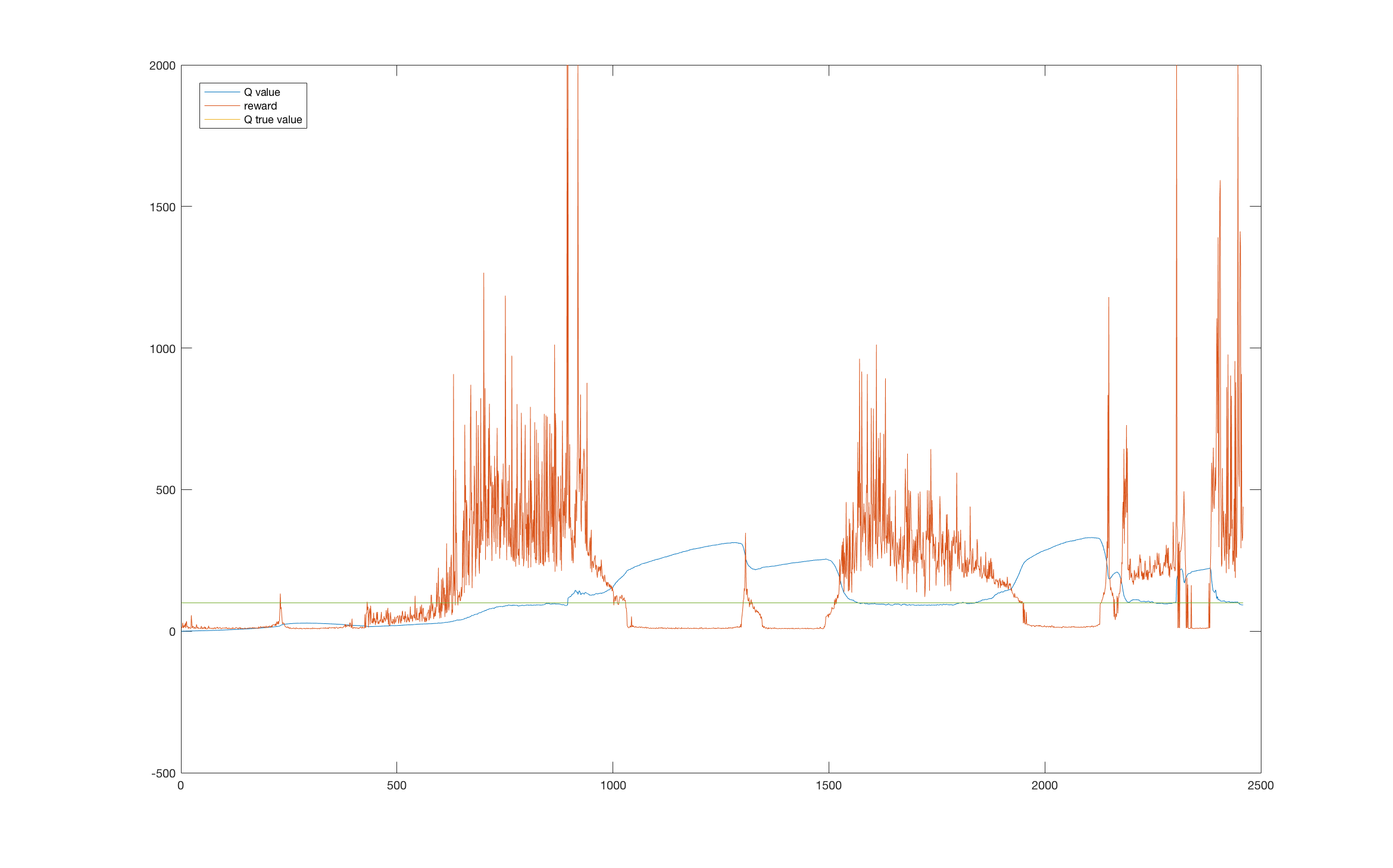

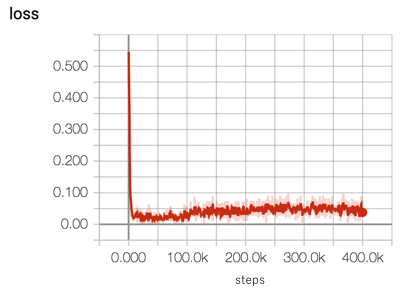

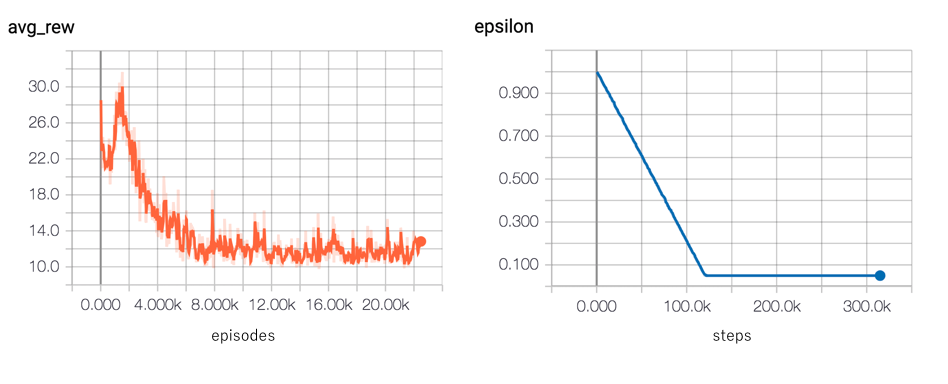

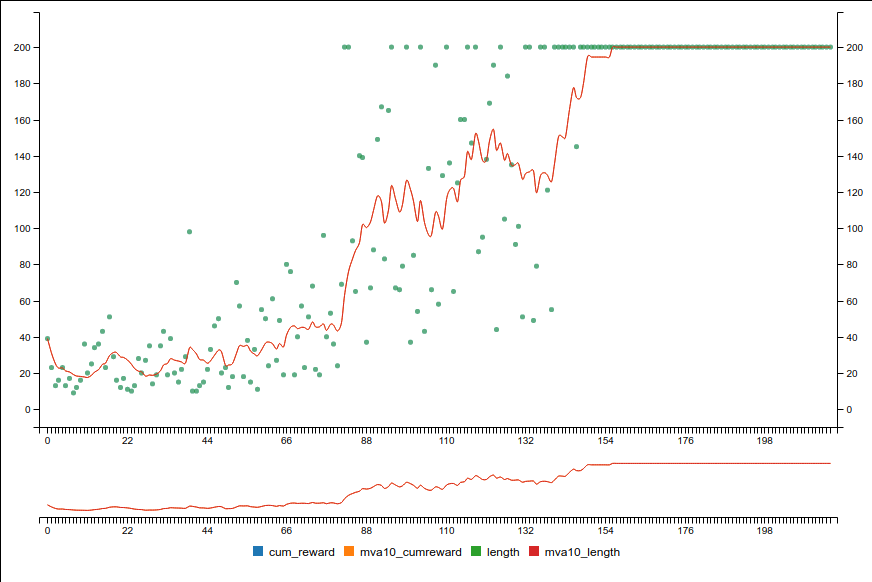

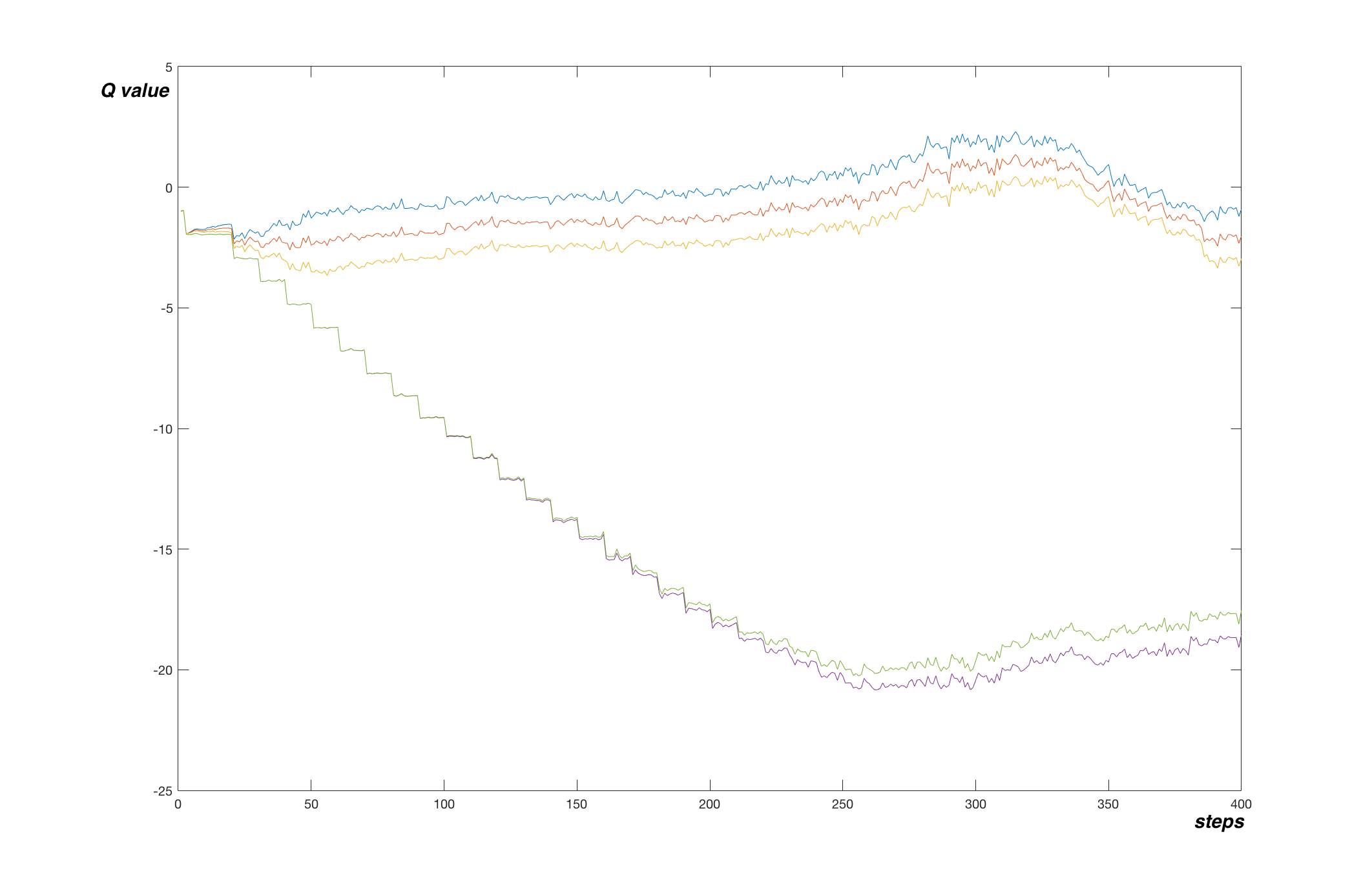

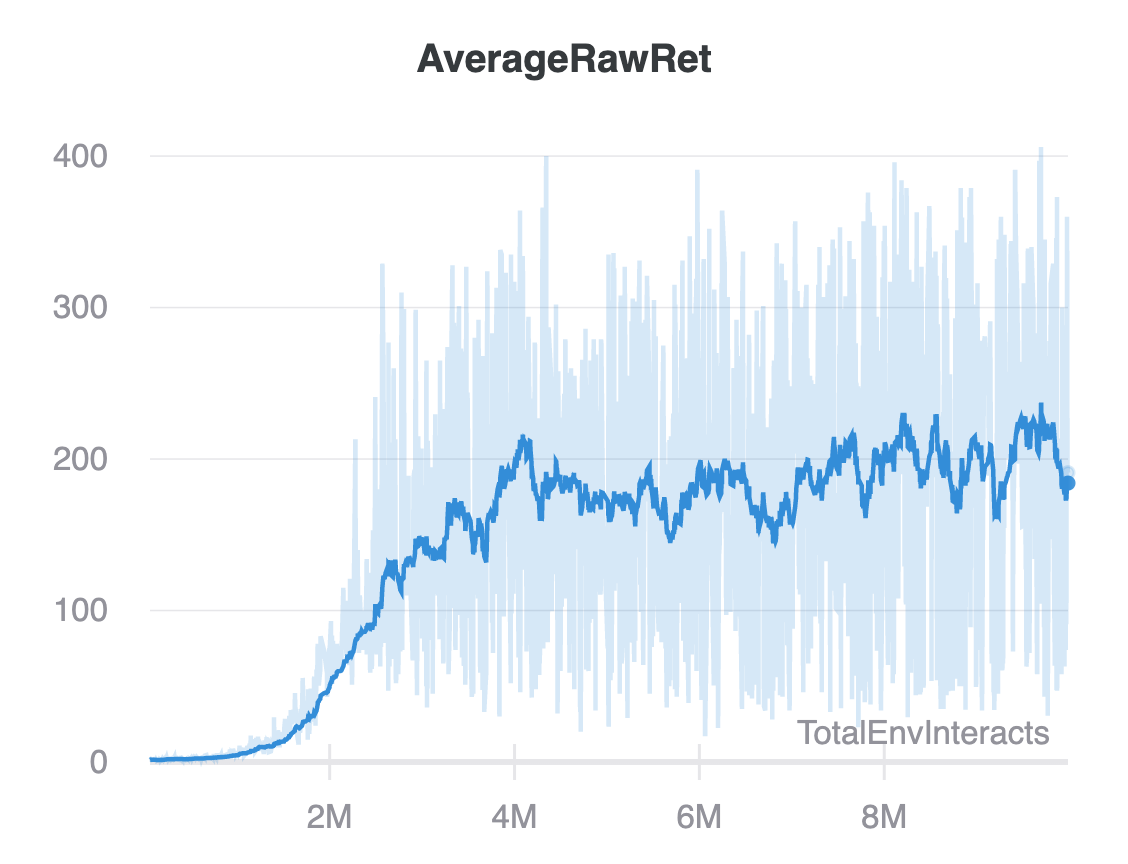

Attached is the plot for loss function.

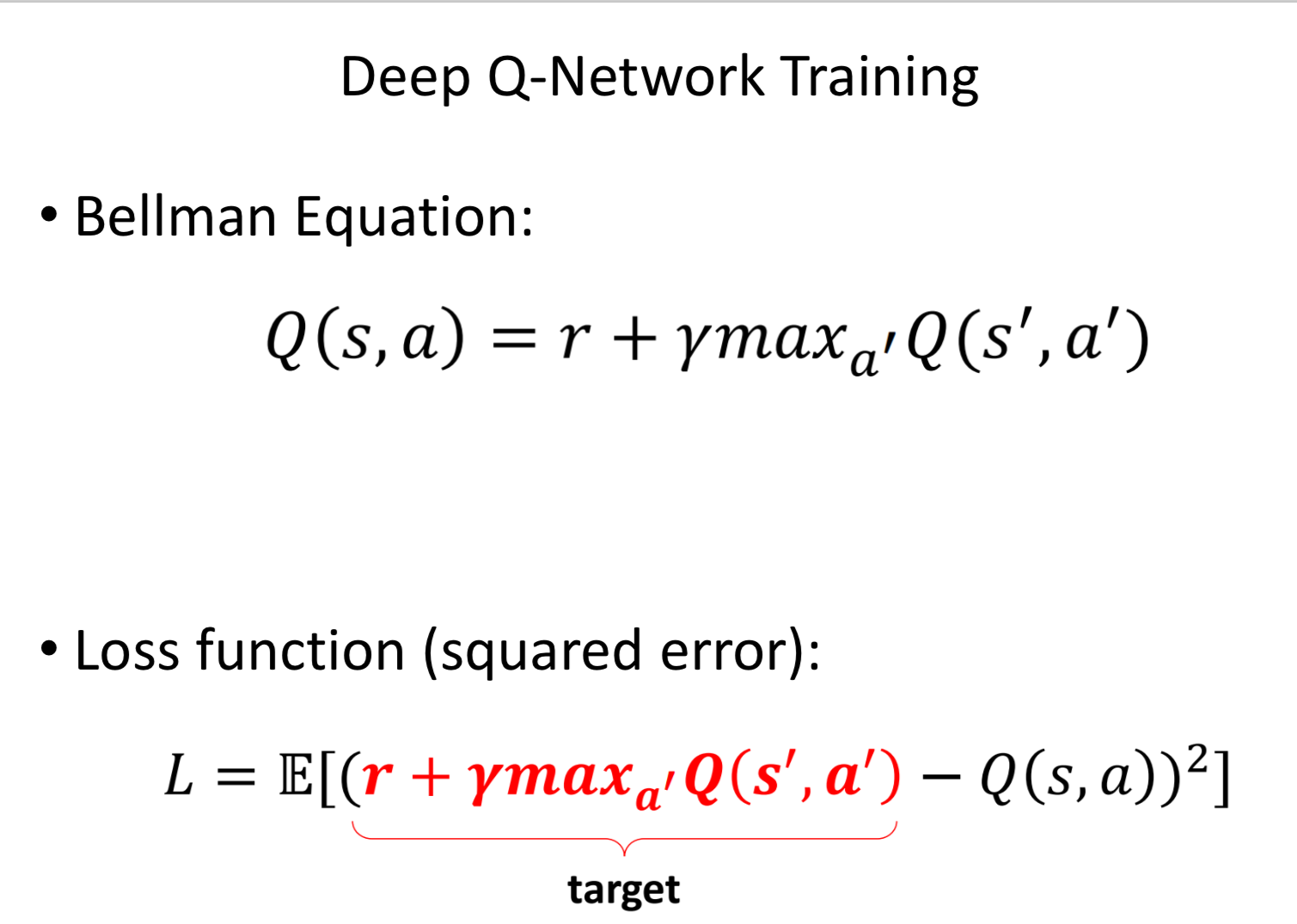

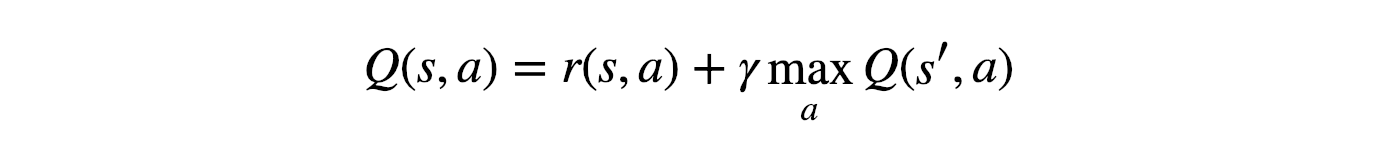

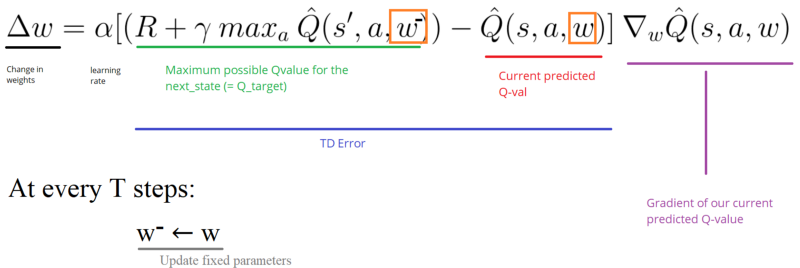

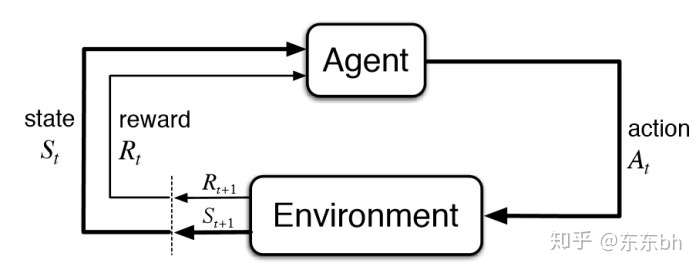

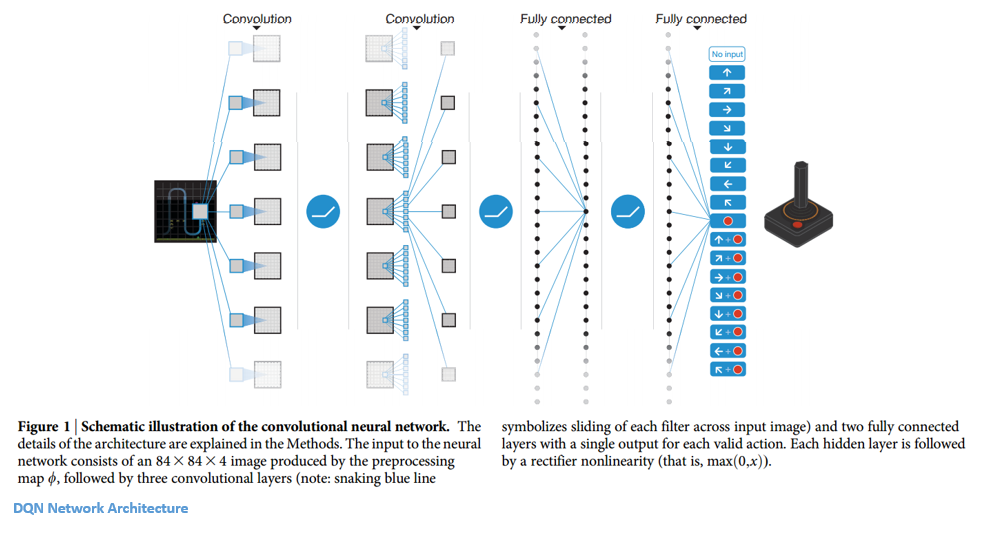

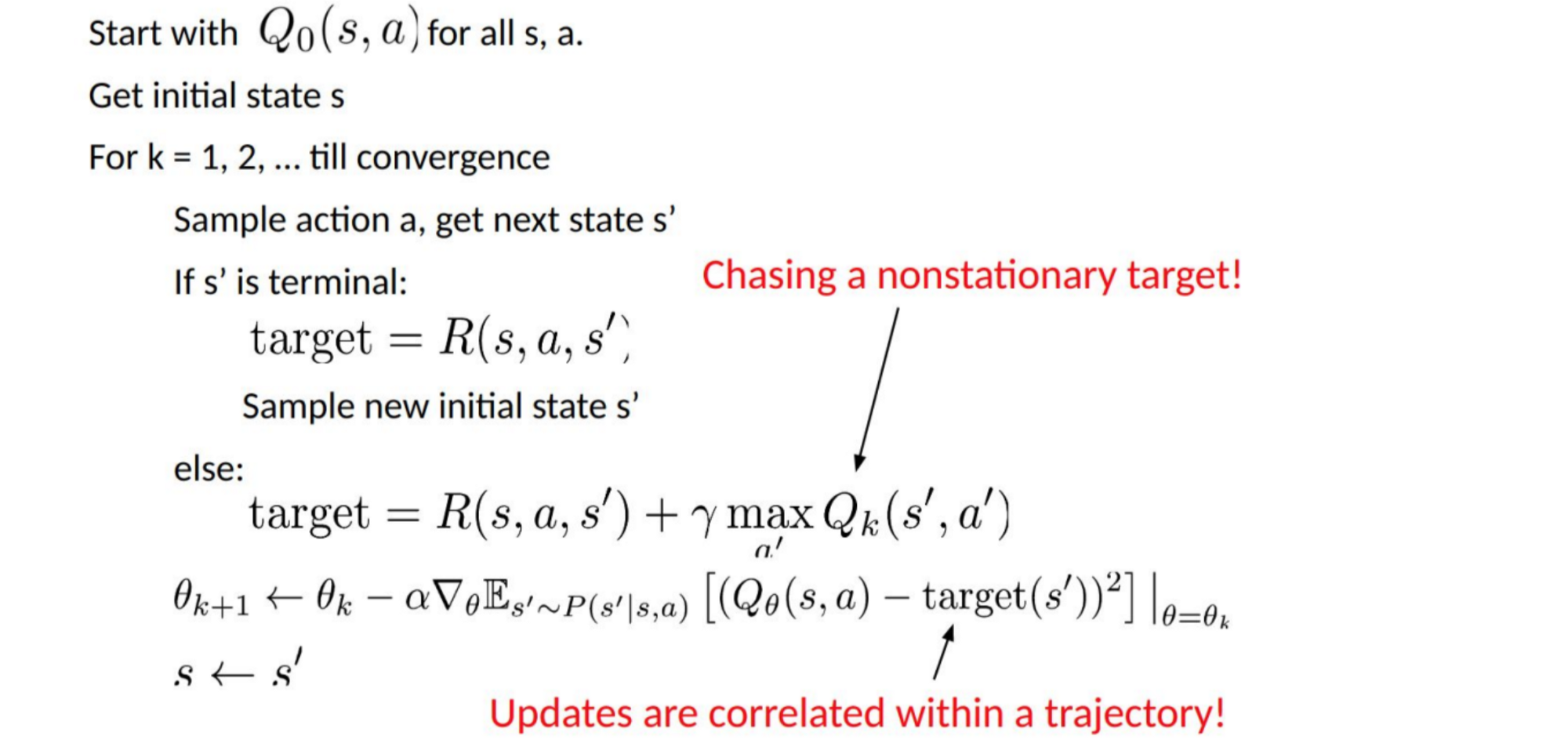

Dqn loss function. As shown in equation (), the dueling DQN divides the output of the neural network into two parts:. Final glue – loss functions and optimizers The network which transforms input data into output is not enough to start training it. Hard update from the local model to the target model.

(5) Thus, the modied loss function forces the model to learn that the most valuable actions are those. We used GAE to estimate advantage functions. For some reason, the loss function seems to converge too quickly before the network has learned anything valuable.

Instead, all gradients are truncated to norm 10. By adding value function regression loss and entropy bonus we have the loss function:. This also generalizes to multiple actions, unlike the threshold we were using before.

As such, the objective function is often referred to as a cost function or a loss function and the value calculated by the loss function is referred to as simply “ loss.” The function we want to minimize or maximize is called the objective function or criterion. Both are pretty simple concepts. In the later parts of this series, we will explore numerous variants of DQN that improves the original in numerous areas.

Further, whenever we call load_model(remember, we needed it for the target network), we will need to pass custom_objects={'huber_loss':. Krizhevsky et al., 12) together with the Q-learning algorithm. Passing these two values through a softmax function gives the probabilities of taking the respective actions, given a set of observations.

How is the expected value in the loss function of DQN approximated?. Thus, given the same length of history, recurrency is a viable alternative to stacking a history of frames in the DQN’s input layer and while. 2 and modified reward function in Eq.

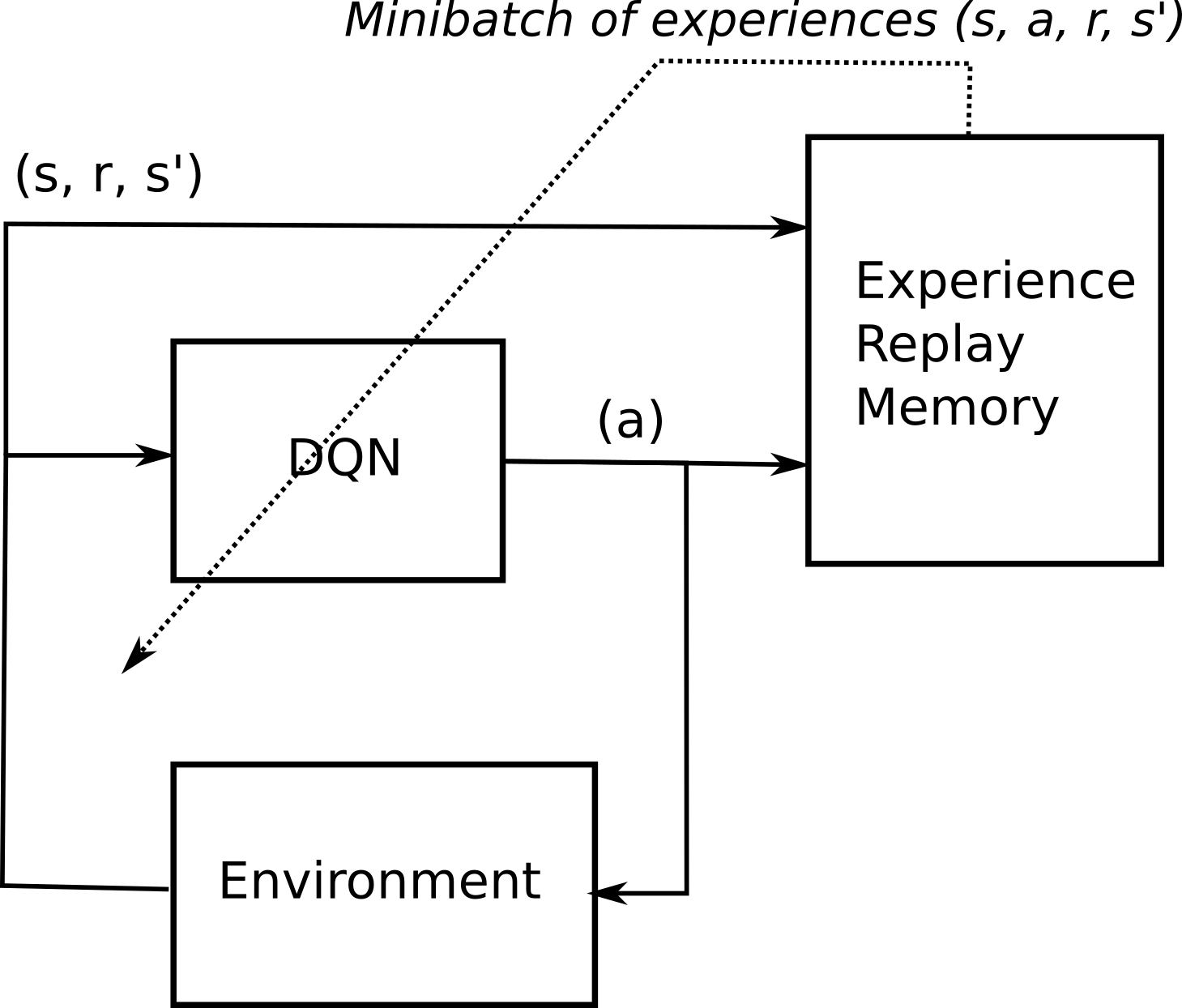

The most notable features of the DQN algorithm are memorize and replay methods. I) r i Q(s;a;. This is basically a regression problem.

Use this loss function:. Below we see how the Q function, basically updates to give the biggest Q value on state. 3, the new loss function and learned policy for our model are, L( ) = E (logp(ajs) + 1 c r MT(a;s) + max a0 Q(s0;a0;.

Loss should decrease w.r.t to a single target network. Reinforcement-learning q-learning deep-rl dqn loss-functions. The network's output and the desired output.

How can I create a diagram in tikz that contains both images, blocks of text and tikzpictures, which are all connected by arrows?. θ i)) 2 (I added in an extra 1 / 2 that they were missing, and note that their r = R ( s, a, s ′) .) This looks very similar to the loss function I had before. The loss will decrease and score will grow higher.

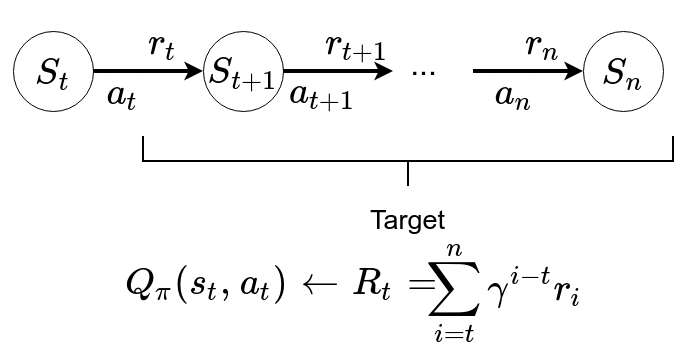

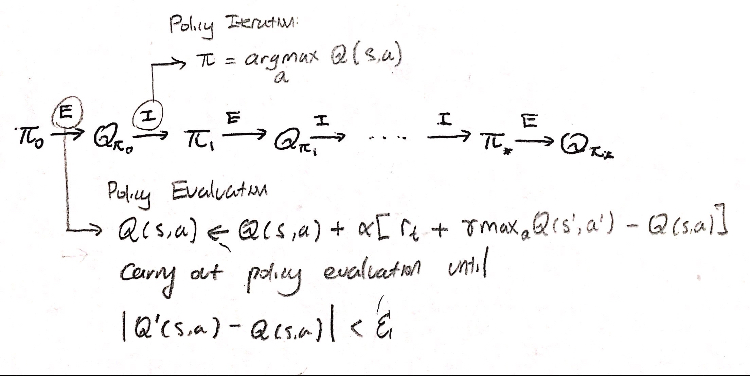

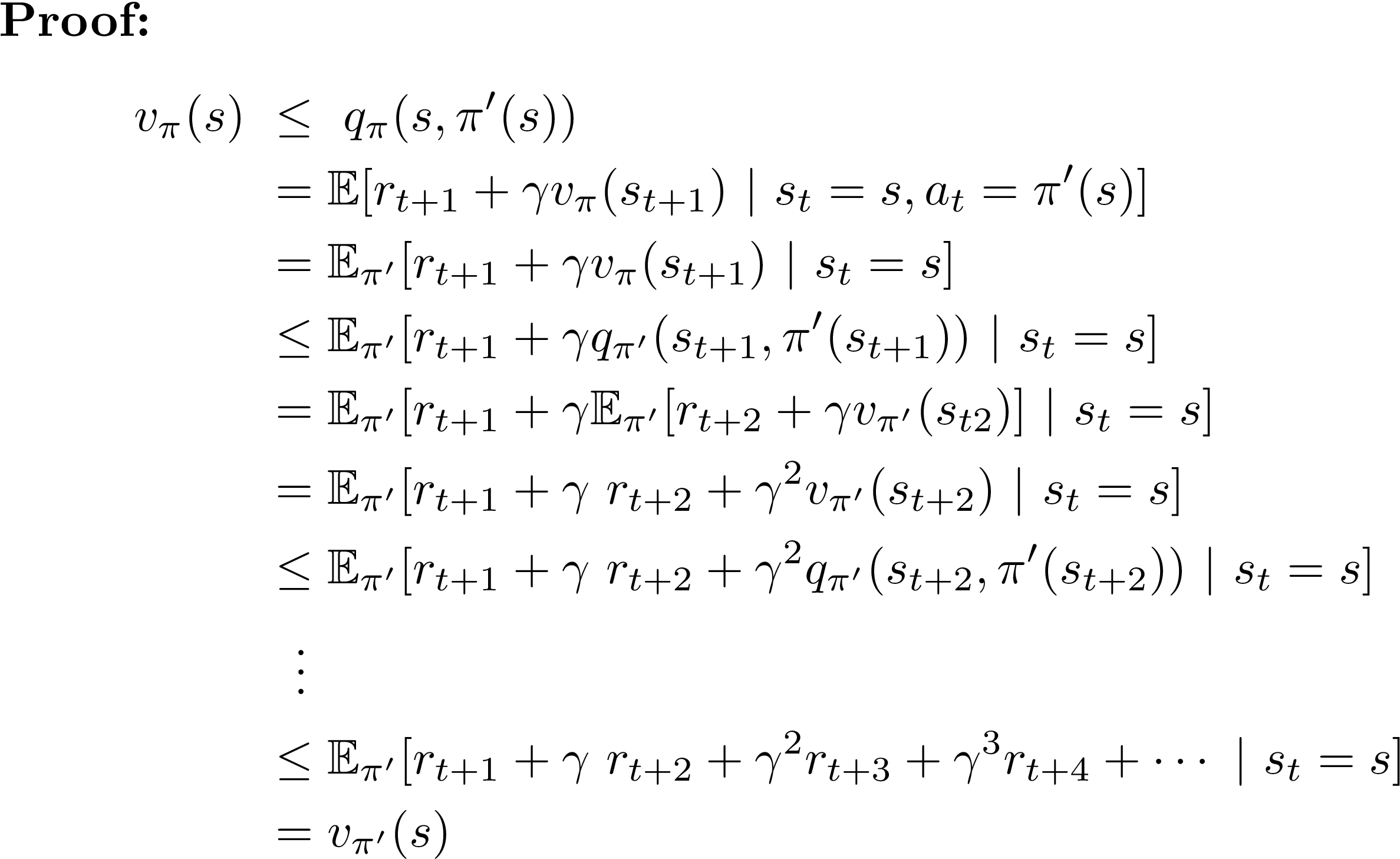

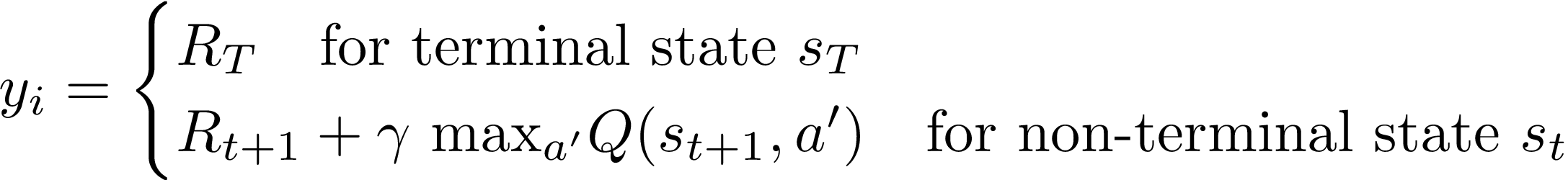

The approximation of the Q-value converges to the true Q-value as we repeat the updating process. Improve policy with advantage weighted gradients, maximize the entropy, and minimize value estimate errors. Given the DQN loss function in Eq.

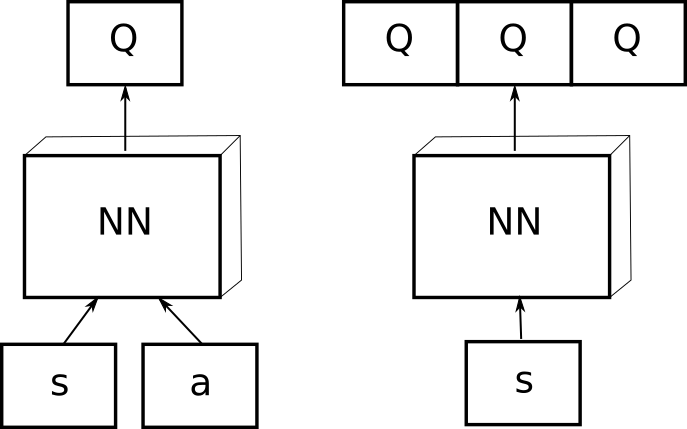

I've attempted several loss functions and optimizers with the same result. In ‘normal’ neural networks the loss function is straigtforward as for each training example there is a known expected outcome. The tricky and crucial part is the loss function.

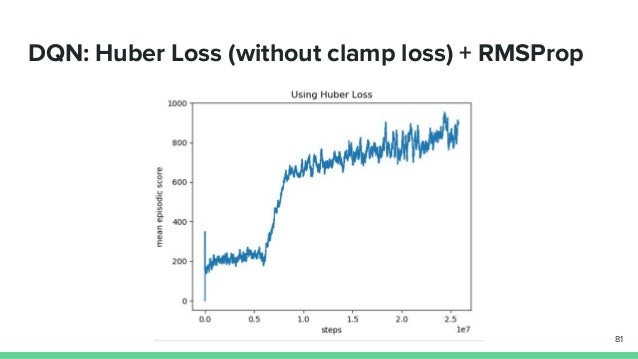

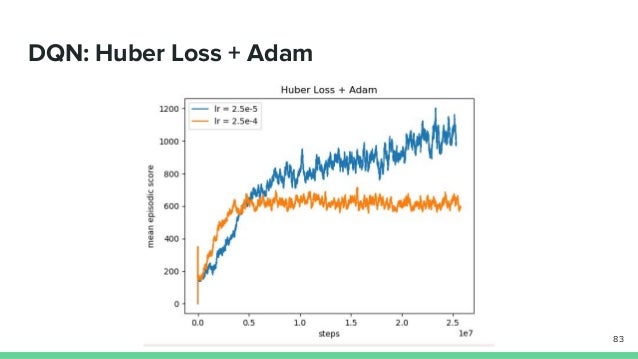

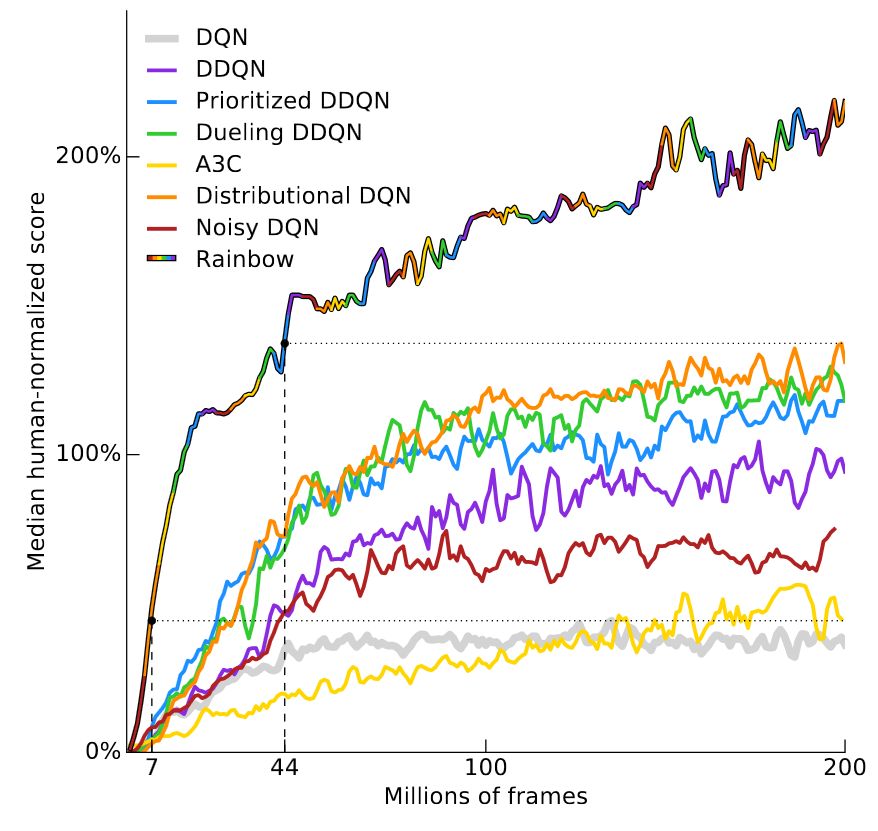

Now that we have Huber loss, we can try to remove our reward clipping from last time!. (A3C) method which generally performs better than variants of DQN on almost all games in the Atari suite, including Space Invaders. The agent is not learning the proper policy in this case.

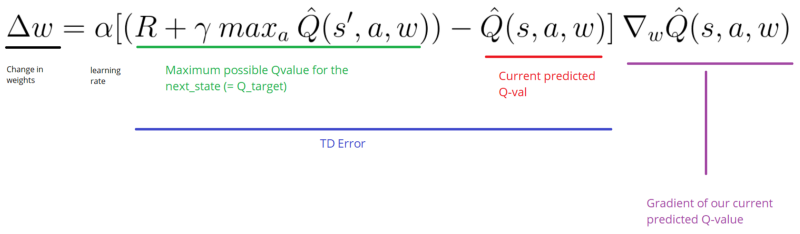

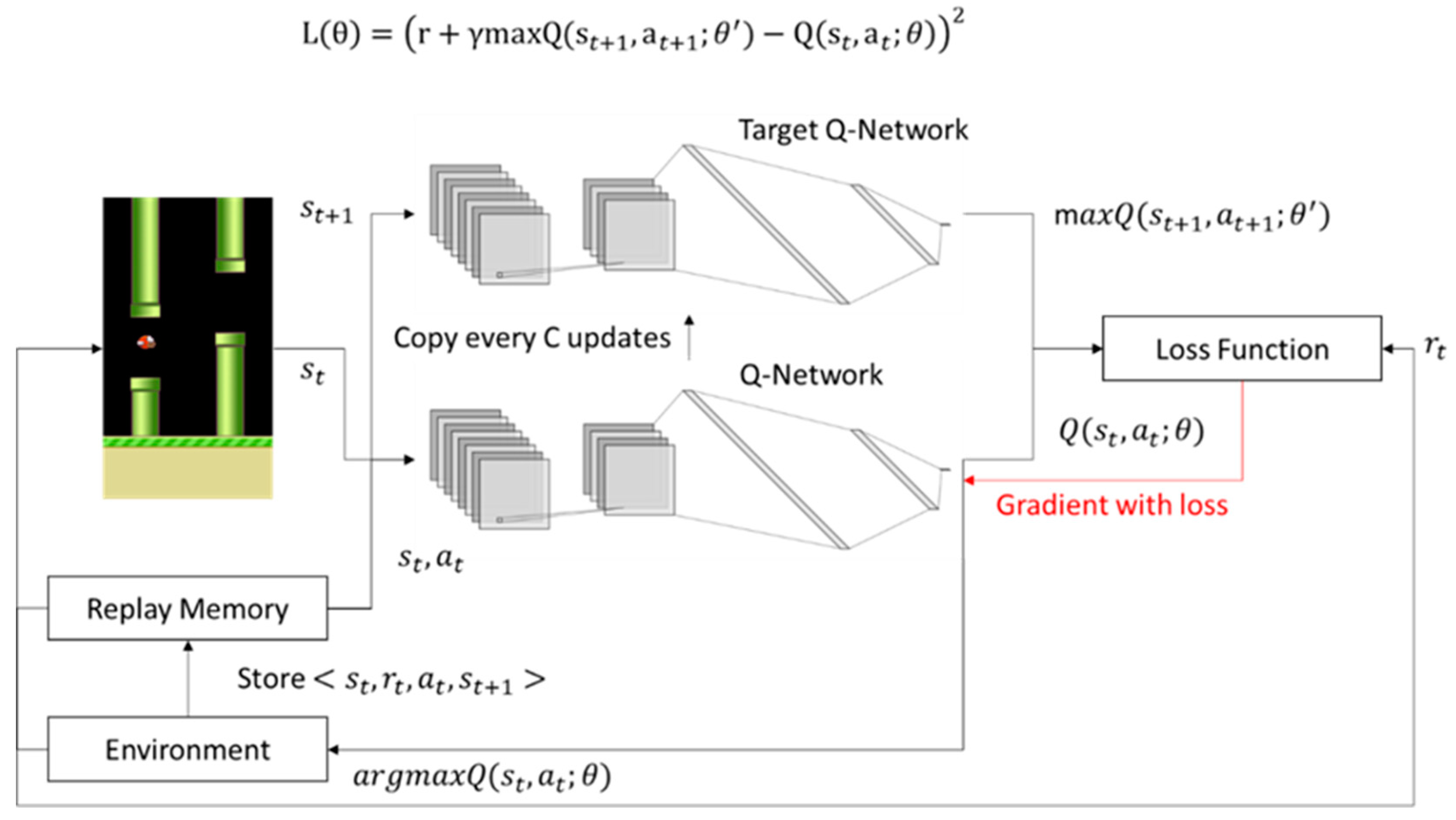

We backpropagate this loss through the DQN and tweak the weights of the Q-network ever so slightly to reduce the loss. Furthermore, keras-rl works with OpenAI Gym out of the box. Given the DQN loss function in Eq.

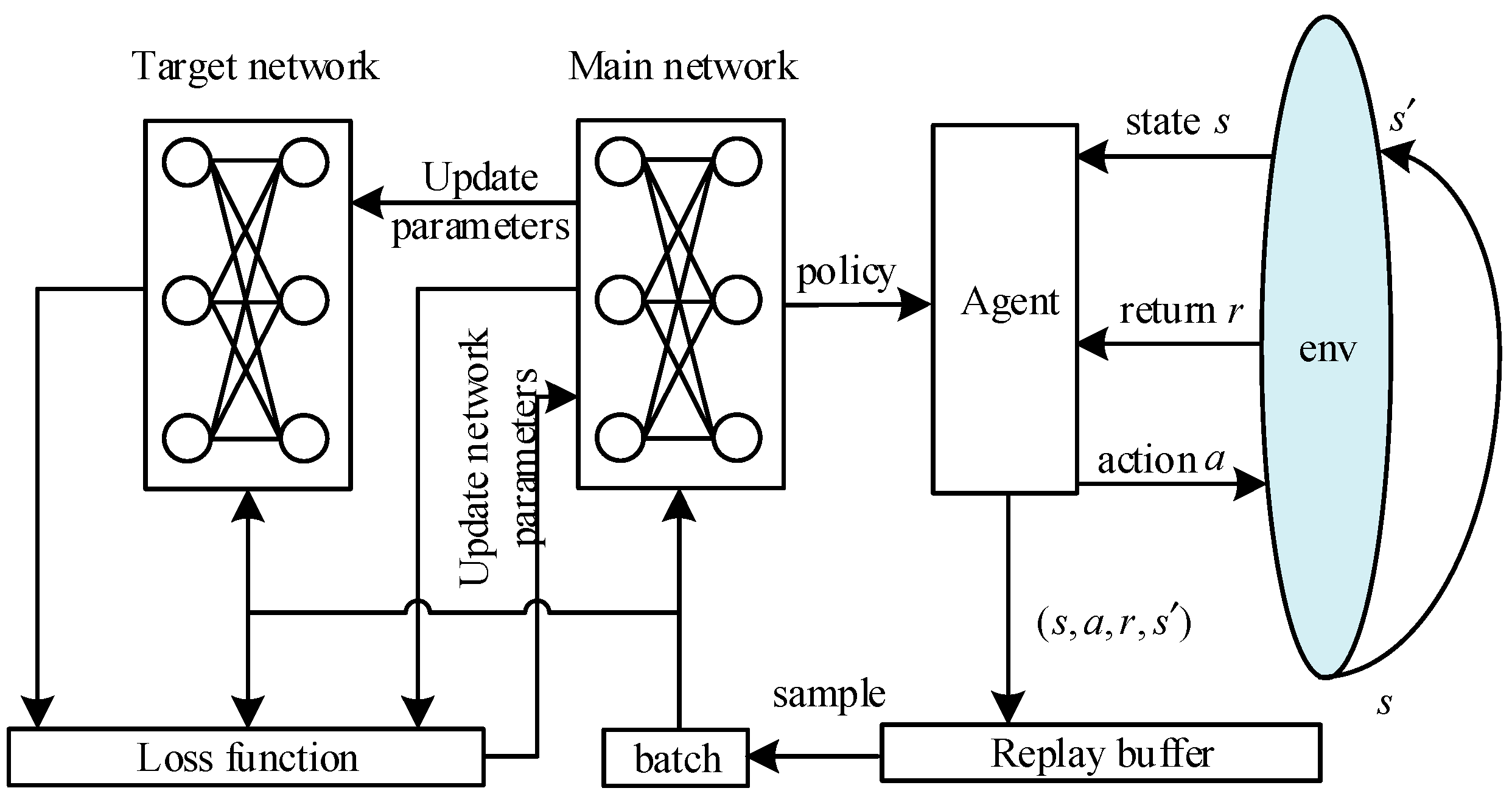

The Atari DQN work introduced a technique called Experience Replay to make the network updates more stable. The mean Q-values should smoothly converge towards a value proportionnal to the mean expected reward. Modification of internal convolutional structure follows with respect to newly learnt action/reward mappings.

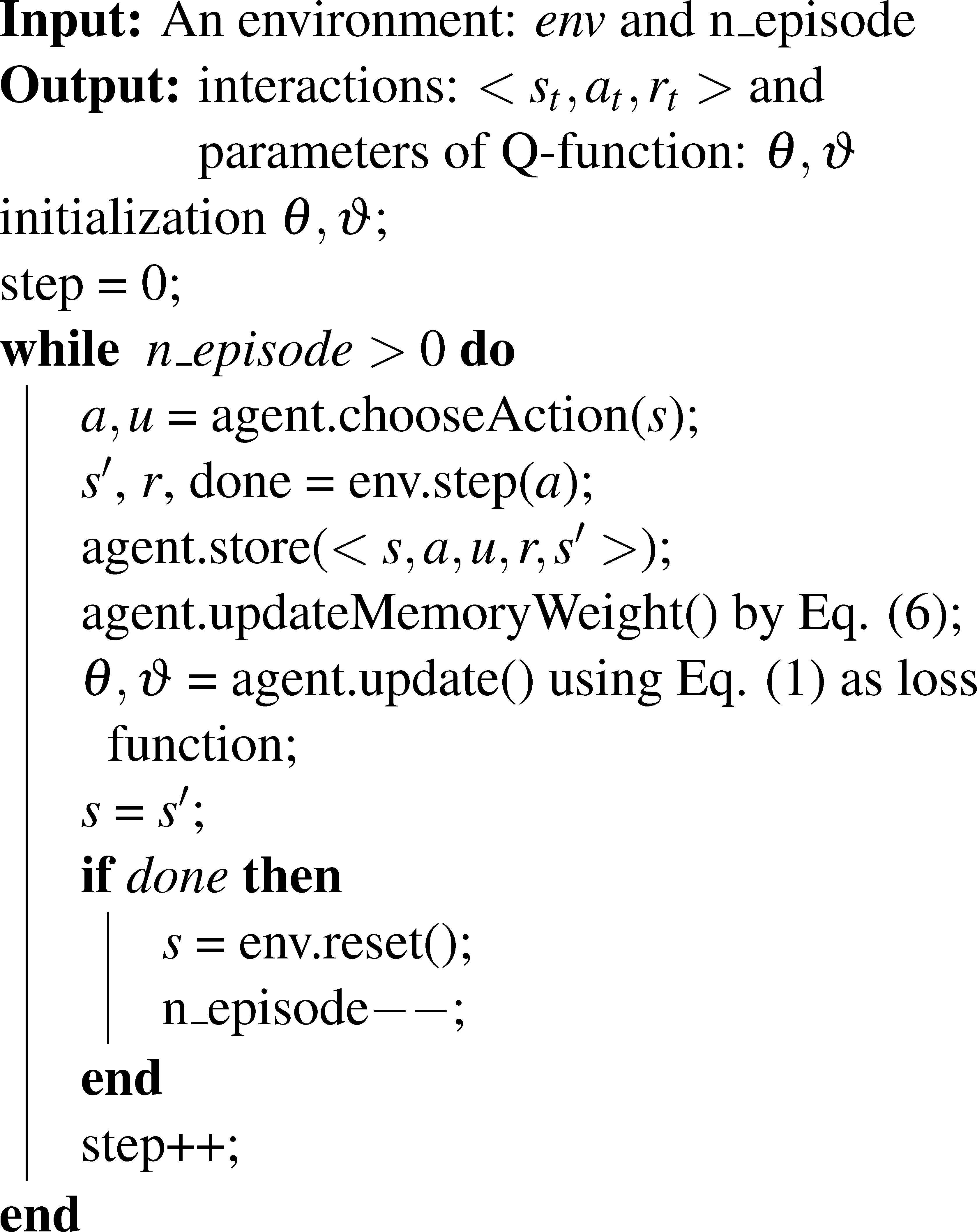

If the loss is computed using just the last transition ${s, a, r, s'}$, this reduces to standard Q-Learning. Loss function Let’s go through a few more implementation details to improve performance. Update the model by gradient descent.

Does the Q-Loss have to converge for DQN algorithm?. Playing Atari with Deep Reinforcement Learning (Mnih et al. The state-value function and the state-dependent action advantage function.

The loss formula given is also the one you would use for DQN without experience replay. Hot Network Questions Pay off half mortgage vs invest in stocks Drop-down Form and Check Box Combined—Bad Practice?. Although an MLP is used in these examples, the same loss functions can be used when training CNN and RNN models for binary classification.

Does this look familiar?. Going back to the Q-value update equation derived fromthe Bellman equation. 3, the new loss function and learned policy for RL Tuner are, L ( ) = E (log p(ajs)+ rMT (a;s )=c + max a 0 Q (s0;a0;.

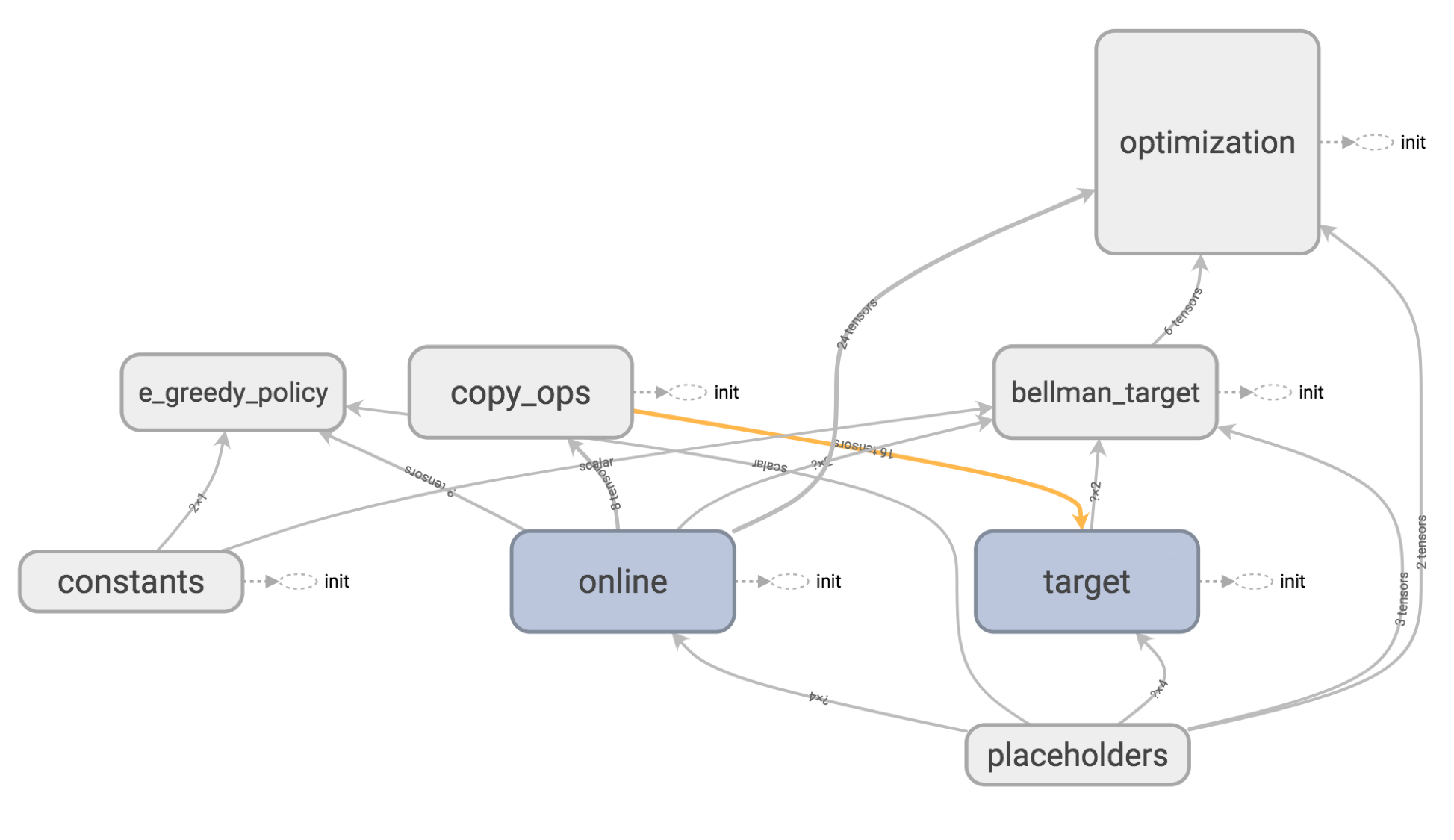

Variable (0, trainable=False) self. Keras-rl implements some state-of-the art deep reinforcement learning algorithms in Python and seamlessly integrates with the deep learning library Keras. Loss Function and Target Networks.

11 1 1 bronze badge. Where and represent the parameters of the state-value function and action advantage function, respectively and represents the rest parameters of the network.3. Therefore, with the current code whenever the discrepancy is above min/max delta, the gradient becomes exactly zero in backprop.

Neural network loss function for predicting the Q values l2_loss = (predicted — actual) **2 assume the “ predicted ” gives the list of action values from the neural network where we take the. In the Nature paper, they express the loss function as:. When writing the call method of a custom layer or a subclassed model, you may want to compute scalar quantities that you want to minimize during training (e.g.

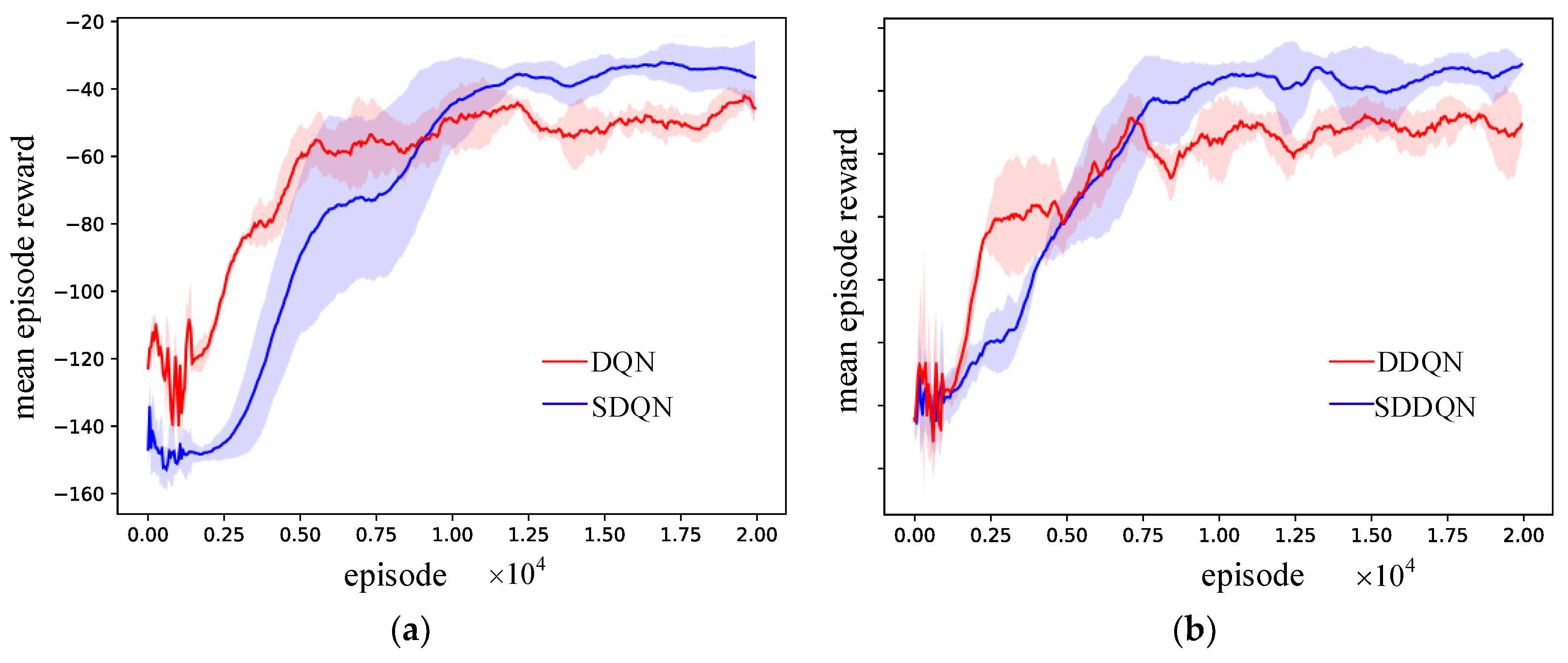

RL4J is available on github. As a consequence, we are able to converge our loss function faster while achieving a more stable learning. DQL+Pop-art Proximal Policy Optimization improve a surrogate objective vial stochastic gradient descent:.

Press J to jump to the feed. When a nonlinear function approximator such as a neural network is used to represent the action-value (also known as Q) function. “DeepMind” RmsProp was learning.

AdamOptimizer), a loss function, and an integer step counter. The recent Deep Q-Network (DQN) algorithm (Mnih et al., 13), was the first to successfully combine a powerful non-linear function approximation technique known as Deep Neural Network (DNN) (LeCun et al., 1998;. Q-function updates in DQN.

) Q (s;a ;. This function decreases the gap between our prediction to target by the learning rate. DQN presented a remarkably flexible and stable algorithm, showing success in the majority of games within the Arcade.

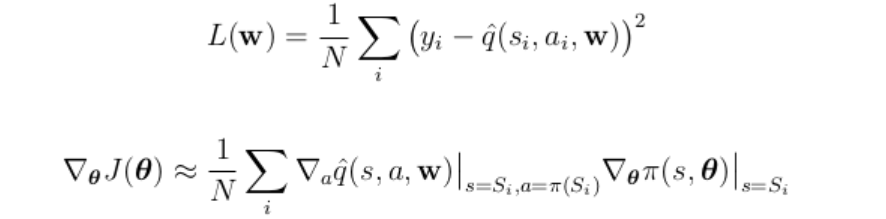

This blog post will demonstrate how deep reinforcement learning (deep Q-learning) can be implemented and applied to play a CartPole game using Keras and Gym, in less than 100 lines of code!. Therefore, with the loss function defined as above, we perform a gradient step on the loss function according to:. Specifically, like any other deep neural architecture, the model learns by minimizing error (producing better reward/action mappings) over time.

1answer 47 views Is there a way of deriving a loss function given the neural network and training data?. You've reached the end of your free preview. To avoid computing the full expectation in the DQN loss, we can minimize it using stochastic gradient descent.

))2 (4) ˇ (ajs) = (a= argmax a Q(s;a;. The loss plotted below is the L2 loss, WITHOUT the huber loss applied on top of it as in the original DQN paper. Huber_loss as an argument to tell Keras where to find huber_loss.

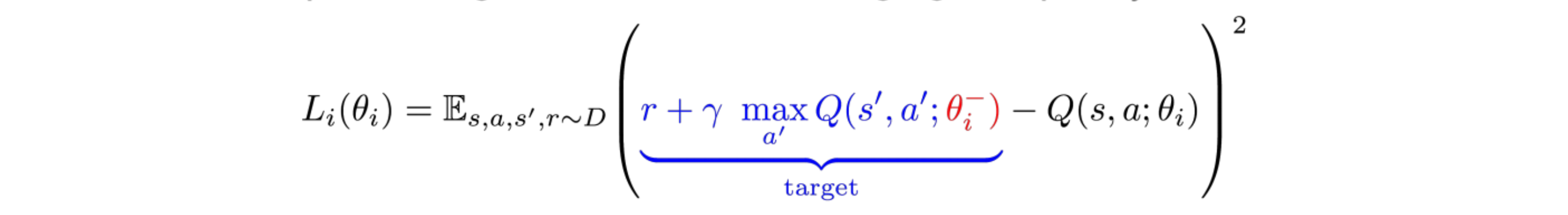

L iðÞh ~ ðÞs,a,r,s0 *UðÞD rzcmax a0 Q(s0,a0;h{i){QðÞs. Press question mark to learn the rest of the keyboard shortcuts. The Q-learning update at iteration i uses the following loss function:.

Q-function updates in DQN Notice that Q is now parameterized by the weights, w. This means that evaluating and playing around with different algorithms is easy. L i ( θ i) = E ( s, a, r, s ′) ∼ U ( D) 1 2 ( r + γ max a Q ( s ′, a ′;.

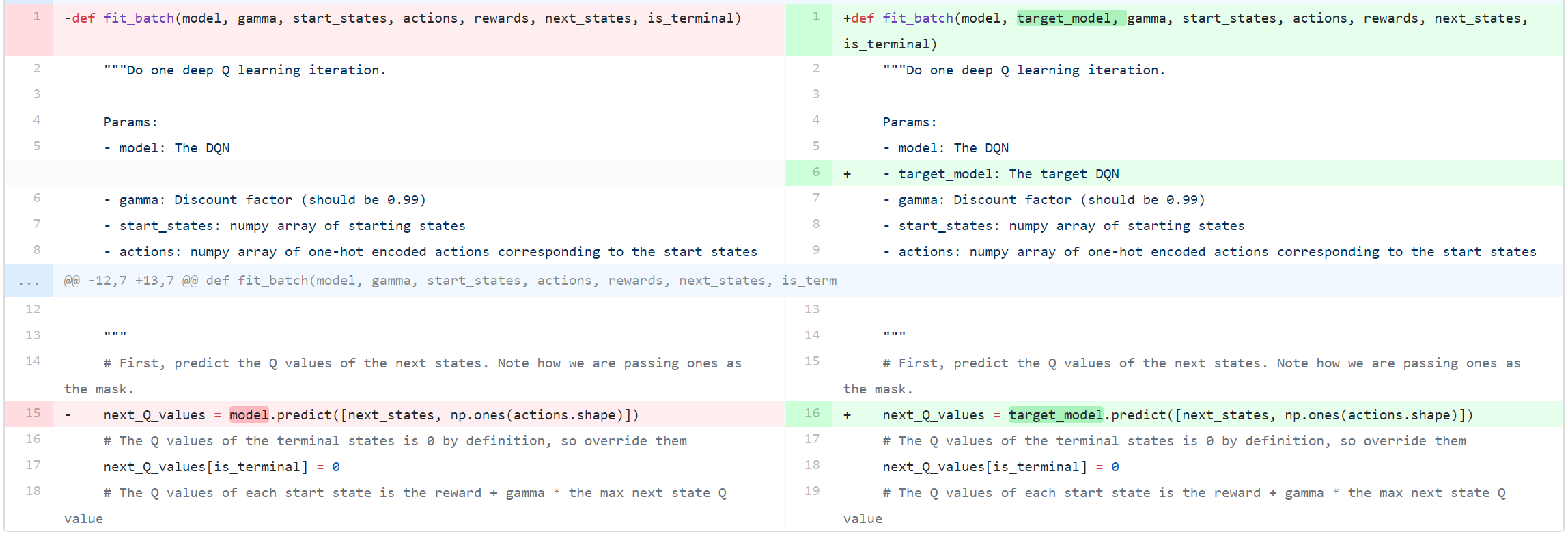

As we can see, the target network has its own set of parameters to optimize, (θ-). However, we do not know the target or actual value here as we are dealing with a reinforcement learning problem. (3) Rather than computing the full expectations in the above gradient, it is often computationally expe-dient to optimise the loss function by stochastic gradient descent.

You can use the add_loss() layer method to keep track of such loss terms. Cross-entropy is the default loss function to use for binary classification problems. Target We’re going to optimize this loss function using the following gradient:.

2 and modied reward function in Eq. I’ll explain everything without requiring any prerequisite knowledge about reinforcement learning. The loss function here is mean squared error of the predicted Q-value and the target Q-value – Q*.

One for each possible action. Loss / Objective Function. There is some sort of art to using the right loss function.

The key was to take Q-learning, but estimate the Q-function with a deep neural network. DQN Recap¶ DQN’s burst on the scene when the cracked the Atari code for DeepMind a few years back. We need to define our learning objective, which is to have a function that accepts two arguments:.

Clipped_delta), name='loss') However, the clip_by_value function's local gradient outside of the min_delta, max_delta range is zero. Initially, I attempted using an L2 loss. (DQN), which is able to combine reinforcement learning with a class.

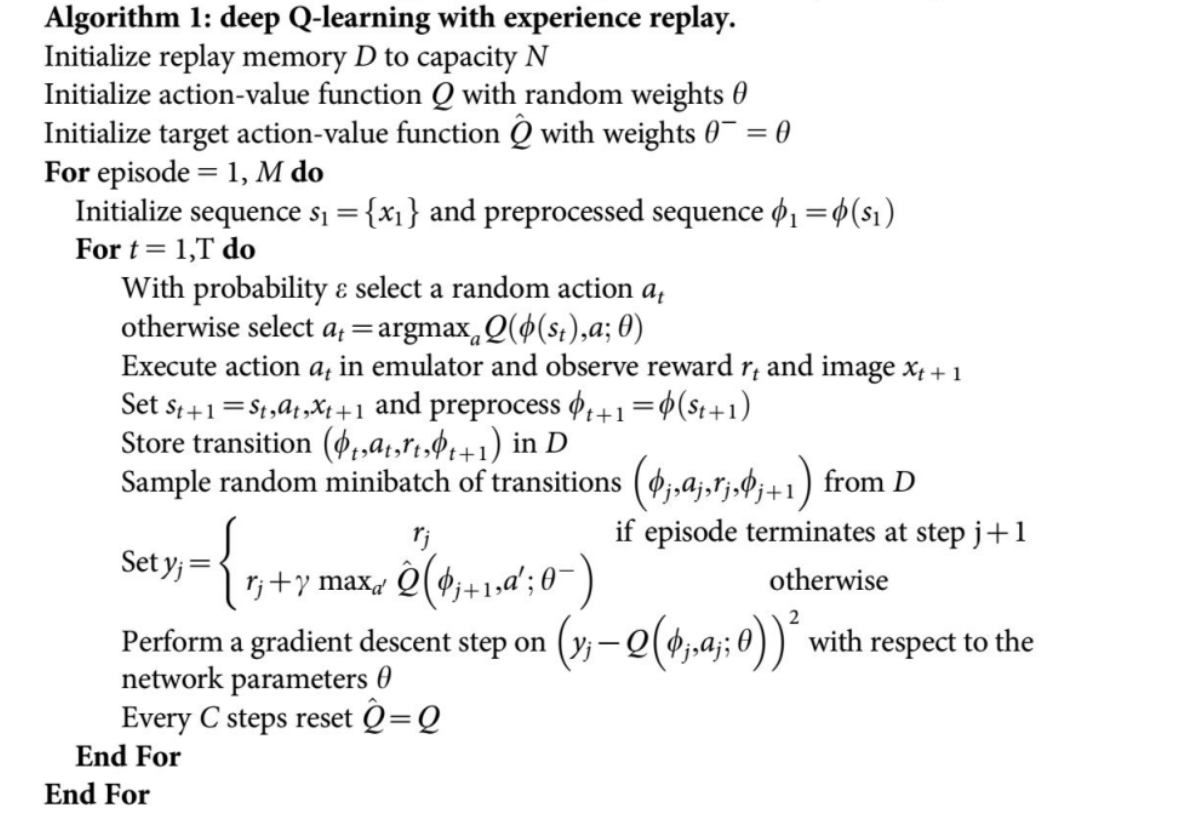

This loss equation tries to make the move probabilities outputted by the DQN more closely match the 100% certainty of the “correct choice” given by the target network. Plot the training progresses. Currently DQN with Experience Replay, Double Q-learning and clipping is implemented.

DQN uses Huber loss (green curve) where the loss is quadratic for small values of a, and linear for large. The DQN loss function is:. From my understanding of DQN, I don't think this has an impact on the issue that I am facing.

Action value function used in the learning updates is proposed. Train the agent during num_frames. Test the agent (1 episode).

Use this loss function:. As I have described in the RL section, an agent improves its policy through gradient descent based on some loss (objective) function. Loss functions applied to the output of a model aren't the only way to create losses.

Con-versely, when trained with full observations and eval-uated with partial observations, DRQN’s performance degrades less than DQN’s. This tutorial shows how to use PyTorch to train a Deep Q Learning (DQN) agent on the CartPole-v0 task from the OpenAI Gym. Use this loss function:.

This equation adjusts Q(s,a) in the direction of the target Q-function updates in DQN. We build a Double DQN system to reduce the bias in training to learn a simple CartPole task. ))2 (4) (ajs) = (a = argmax a Q (s;a ;.

θ i −) − Q ( s, a;. Now, simply using the Q-learning update equation to change the. A master network (parameter server, possibly distributed) gathers the gradients, apply weight updates and synchronizes regularly both the actors and the learners with new parameters.

Formance scales as a function of observability. It is intended for use with binary classification where the target values are in the set {0, 1}. Does minimizing this function mean that we want the Q network to go to the direction of the target network?.

My reasoning for this happening is that the clipped loss is a constant function in (-inf,-1) U (1,inf), which means it has zero gradient in those regions. The difference is only which s, a, r, s', a' you feed into it. Asked Jun 2 at 17:21.

The agent has to decide between two actions - moving the cart left or right - so that the pole attached to it stays upright. This blog post would not be possible without so many amazing resources online!. Differentiating the loss function with respect to the weights we arrive at the following gradient, r i L i( i) = E s;a˘ˆ( );s0˘E h r+ max a0 Q(s0;a0;.

As mentioned above,. The DQN agent can be used in any environment which has a discrete action space. Multiple DQN learners sample from the ERM and compute the gradient of the loss function w.r.t the parameters \(\theta\).

Of course you can extend keras-rl according to your own needs. This is reflected in a bellman styled loss function. In DQN, the DeepMind team also maintained two networks and switched which one was learning and which one feeding in current action-value estimates as "bootstraps".

In the C algorithm, we train on three objectives:. I'm wondering, why Q-loss is not discussed in most of the papers. This decoupling of action selection from evaluation has shown to compensate for the overoptimistic representations that are learned by the naïve DQN.

However, I was wondering if there is a way to derive. Q ( s, a) = r + γ max a ∗ Q ( s ′, a ∗) This equation means that the update happens only for one specific s t a t e, a c t i o n pair and for the neural q-network that means the loss is calculated only for one specific output unit which corresponds to a specific a c t i o n. In my case, learning curve of my DQN implementation flattened after 10M frames around 400 points for traditional RMSProp.

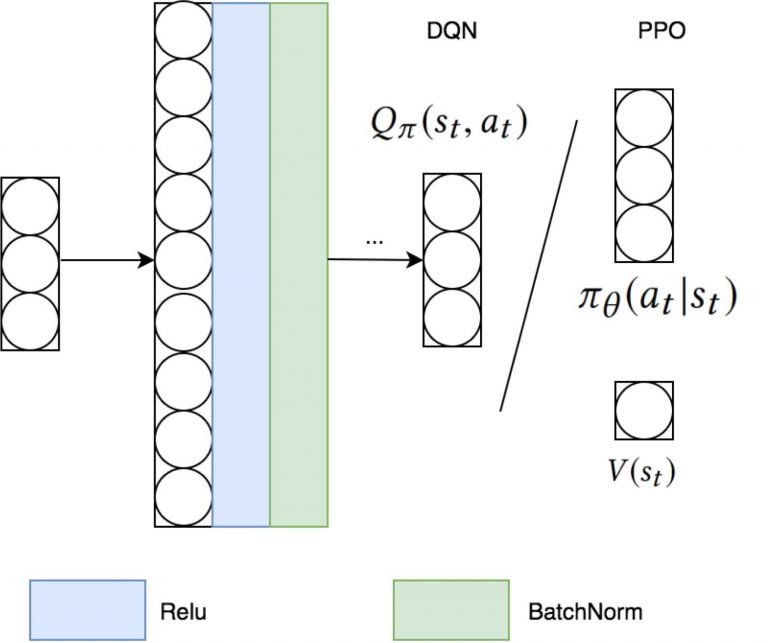

)) (5) Thus, the modified loss function forces the model to learn that the most valuable actions in terms. Where value function loss is given by PPO A B. Instead of only having one linear combination, we have two:.

I printed out the gradients of the network and realized that if the loss falls below -1, the gradients all suddenly turn to 0!.

Deep Reinforcement Learning From Scratch

Improvements In Deep Q Learning Dueling Double Dqn Prioritized Experience Replay And Fixed

Symmetry Free Full Text Supervised Reinforcement Learning Via Value Function Html

How Can We Make The Training Loss Decrease In Reinforcement Learning

Reinforcement Learning Issue 55 Cbovar Convnetsharp Github

Beyond Dqn A3c A Survey In Advanced Reinforcement Learning By Joyce Xu Towards Data Science

Option Portfolio Replication And Hedging In Deep Reinforcement Learning Poster Nyu Center For Data Science

Http Rail Eecs Berkeley Edu Deeprlcourse Fa17 F17docs Lecture 7 Advanced Q Learning Pdf

Www Insticc Org Primoris Resources Paperpdf Ashx Idpaper

Optimization Of Molecules Via Deep Reinforcement Learning Scientific Reports

What Are Possible Reasons Why Q Loss Is Not Converging In Deep Q Learning Algorithm

Dqn Debugging Using Open Ai Gym Cartpole The Intersection Of Energy And Machine Learning

Deep Deterministic Policy Gradient Spinning Up Documentation

Symmetry Free Full Text Supervised Reinforcement Learning Via Value Function Html

Deep Sarsa Deep Q Learning Dqn

Bayesian Deep Reinforcement Learning Via Deep Kernel Learning Atlantis Press

Dynamicwebpaige 127 0 0 1 I M Absolutely In Love With The Mathematical And Code Annotations For This Blog Post On Reinforcement Learning Building A Powerful Dqn In Tensorflow 2 0

Deep Reinforcement Learning With Decorrelation Deepai

6 Stabilizing Value Based Deep Reinforcement Learning Method Grokking Deep Reinforcement Learning Meap V14 Epub

3

4 7 Loss Functions Rlgraph 0 0 3 Documentation

Www Insticc Org Primoris Resources Paperpdf Ashx Idpaper

Deep Reinforcement Learning With Decorrelation Deepai

Reinforcement Learning And Dqn Learning To Play From Pixels Ruben Fiszel S Website

What Are Possible Reasons Why Q Loss Is Not Converging In Deep Q Learning Algorithm

Double Dueling Agent For Dialogue Policy Learning Ppt Download

Deep Reinforcement Learning 1 Deep Q Network Dqn Programmer Sought

Question Dqn Algorithm Does Not Work Well On Cartpole V0 Reinforcementlearning

Physics Control Tasks With Deep Reinforcement Learning

6 Stabilizing Value Based Deep Reinforcement Learning Method Grokking Deep Reinforcement Learning Meap V14 Epub

Deep Q Learning An Introduction To Deep Reinforcement Learning

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Reinforcement Learning Notes 知乎

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

A Pair Of Interrelated Neural Networks In Deep Q Network By Rafael Stekolshchik Towards Data Science

Deep Q Learning Part2 Double Deep Q Network Double Dqn By Amber Medium

Loss Function Vs Gradient Updates In Policy Gradient Methods Cross Validated

Http Www Cs Toronto Edu Florian Courses Imitation Learning Lectures Lecture4 Pdf

Q Tbn 3aand9gcq0hnlaqiyn34oyeshchpt6yitrgknpmjavifzui9uhgtnuu7sh Usqp Cau

Multiagent Cooperation And Competition With Deep Reinforcement Learning

6 Stabilizing Value Based Deep Reinforcement Learning Method Grokking Deep Reinforcement Learning Meap V14 Epub

Vanilla Deep Q Networks Deep Q Learning Explained By Chris Yoon Towards Data Science

Dqn Debugging Using Open Ai Gym Cartpole The Intersection Of Energy And Machine Learning

Implementing Deep Reinforcement Learning With Pytorch Deep Q Learning

Deep Reinforcement Learning From Scratch

Ch 13 Deep Reinforcement Learning Deep Q Learning And Policy Gradients Towards Agi By Madhu Sanjeevi Mady Deep Math Machine Learning Ai Medium

Deep Learning Research Review Reinforcement Learning

Data Science Database Tools Learning S Video Image Text Data Database Day 199 Introduction To Deep Reinforcement Learning Deep Rl

Dqn Debugging Using Open Ai Gym Cartpole The Intersection Of Energy And Machine Learning

Reinforcement Learning Decreasing Loss Without Increasing Reward Data Science Stack Exchange

Dqn Solution Results Peak At 35 Reward Issue 30 Dennybritz Reinforcement Learning Github

Understanding The Loss Function In Deep Q Learning Artificial Intelligence Stack Exchange

Deep Reinforcement Learning

Improvements In Deep Q Learning Dueling Double Dqn Prioritized Experience Replay And Fixed

Multiagent Cooperation And Competition With Deep Reinforcement Learning

Www Cs Toronto Edu Vmnih Docs Dqn Pdf

Dqn Architecture With The Loss Function Described By L B E R G Download Scientific Diagram

Deep Q Learning 101 Datahubbs

Deep Q Learning An Introduction To Deep Reinforcement Learning

Papers Nips Cc Paper 49 Diversity Driven Exploration Strategy For Deep Reinforcement Learning Pdf

How Can A Dqn Backpropagate Its Loss Artificial Intelligence Stack Exchange

Reinforcement Learning A Deep Dive Toptal

Deep Reinforcement Learning

转 Let S Make A Dqn 系列 Ahu Wangxiao 博客园

转 Let S Make A Dqn 系列 Ahu Wangxiao 博客园

Deep Q Learning An Introduction To Deep Reinforcement Learning

Machine Learning Glossary Google Developers

Deep Reinforcement Learning Doesn T Work Yet

Application Of Deep Neural Network And Deep Reinforcement Learning In Wireless Communication

Why There Are A Sequence Of Loss Functions In Dqn Algorithm Cross Validated

New Ideas In Reinforcement Learning Cerenaut Research

Vanilla Deep Q Networks Deep Q Learning Explained By Chris Yoon Towards Data Science

Cartpole V0 Loss Increasing Using Dqn Stack Overflow

David Silver Google Deepmind Deep Reinforcement Learning Synced

Reinforcement Learning Dqn Tutorial Pytorch Tutorials 1 6 0 Documentation

Http Www Slt18 Org Wp Content Uploads 19 01 7 Yu An wang Pdf

Beat Atari With Deep Reinforcement Learning Part 2 Dqn Improvements By Adrien Lucas Ecoffet Becoming Human Artificial Intelligence Magazine

Puckworld

Reinforcement Learning A Deep Dive Toptal

Reinforcement Learning And Dqn Learning To Play From Pixels Ruben Fiszel S Website

David Silver Google Deepmind Deep Reinforcement Learning Synced

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Reinforcement Learning Part 2 Getting Started With Deep Q Networks Novatec

David Silver Google Deepmind Deep Reinforcement Learning Synced

Electronics Free Full Text Using A Reinforcement Q Learning Based Deep Neural Network For Playing Video Games Html

Q Tbn 3aand9gctw4qrjjlye9llax6ryad9kt8bngfsbgyffeg Usqp Cau

Vanilla Deep Q Networks Deep Q Learning Explained By Chris Yoon Towards Data Science

Csml Princeton Edu Sites Csml Files Resource Links Q Learning Notes Pdf

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Let S Make A Dqn Full Dqn ヤロミル

Rl Dqn Deep Q Network Can Computers Play Video Games Like A By Jonathan Hui Medium

Arxiv Org Pdf 1811

Machine Learning Glossary Google Developers

How To Stop Dqn Q Function From Increasing During Learning Artificial Intelligence Stack Exchange

Dqn Debugging Using Open Ai Gym Cartpole The Intersection Of Energy And Machine Learning

Q Learning And Dqn Efavdb